Seeing the Forest Through the Trees: Brookhaven Lab Scientists Develop New Computational Approach to Reduce Noise in X-ray Data

Software developed at NSLS-II greatly improves data quality for a versatile x-ray technique

April 18, 2022

Scientists from the National Synchrotron Light Source II (NSLS-II) and Computational Science Initiative (CSI) at the U.S. Department of Energy’s (DOE) Brookhaven National Laboratory have helped to solve a common problem in synchrotron x-ray experiments: reducing the noise, or meaningless information, present in data. Their work aims to improve the efficiency and accuracy of x-ray studies at NSLS-II, with the goal of enhancing scientists’ overall research experience at the facility.

NSLS-II, a DOE Office of Science user facility, produces x-ray beams for the study of a huge variety of samples, from potential new battery materials to plants that can remediate contaminated soil. Researchers from across the nation and around the globe come to NSLS-II to investigate their samples using x-rays, collecting huge amounts of data in the process. One of the many x-ray techniques available at NSLS-II to visiting researchers is x-ray photon correlation spectroscopy (XPCS). XPCS is typically used to study material behaviors that are time-dependent and take place at the nanoscale and below, such as the dynamics between and within structural features, like tiny grains. XPCS has been used, for example, to study magnetism in advanced computing materials and structural changes in polymers (plastics).

While XPCS is a powerful technique for gathering information, the quality of the data collected and the range of materials that can be studied are limited by the “flux” of the XPCS x-ray beam. Flux is a measure of the number of x-rays passing through a given area per unit of time, and low flux can lead to too much “noise” in the data, masking the signal the scientists are seeking. Efforts to reduce this noise have been successful for certain experimental setups. But for some types of XPCS experiments, achieving a reasonable signal-to-noise ratio remains a big challenge.
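Why low flux translates into noise can be illustrated with photon-counting statistics. The sketch below assumes, as is standard for photon-counting detectors, that the number of photons landing in a pixel follows a Poisson distribution, for which the relative fluctuation is 1/sqrt(N) at a mean of N photons. The mean-count values are arbitrary illustrations, not NSLS-II measurements.

```python
import math
import random


def poisson_sample(lam: float, rng: random.Random) -> int:
    """Draw one Poisson-distributed photon count (Knuth's multiplication method).

    Fine for the modest mean counts used here; for very large means
    exp(-lam) underflows and a different sampler would be needed.
    """
    limit = math.exp(-lam)
    k = 0
    p = rng.random()
    while p > limit:
        k += 1
        p *= rng.random()
    return k


def relative_noise(mean_counts: float, n_samples: int = 10_000, seed: int = 0) -> float:
    """Estimate std/mean of simulated counts in one pixel at a given mean count."""
    rng = random.Random(seed)
    counts = [poisson_sample(mean_counts, rng) for _ in range(n_samples)]
    mu = sum(counts) / n_samples
    var = sum((c - mu) ** 2 for c in counts) / (n_samples - 1)
    return math.sqrt(var) / mu


# Hypothetical mean photon counts per pixel per frame: low, medium, high flux.
for mean_counts in (1, 10, 100):
    print(f"mean {mean_counts:>3} photons: relative noise {relative_noise(mean_counts):.3f}, "
          f"1/sqrt(N) = {1 / math.sqrt(mean_counts):.3f}")
```

At one photon per pixel the fluctuations are as large as the signal itself, while at 100 photons they drop to roughly 10 percent, which is why a low-flux measurement yields noisier images.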

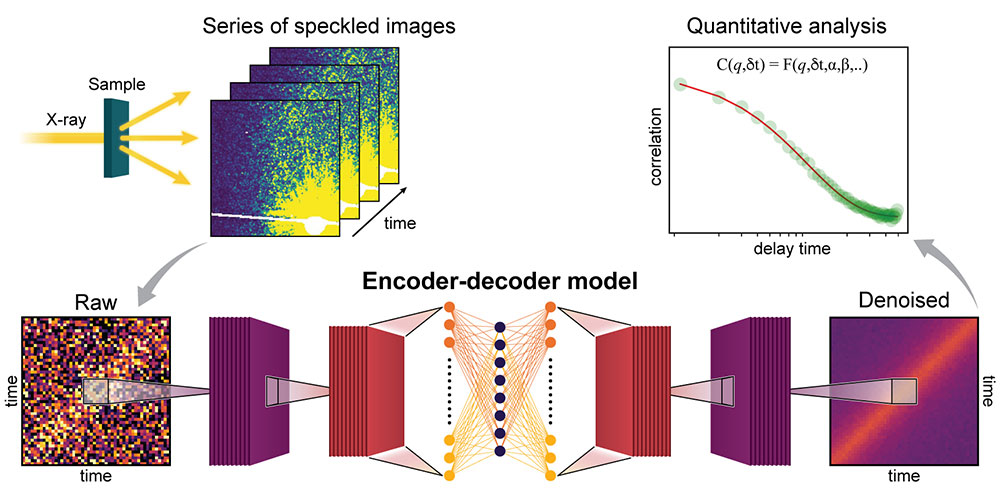

In XPCS, x-rays scatter off the sample and yield a speckle pattern. Researchers take many sequential images of the pattern and analyze them to find correlations between their intensities. These correlations yield information about processes within the sample that are time-dependent, such as how its structure might relax or reorganize. But when the images are noisy, this information is more difficult to extract.
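A conventional way to quantify these correlations, when the sample's dynamics do not change during the measurement, is the time-averaged intensity autocorrelation g2(τ) = <I(t) I(t+τ)> / <I>^2, computed pixel by pixel and then averaged over a region of the detector. The plain-Python sketch below assumes each frame arrives as a flat list of intensities for the same pixels; the function name and data layout are illustrative choices, not NSLS-II software.

```python
def g2_one_time(frames, max_lag):
    """Time-averaged intensity autocorrelation g2 for lags 1..max_lag.

    frames: list of frames, each a flat list of pixel intensities for the
    same region of interest (max_lag must be smaller than len(frames)).
    Each pixel's correlation is normalized by that pixel's squared mean
    intensity, then the values are averaged over pixels.
    """
    n_frames = len(frames)
    n_pix = len(frames[0])
    g2 = []
    for lag in range(1, max_lag + 1):
        per_pixel = []
        for p in range(n_pix):
            trace = [frame[p] for frame in frames]  # intensity vs. time for one pixel
            mean = sum(trace) / n_frames
            if mean == 0:
                continue  # skip pixels that never registered a photon
            n_pairs = n_frames - lag
            lagged = sum(trace[t] * trace[t + lag] for t in range(n_pairs)) / n_pairs
            per_pixel.append(lagged / mean ** 2)
        if not per_pixel:
            raise ValueError("all pixels in the region have zero mean intensity")
        g2.append(sum(per_pixel) / len(per_pixel))
    return g2


# Toy check: a pixel that blinks on and off every frame is perfectly
# anticorrelated at lag 1 and repeats itself exactly at lag 2.
blinking = [[2.0] if t % 2 == 0 else [0.0] for t in range(10)]
print(g2_one_time(blinking, 2))  # [0.0, 2.0]
```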

For this project, the team set out to create new methods and models using machine learning (ML), a type of artificial intelligence in which computer programs and systems learn to solve a problem from data and adapt as they receive new data. The project involves staff from two NSLS-II beamlines, Coherent X-ray Scattering (CSX) and Coherent Hard X-ray Scattering (CHX), as well as NSLS-II’s Data Science and Systems Integration (DSSI) program and Brookhaven’s CSI group.

“While instrumentation development and optimization of experimental protocols are crucial in noise reduction, there are situations where computational methods can advance the improvements even further,” said NSLS-II computational researcher Tatiana Konstantinova. She is the first author of the paper, which appeared in the July 20, 2021 online edition of Nature’s Scientific Reports.

Konstantinova and her colleagues want to create models that can be applied to a variety of XPCS experiments. They also want the models to be usable at different stages of a project, from data collection to comprehensive analysis of the final results. This project is an example of the kind of innovative problem-solving that can result from open and collaborative mindsets.

“Beamtime at facilities like NSLS-II is a finite resource. Therefore, aside from advances in experimental hardware, the only way to improve the scientific productivity overall is by working on generalizable and scalable solutions to extract meaningful data, as well as to help users be more confident in the results,” said NSLS-II beamline scientist Andi Barbour, a principal investigator for the project. “We want users to be able to spend more time thinking about science.”

A graphic depiction of the machine learning model, showing the series of XPCS images (top left), which are fed into the machine learning model (bottom), yielding the denoised data (top right) that are used for further analysis.

In XPCS analysis, data are represented mathematically by what is known as a two-time intensity-intensity correlation function. This function can describe any time-dependent behavior of the system, and its output is a data set: a two-dimensional map showing how strongly the scattered intensity at one moment correlates with the intensity at another. Here, these data were used as the input for the group’s ML model. From there, the team had to determine how the model would process the data. To make that decision, they looked to established computational approaches for removing noise. Specifically, they investigated approaches based on a subset of artificial neural networks known as “autoencoder” models. Autoencoders learn to compress data into a compact representation and reconstruct the data from it, and they can be modified to address noise by training them to reproduce noise-free targets from noisy input signals.
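To show what such an input map looks like, the sketch below computes a two-time correlation map using the standard definition C(t1, t2) = <I(t1) I(t2)> / (<I(t1)> <I(t2)>), where the averages run over the pixels of a region of interest assumed to share the same scattering vector q. It is a plain-Python illustration under that assumption, not the group's analysis code; a real pipeline would typically use vectorized array code.

```python
def two_time_correlation(frames):
    """Two-time correlation map C[t1][t2] for one region of interest.

    frames: list of frames, each a flat list of intensities for pixels that
    are assumed to share the same scattering vector q.
    C(t1, t2) = <I(t1) I(t2)> / (<I(t1)> <I(t2)>), averages taken over pixels.
    """
    n_frames = len(frames)
    n_pix = len(frames[0])
    means = [sum(frame) / n_pix for frame in frames]  # <I(t)> for each frame
    c = [[0.0] * n_frames for _ in range(n_frames)]
    for t1 in range(n_frames):
        for t2 in range(t1, n_frames):
            cross = sum(a * b for a, b in zip(frames[t1], frames[t2])) / n_pix
            c[t1][t2] = c[t2][t1] = cross / (means[t1] * means[t2])  # symmetric
    return c


# Toy speckle pattern whose two pixels swap intensities every frame.
frames = [[1.0, 3.0], [3.0, 1.0], [1.0, 3.0], [3.0, 1.0]]
for row in two_time_correlation(frames):
    print(row)  # first row: [1.25, 0.75, 1.25, 0.75]
```

For dynamics that do not change over time, the map depends only on t2 - t1, so lines parallel to the diagonal look uniform. Departures from that pattern are what make the two-time representation useful for samples whose behavior evolves, and noise in the underlying frames shows up as graininess across the whole map.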

The downside of many ML applications is the significant resources required to train, store, and apply models. Ideally, models are as simple as possible while still providing the desired functionality. This is especially true for scientific applications, where domain expertise is required to collect and select training examples.

The group trained their model using real experimental data collected at CHX. They used different samples, data acquisition rates, and temperatures, with each data run containing between 200 and 1,000 frames. They found that the selected architecture makes the models fast to train without an extensive amount of training data, and that applying them requires few computing resources. These advantages make it possible to tune the models to a specific experiment in several minutes using a laptop equipped with a graphics processing unit (GPU).
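To make the noisy-input, clean-target training idea concrete, here is a deliberately tiny linear denoising autoencoder in plain Python. Every detail is an assumption chosen for illustration: the four-value signal, the hypothetical pattern `U`, the noise level, the learning rate, and the single-unit bottleneck are not taken from the paper, and the team’s models are neural networks trained on real CHX data rather than this toy. What it does show is the core mechanism: the loss compares the model’s output with the clean target, not with the noisy input.

```python
import random

D = 4              # length of each (hypothetical) input vector
NOISE_SIGMA = 0.5  # hypothetical noise level
U = [0.5, 0.5, 0.5, 0.5]  # hypothetical clean pattern, unit length


def make_pair(rng):
    """Return one (noisy_input, clean_target) training example."""
    amplitude = rng.gauss(0.0, 1.0)
    clean = [amplitude * u for u in U]
    noisy = [c + rng.gauss(0.0, NOISE_SIGMA) for c in clean]
    return noisy, clean


def forward(w_enc, w_dec, y):
    """Encode y to one hidden value, then decode back to D values."""
    h = sum(w * v for w, v in zip(w_enc, y))
    return h, [w * h for w in w_dec]


def train(n_steps=6000, lr=0.05, seed=0):
    """Stochastic gradient descent on mean squared error vs. the CLEAN target."""
    rng = random.Random(seed)
    w_enc = [rng.uniform(-0.5, 0.5) for _ in range(D)]
    w_dec = [rng.uniform(-0.5, 0.5) for _ in range(D)]
    for _ in range(n_steps):
        y, x = make_pair(rng)
        h, out = forward(w_enc, w_dec, y)
        err = [o - t for o, t in zip(out, x)]
        g = 2.0 / D  # derivative factor of the mean squared error
        back = sum(w * e for w, e in zip(w_dec, err))  # proportional to dLoss/dh; uses w_dec before its update
        w_dec = [w - lr * g * e * h for w, e in zip(w_dec, err)]
        w_enc = [w - lr * g * back * v for w, v in zip(w_enc, y)]
    return w_enc, w_dec


def mse(a, b):
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


def evaluate(w_enc, w_dec, n=2000, seed=1):
    """Average error of the raw noisy input and of the denoised output vs. clean."""
    rng = random.Random(seed)
    raw = denoised = 0.0
    for _ in range(n):
        y, x = make_pair(rng)
        raw += mse(y, x)
        denoised += mse(forward(w_enc, w_dec, y)[1], x)
    return raw / n, denoised / n


w_enc, w_dec = train()
raw, denoised = evaluate(w_enc, w_dec)
print(f"noisy vs clean MSE: {raw:.3f}, denoised vs clean MSE: {denoised:.3f}")
```

Because the bottleneck has a single unit, the best this model can do is project each input onto the pattern and shrink it slightly, which discards the noise lying outside that pattern.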

From left, Lutz Wiegart, Maksim Rakitin, and Andi Barbour stand above the NSLS-II beamline floor.

“Our models can extract meaningful data from images that contain a high level of noise, which would otherwise require a lot of tedious work for researchers to process,” said Anthony DeGennaro, a computational scientist with CSI who is also a principal investigator for the project. “We think that they will be able to serve as plug-ins for autonomous experiments, such as by stopping measurements when enough data have been collected or by acting as input for other experimental models.”
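One way such a plug-in might decide that enough data have been collected is a convergence check: stop once an estimate computed from the data stops changing appreciably between batches of frames. The sketch below is hypothetical; the function name `should_stop`, the relative tolerance, the `patience` parameter, and the idea of tracking a single scalar estimate are assumptions for illustration, not a description of the team’s plans.

```python
def should_stop(history, rel_tol=0.01, patience=3):
    """Return True once the last `patience` batch-to-batch changes in a scalar
    estimate are each smaller than `rel_tol`, measured relative to the
    previous value. All names and defaults here are hypothetical."""
    if len(history) < patience + 1:
        return False  # not enough batches yet to judge convergence
    recent = history[-(patience + 1):]
    for prev, curr in zip(recent, recent[1:]):
        scale = abs(prev) if prev != 0 else 1.0  # avoid dividing by zero
        if abs(curr - prev) / scale >= rel_tol:
            return False
    return True


# Simulated estimate that settles toward 1.0 as more batches arrive.
history = []
for value in [1.0 + 0.5 ** n for n in range(1, 20)]:
    history.append(value)
    if should_stop(history):
        break
print(f"stopped after {len(history)} batches")  # stopped after 9 batches
```

Requiring several consecutive small changes, rather than one, guards against stopping on a single lucky batch.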

In ongoing and future work, the group will extend the model’s capabilities and integrate it into XPCS data analysis workflows at CHX and CSX. They are investigating how to use their denoising model to identify instrumental instabilities during measurements, as well as heterogeneities or other unusual dynamics in XPCS data that are inherent to the sample. Detecting anomalous observations, such as suspicious behavior in surveillance videos or credit card fraud, is another common application of autoencoder models, and the same capability can be applied to automated data collection or analysis.
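A common way autoencoders are used for anomaly detection is reconstruction-error thresholding: a model trained on normal data reconstructs normal inputs well, so inputs with unusually large reconstruction errors are flagged for inspection. In the sketch below, the stand-in `project_onto_pattern` function (a projection onto a hypothetical pattern `U`) takes the place of a trained autoencoder, and the mean-plus-three-standard-deviations cutoff is an assumed rule, not the team’s method.

```python
import random
import statistics

U = [0.5, 0.5, 0.5, 0.5]  # hypothetical "normal" pattern (unit length)


def project_onto_pattern(x):
    """Stand-in for a trained autoencoder: keep only the part of x along U."""
    coeff = sum(a * b for a, b in zip(x, U))
    return [coeff * u for u in U]


def flag_anomalies(inputs, reconstruct, k=3.0):
    """Return indices of inputs whose reconstruction error exceeds mean + k*stdev.

    Note that a large anomaly also inflates the standard deviation, so this
    simple rule can miss anomalies that occur in clusters.
    """
    errors = []
    for x in inputs:
        r = reconstruct(x)
        errors.append(sum((a - b) ** 2 for a, b in zip(x, r)) / len(x))
    threshold = statistics.mean(errors) + k * statistics.stdev(errors)
    return [i for i, e in enumerate(errors) if e > threshold]


rng = random.Random(42)
frames = []
for i in range(31):
    if i == 10:
        frames.append([1.0, -1.0, 1.0, -1.0])  # hypothetical glitch unlike U
    else:
        a = rng.gauss(0.0, 1.0)
        frames.append([a * u + rng.gauss(0.0, 0.05) for u in U])

print(flag_anomalies(frames, project_onto_pattern))  # [10]
```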

The complete research team also included DSSI computational scientist Maksim Rakitin and beamline scientist Lutz Wiegart, both co-authors of the paper. This research used Bluesky, a software library for experimental control and data collection developed largely at NSLS-II, as well as open-source Python libraries developed by the scientific community, including Jupyter and Dask.

Project Jupyter is a nonprofit, open-source project developed in the open on GitHub through the consensus of the Jupyter community. For more information, visit the Project Jupyter website.

Dask is a fiscally sponsored project of NumFOCUS, a nonprofit dedicated to supporting the open source scientific computing community.

Brookhaven National Laboratory is supported by the U.S. Department of Energy’s Office of Science. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit https://www.energy.gov/science/.
