Upgrades to ATLAS and LHC Magnets for Run 2 and Beyond

Brookhaven physicists play critical roles in LHC restart and plans for the future of particle physics

July 6, 2015

The ATLAS detector at the Large Hadron Collider, an experiment with extensive involvement from physicists at Brookhaven National Laboratory. Image credit: CERN

At the beginning of June, the Large Hadron Collider at CERN, the European research facility, began smashing together protons once again. The high-energy particle collisions taking place deep underground along the border between Switzerland and France are intended to allow physicists to probe the furthest edges of our knowledge of the universe and its tiniest building blocks.

The Large Hadron Collider returns to operations after a two-year offline period, Long Shutdown 1, which allowed thousands of physicists worldwide to undertake crucial upgrades to the already cutting-edge particle accelerator. The LHC now begins its second multi-year operating period, Run 2, which will take the collider through 2018 at collision energies nearly double those of Run 1, the energies that allowed researchers to detect the long-sought Higgs boson in 2012.

The U.S. Department of Energy’s Brookhaven National Laboratory is a crucial player in the physics program at the Large Hadron Collider, in particular as the U.S. host laboratory for the pivotal ATLAS experiment, one of the two large experiments that discovered the Higgs boson. Physicists at Brookhaven were busy throughout Long Shutdown 1, undertaking projects designed to maximize the LHC’s chances of detecting rare new physics as the collider reaches into a previously unexplored subatomic frontier.

While the technology needed to produce a new particle is a marvel in its own right, equally remarkable is everything the team at ATLAS and other experiments must do to detect these potentially world-changing discoveries. Because such particles are produced only rarely, it isn’t enough to be able to smash one proton into another. The LHC needs to collide bunches of protons, each containing about 100 billion particles, every 50 nanoseconds (shortening to every 25 nanoseconds during Run 2), and the experiments must be ready to sort through the colossal amounts of data that all those collisions produce.

Scientists at Brookhaven National Laboratory are making their mark on the Large Hadron Collider and its search for new physics by tackling three interwoven challenges: maximizing the number of collisions within the LHC, capturing the details of potentially noteworthy collisions, and managing the gargantuan amount of data those collisions produce. Their work targets not just the current Run 2 but also the long-term future operation of the collider.

Restarting the Large Hadron Collider

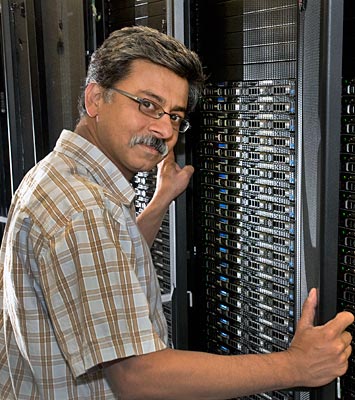

Brookhaven physicist Srini Rajagopalan, operation program manager for U.S. ATLAS, works to keep manageable the colossal amounts of data that are generated by the Large Hadron Collider and sent to Brookhaven's RHIC and ATLAS Computing Facility.

The Large Hadron Collider is the largest single machine in the world, so it’s tempting to think of its scale just in terms of its immense size. The twin beamlines of the particle accelerator sit about 300 to 600 feet underground in a circular tunnel more than 17 miles around. Over 1,600 magnets, each weighing more than 25 tons, are required to keep the beams of protons focused and on the correct paths, and nearly 100 tons of liquid helium is necessary to keep the magnets operating at temperatures barely above absolute zero. Then there are the detectors, each of which stands several stories high.

But the scale of the LHC extends not just in space, but in time as well. A machine of this size and complexity doesn’t just switch on or off with the push of a button, and even relatively simple maintenance can require weeks, if not months, to perform. That’s why the LHC recently completed Long Shutdown 1, a two-year offline period in which physicists undertook the necessary repairs and upgrades to get the collider ready for the next three years of near-continuous operation. As the U.S. host laboratory for the ATLAS experiment, Brookhaven National Laboratory was pivotal in upgrading and improving the ATLAS detector, one of the cornerstones of the LHC apparatus.

“After having run for three years, the detector needs to be serviced much like your car,” said Brookhaven physicist Srini Rajagopalan, operation program manager for U.S. ATLAS. “Gas leaks crop up that need to be fixed. Power supplies, electronic boards and several other components need to be repaired or replaced. Hence a significant amount of detector consolidation work occurs during the shutdown to ensure an optimal working detector when beam returns.”

Beyond these vital repairs, the major goal of the upgrade work during Long Shutdown 1 was to raise the LHC’s center-of-mass collision energy from the previous 8 trillion electron volts (TeV) to 13 TeV, close to the collider’s design maximum of 14 TeV.
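For two equal-energy beams colliding head-on, the center-of-mass energy is, to a very good approximation, the sum of the two beam energies, because the protons' rest mass is negligible at these energies. The per-beam values below (4, 6.5 and 7 TeV) are standard LHC figures and are not stated in this article.

```latex
\sqrt{s} \approx 2E_{\text{beam}}, \qquad
\underbrace{2 \times 4\ \text{TeV} = 8\ \text{TeV}}_{\text{Run 1 (2012)}}, \qquad
\underbrace{2 \times 6.5\ \text{TeV} = 13\ \text{TeV}}_{\text{Run 2}}, \qquad
\underbrace{2 \times 7\ \text{TeV} = 14\ \text{TeV}}_{\text{design}}, \qquad
\frac{13}{8} \approx 1.6
```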

“Upgrading the energy means you’re able to probe much higher mass ranges, and you have access to new particles that might be substantially heavier,” said Rajagopalan. “If you have a very heavy particle that cannot be produced, it doesn’t matter how much data you collect, you just cannot reach that. That’s why it was very important to go from 8 to 13 TeV. Doubling the energy allows us to access the new physics much more easily.”

As the LHC probes higher and higher energies, the phenomena that the researchers hope to observe will happen more and more rarely, meaning the particle beams need to create many more collisions than they did before. Beyond this increase in collision rates, or luminosity, however, the entire infrastructure of data collection and management has to evolve to deal with the vastly increased volume of information the LHC can now produce.

“Much of the software had to be evolved or rewritten,” said Rajagopalan, “from patches and fixes that are more or less routine software maintenance to implementing new algorithms and installing new complex data management systems capable of handling the higher luminosity and collision rates.”

Making More Powerful Magnets

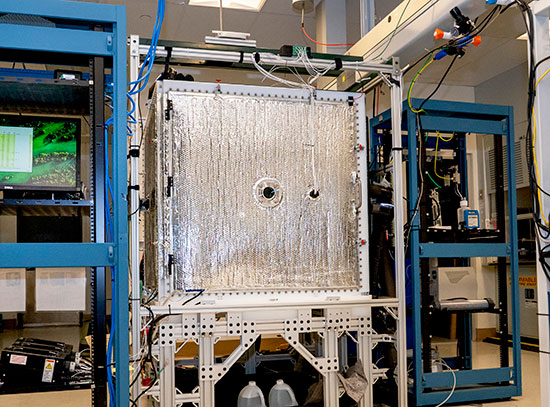

Brookhaven physicist Peter Wanderer, head of the laboratory's Superconducting Magnet Division, stands in front of the oven in which niobium tin is made into a superconductor.

The Large Hadron Collider works by accelerating twin beams of protons to speeds close to that of light. The two beams, traveling in opposite directions along the path of the collider, both contain many bunches of protons, with each bunch containing about 100 billion protons. When the bunches of protons meet, not all of the protons inside of them are going to interact and only a tiny fraction of the colliding bunches are likely to yield potentially interesting physics. As such, it’s absolutely vital to control those beams to maximize the chances of useful collisions occurring.

The best way to achieve that and the desired increase in luminosity—both during the current Run 2, and looking ahead to the long-term future of the LHC—is to tighten the focus of the beam. The more tightly packed protons are, the more likely they’ll smash into each other. This means working with the main tool that controls the beam inside the accelerator: the magnets.

“Most of the length of the circumference along a circular machine like the LHC is taken up with a regular sequence of magnets,” said Peter Wanderer, head of Brookhaven Lab’s Superconducting Magnet Division, which made some of the magnets for the current LHC configuration and is working on new designs for future upgrades. “The job of these magnets is to bend the proton beams around to the next point or region where you can do something useful with them, like produce collisions, without letting the beam get larger.”

A beam of protons is a collection of positively charged particles that all repel one another, so they tend to spread apart, he explained. Physicists therefore use magnetic fields to keep the particles from drifting away from the desired path.

“You insert different kinds of magnets, different sequences of magnets, in order to make the beams as small as possible, to get the most collisions possible when the beams collide,” Wanderer said.
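The link between beam size and collision rate can be made concrete with the textbook luminosity formula for two head-on Gaussian beams. Luminosity grows with the number of protons per bunch, the number of bunches and the revolution frequency, and it falls with the beam's cross-sectional area. The sketch below assumes LHC-like design parameters (1.15 × 10^11 protons per bunch, 2,808 bunches, an 11,245 Hz revolution frequency and a 16.7-micrometre beam size at the collision point) that do not appear in this article. It also omits the crossing-angle reduction, which is why the baseline comes out near 1.2 × 10^34 cm^-2 s^-1, slightly above the LHC design value of 10^34.

```python
import math


def luminosity(n_per_bunch: float, n_bunches: int, f_rev_hz: float,
               sigma_x_cm: float, sigma_y_cm: float) -> float:
    """Peak luminosity in cm^-2 s^-1 for head-on Gaussian bunches.

    Simplified textbook formula: ignores the crossing-angle
    reduction factor and the hourglass effect.
    """
    return (n_per_bunch ** 2 * n_bunches * f_rev_hz) / (
        4 * math.pi * sigma_x_cm * sigma_y_cm
    )


# Assumed LHC-like design parameters, for illustration only.
N = 1.15e11       # protons per bunch
NB = 2808         # bunches per beam
F_REV = 11245.0   # revolution frequency in Hz
SIGMA = 16.7e-4   # transverse beam size at the collision point: 16.7 um in cm

base = luminosity(N, NB, F_REV, SIGMA, SIGMA)
# Squeezing the beam to half its width in both directions.
squeezed = luminosity(N, NB, F_REV, SIGMA / 2, SIGMA / 2)

print(f"baseline:         {base:.2e} cm^-2 s^-1")
print(f"beam size halved: {squeezed:.2e} cm^-2 s^-1")
print(f"gain:             {squeezed / base:.1f}x")
```

Halving the beam width in both transverse directions quadruples the luminosity while the number of protons stays the same. This is why stronger focusing magnets translate directly into more collisions.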

The magnets currently in use in the LHC are made of the superconducting material niobium titanium (NbTi). When the electromagnets are cooled in liquid helium to temperatures of about 4 Kelvin (-452.5 degrees Fahrenheit), they lose all electrical resistance and can carry a much higher current density than a conventional conductor like copper. Because an electromagnet’s field grows with the density of the current in its windings, a superconductor can produce a much stronger field over a smaller radius than copper can.
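A simple way to see why current density matters is to use a long solenoid as an analogy. Ampère's law gives the field in the bore as B = μ0 · J · t, where J is the current density in the winding and t is the winding's thickness. LHC magnets use different coil shapes, so this is not their geometry. The current densities below (5 A/mm² for copper, 400 A/mm² for a superconducting coil) and the 15 mm winding thickness are illustrative assumptions, not figures from the article.

```python
import math

MU_0 = 4 * math.pi * 1e-7  # vacuum permeability in T*m/A


def thick_solenoid_field(current_density_a_per_mm2: float,
                         winding_thickness_m: float) -> float:
    """Field in tesla inside a long solenoid whose winding of the given
    thickness carries a uniform current density (Ampere's law: B = mu0*J*t)."""
    j_si = current_density_a_per_mm2 * 1e6  # convert A/mm^2 to A/m^2
    return MU_0 * j_si * winding_thickness_m


THICKNESS = 0.015  # assumed 15 mm of winding, same for both cases

copper = thick_solenoid_field(5.0, THICKNESS)            # assumed copper current density
superconductor = thick_solenoid_field(400.0, THICKNESS)  # assumed superconducting current density

print(f"copper:          {copper:.3f} T")
print(f"superconducting: {superconductor:.2f} T")
```

With the same amount of winding, the field scales directly with current density. That is the sense in which a superconductor delivers a much stronger field in a compact coil.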

But there’s an upper limit to how high a field the present niobium titanium superconductors can reach. So Wanderer and his team at Brookhaven have been part of a decade-long project to refine the next generation of superconducting magnets for a future upgrade to the LHC. These new magnets will be made from niobium tin (Nb3Sn).

“Niobium tin can go to higher fields than niobium titanium, which will give us even stronger focusing,” Wanderer said. “That will allow us to get a smaller beam, and even more collisions.” Niobium tin can also function at a slightly higher temperature, so the new magnets will be easier to cool than those currently in use.

There are a few catches. For one, niobium tin, unlike niobium titanium, isn’t superconducting to begin with. The raw materials are first made into wires and cables and wound into a coil, which is then heated for two days at 650 degrees Celsius (1,200 degrees Fahrenheit) to form the superconductor.

“And when niobium tin becomes a superconductor, then it’s very brittle, which makes it really challenging,” said Wanderer. “You need tooling that can withstand the heat for two days. It needs to be very precise, to within thousandths of an inch, and when you take it out of the tooling and want to put it into a magnet, and wrap it with iron, you have to handle it very carefully. All that adds a lot to the cost. So one of the things we’ve worked out over 10 years is how to do it right the first time, almost always.”

Fortunately, there’s still time to work out any remaining kinks. The new niobium tin magnets aren’t set to be installed at the LHC until around 2022, when the changeover from niobium titanium to niobium tin will be a crucial part of converting the Large Hadron Collider into the High-Luminosity Large Hadron Collider (HL-LHC).

Managing Data at Higher Luminosity

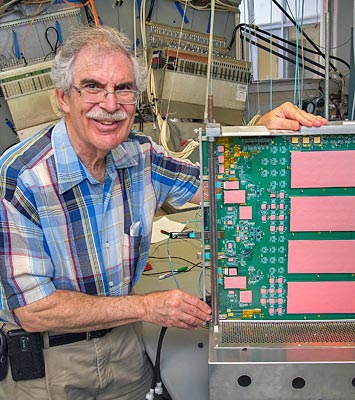

Brookhaven physicist Howard Gordon, a leader in the ATLAS physics program, was the recent recipient of the U.S. ATLAS Lifetime Achievement Award.

As the luminosity of the LHC increases in Run 2 and beyond, perhaps the biggest challenge facing the ATLAS team at Brookhaven lies in recognizing a potentially interesting physics event when it occurs. That selectivity is crucial, because even CERN’s worldwide computing grid—which includes about 170 global sites, and of which Brookhaven’s RHIC and ATLAS Computing Facility is a major center—can only record the tiniest fraction of over 100 million collisions that occur each second. That means it’s just as important to quickly recognize the millions of events that don’t need to be recorded as it is to recognize the handful that do.

“What you have to do is, on the fly, analyze each event and decide whether you want to save it to disk for later use or not,” said Rajagopalan. “And you have to be careful you don’t throw away good physics events. So you’re looking for signatures. If it’s a good signature, you say, ‘Save it!’ Otherwise, you junk it. That’s how you bring the data rate down to a manageable amount you can write to disk.”

Physicists screen out unwanted data using what’s known as a trigger system. The principle is simple: as the data from each collision comes in, it’s analyzed for a preset signature pattern, or trigger, that would mark it as potentially interesting.
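The keep-or-discard logic of a trigger can be sketched in a few lines: each trigger is a yes/no test on a summary of the event, and an event is saved only if at least one trigger fires. This is a hypothetical illustration. The `Event` fields, the trigger names and the thresholds are invented for the example. The real ATLAS system runs in several stages of dedicated hardware and software and looks at far richer information.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass
class Event:
    """Hypothetical summary of one collision, reduced to a few quantities."""
    event_id: int
    leading_muon_pt_gev: float
    leading_electron_pt_gev: float
    missing_et_gev: float


# A trigger is a yes/no decision on an event.
Trigger = Callable[[Event], bool]

# Hypothetical signatures and thresholds, chosen only for illustration.
TRIGGERS: Dict[str, Trigger] = {
    "single_muon": lambda e: e.leading_muon_pt_gev > 25.0,
    "single_electron": lambda e: e.leading_electron_pt_gev > 25.0,
    "missing_energy": lambda e: e.missing_et_gev > 100.0,
}


def select(events: Iterable[Event], triggers: Dict[str, Trigger]) -> List[Event]:
    """Keep an event if any trigger fires; everything else is discarded for good."""
    return [e for e in events if any(t(e) for t in triggers.values())]


events = [
    Event(1, 3.0, 1.5, 12.0),    # soft, typical collision: rejected
    Event(2, 41.0, 0.0, 20.0),   # energetic muon: kept
    Event(3, 0.0, 2.0, 150.0),   # large missing energy: kept
    Event(4, 10.0, 12.0, 30.0),  # below every threshold: rejected
]

kept = select(events, TRIGGERS)
print([e.event_id for e in kept])  # [2, 3]
```

Making the trigger "more sophisticated" in this picture means replacing the simple threshold functions with sharper tests. Any event that fails all of them is never written to disk.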

“We can change the trigger, or make the trigger more sophisticated to be more selective,” said Brookhaven’s Howard Gordon, a leader in the ATLAS physics program. “If we don’t select the right events, they are gone forever.”

The current trigger system can handle the luminosities of Run 2, but once future upgrades raise the luminosity further, it will no longer be able to reject enough collisions to keep the number of recorded events manageable. So the next generation of ATLAS triggers will have to be even more sophisticated in what they can instantly detect and reject.

A more difficult problem comes from the few dozen collisions in each bunch crossing that look like they might be interesting but aren’t.

“Not all protons in a bunch interact, but it’s not necessarily going to be only one proton in a bunch that interacts with a proton from the opposite bunch,” said Rajagopalan. “You could have 50 of them interact. So now you have 50 events on top of each other. Imagine the software challenge when just one of those is the real, new physics we’re interested in discovering, but you have all these 49 others—junk!—sitting on top of it.”

“We call it pileup!” Gordon quipped.

Finding one good event among 50 is tricky enough, but in 10 years that number will be closer to 1 in 150 or 200, with the debris from all those additional collisions overlapping in the detector and adding sharply to the complexity of the task. Recognizing instantly as many characteristics of the desired particles as possible will go a long way toward keeping the data manageable.
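The average amount of pileup can be estimated with simple arithmetic. Multiplying the luminosity by the inelastic proton-proton cross-section gives the number of collisions per second, and dividing by the number of bunch crossings per second gives the average number of collisions stacked in each crossing. The sketch below rests on several assumptions that are not in the article: an inelastic cross-section of about 80 millibarns near 13 TeV, an 11,245 Hz revolution frequency, 2,808 bunches at 10^34 cm^-2 s^-1 for Run 2, and 2,748 bunches at 5 × 10^34 and 7.5 × 10^34 cm^-2 s^-1 for the high-luminosity era.

```python
def mean_pileup(lumi_cm2_s: float, sigma_inel_cm2: float,
                n_bunches: int, f_rev_hz: float) -> float:
    """Average number of inelastic proton-proton collisions per bunch crossing."""
    collisions_per_second = lumi_cm2_s * sigma_inel_cm2
    crossings_per_second = n_bunches * f_rev_hz
    return collisions_per_second / crossings_per_second


MILLIBARN_CM2 = 1e-27
SIGMA_INEL = 80 * MILLIBARN_CM2  # assumed inelastic cross-section near 13 TeV
F_REV = 11245.0                  # assumed revolution frequency in Hz

run2 = mean_pileup(1e34, SIGMA_INEL, 2808, F_REV)
hl_nominal = mean_pileup(5e34, SIGMA_INEL, 2748, F_REV)
hl_ultimate = mean_pileup(7.5e34, SIGMA_INEL, 2748, F_REV)

print(f"collisions per second at 1e34: {1e34 * SIGMA_INEL:.1e}")
print(f"Run 2 pileup:       {run2:.0f}")
print(f"HL-LHC (5e34):      {hl_nominal:.0f}")
print(f"HL-LHC (7.5e34):    {hl_ultimate:.0f}")
```

Under these assumptions, Run 2 gives roughly 25 overlapping collisions per crossing, and the higher luminosities give about 130 and 190. The roughly 8 × 10^8 collisions per second at 10^34 is also consistent with the "over 100 million collisions" each second mentioned above.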

Further upgrades are planned over the next decade to cope with the ever-increasing luminosity and collision rates. For example, the Brookhaven team and collaborators will be working to develop an all-new silicon tracking system and a full replacement of the readout electronics with state-of-the-art technology that will allow physicists to collect and analyze ten times more data for LHC Run 4, scheduled for 2026.

The physicists at CERN, Brookhaven, and elsewhere have strong motivation for meeting these challenges. Doing so will not only offer the best chance of detecting rare physics events and expanding the frontiers of physics, but will also allow the physicists to do it within a reasonable timespan.

As Rajagopalan put it, “We are ready for the challenge. The next few years are going to be an exciting time as we push forward to explore a new uncharted energy frontier.”

Brookhaven’s role in the LHC is supported by the DOE Office of Science.

Brookhaven National Laboratory is supported by the Office of Science of the U.S. Department of Energy. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States, and is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.

2015-5764 | INT/EXT | Newsroom