Brookhaven Computing Facility Prepares for LHC Data

August 25, 2008

Even though the Large Hadron Collider (LHC) in Switzerland has yet to start up, Brookhaven scientists responsible for handling a chunk of the unprecedented data to be produced within the massive accelerator already have their hands dirty. Working on a daily basis with the Relativistic Heavy Ion Collider (RHIC), the almost 40 staff members at Brookhaven's RHIC and ATLAS Computing Facility (RACF) are no strangers to storing and distributing large amounts of data.

"The benefit of an integrated facility like this is the ability to move highly skilled and experienced IT experts from one project to the other, depending on what's needed at the moment," said RACF Director Michael Ernst. "There are many different flavors of physics computing, but the requirements for these two facilities are very similar to some extent. In both cases, the most important aspect is reliable and efficient storage."

As the sole Tier 1 computing facility in the United States for ATLAS - one of the four experiments at the LHC - Brookhaven provides a large portion of the overall computing resources for U.S. collaborators and serves as the central hub for storing, processing and distributing ATLAS experimental data among scientists across the country. This mission is possible, Ernst said, because of the Lab's ability to build upon and receive support from the Open Science Grid project, a national computational facility that allows researchers to share knowledge, data, and computer processing power in fields ranging from physics to biology.

Yet, even after ramping up to 8 petabytes of accessible online storage - a capacity ten times greater than what existed when ATLAS joined the RACF eight years ago - the computing center's scientists still have plenty of testing and problem-solving to conduct before the LHC begins operations this fall.

"You can't just put up a number of storage and computing boxes, turn them on, and have a stable operation at this scale," Ernst said. "Ramping up so quickly presents a number of issues because what worked yesterday isn't guaranteed to work today."

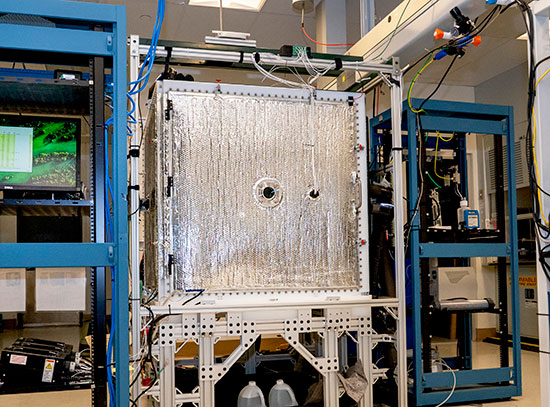

The RHIC and ATLAS Computing Facility.

In order to test their limitations and prepare for real data, the computing staff participates in numerous simulation exercises, spanning tasks from data extraction to actual analyses physicists might perform on their desktops. In a recent throughput test with all of the ATLAS Tier 1 centers, Brookhaven was able to receive data from CERN at a rate of 400 megabytes per second. At that speed, it would take just 20 seconds to upload music to fill an 8-gigabyte iPod.
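The iPod comparison is simple arithmetic, and a short sketch makes it easy to check. It assumes decimal units (1 megabyte = 10^6 bytes, 1 gigabyte = 10^9 bytes) and a perfectly sustained transfer rate. The function and variable names are illustrative only and are not part of any RACF software.

```python
# Assumption: decimal (SI) units, as is usual for network and storage figures.
MEGABYTE = 10**6
GIGABYTE = 10**9


def transfer_seconds(size_bytes: float, rate_bytes_per_second: float) -> float:
    """Return the time needed to move size_bytes at a constant rate."""
    return size_bytes / rate_bytes_per_second


# Figures quoted in the article: 400 megabytes per second, 8-gigabyte iPod.
rate = 400 * MEGABYTE
ipod_capacity = 8 * GIGABYTE

seconds = transfer_seconds(ipod_capacity, rate)
print(f"Filling the iPod would take about {seconds:.0f} seconds")
```

Under those assumptions the result matches the 20 seconds quoted above. A real transfer would rarely hold its peak rate for the whole duration.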

In the future - as ATLAS and RHIC undergo upgrades to increase luminosity, events become more complex, and data is archived - Brookhaven plans to build a new facility to house, power, and cool the 2,500 machines currently in use, which must be replaced with newer models about every three years. This constant maintenance cycle, combined with unexpected challenges from data-taking and data analyses, is sure to keep Brookhaven's Tier 1 center busy for years to come, Ernst said.

"This is all new ground," he said. "You work with people from around the world to find a path that carries you for two years or so. Then, the software and hardware changes and you have to throw everything away and start again. This is extremely difficult, but it's also one of the parts I enjoy most."

A Closer Look at the ATLAS Grid

The ATLAS grid computing system is a large and complicated endeavor that will allow researchers and students around the world to analyze ATLAS data.

The beauty of the grid is that a wealth of computing resources is available to a scientist for an analysis, even if those resources are not physically nearby. The data, software, and storage may be located hundreds or thousands of miles away, but the grid makes this invisible to the researcher.

Organizationally, the grid is set up in a tier system, with CERN, home of the Large Hadron Collider, at the very top as Tier 0. Tier 0 will receive the raw data from the ATLAS detector, perform a first-pass analysis, and then divide the data among five Tier 1 locations, also known as regional centers, including Brookhaven. At the Tier 1 level, which will connect to Tier 0 via the Internet, some of the raw data will be stored, processed, and analyzed. Each Tier 1 facility will then connect to five Tier 2 locations that will provide some data storage, as well as computers and disk space for more in-depth user analysis and simulation. Thus, each tier is basically a computing center consisting of a cluster of computers and storage.
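The tier layout described above can be pictured as a small tree. The sketch below is purely illustrative: it takes the counts from the text (one Tier 0, five Tier 1 centers, five Tier 2 locations per Tier 1). Every site name other than CERN and Brookhaven is a made-up placeholder, and Brookhaven's position in the list is arbitrary. It does not represent any actual grid software.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Site:
    """One computing center in the tier hierarchy."""
    name: str
    tier: int
    children: list[Site] = field(default_factory=list)


def build_grid(n_tier1: int = 5, n_tier2_per_tier1: int = 5) -> Site:
    """Build the tier tree using the counts given in the article."""
    root = Site("CERN", tier=0)
    for i in range(n_tier1):
        # Only Brookhaven is named in the article; other names are placeholders.
        name = "Brookhaven" if i == 0 else f"Tier1-{i + 1}"
        tier1 = Site(name, tier=1)
        for j in range(n_tier2_per_tier1):
            tier1.children.append(Site(f"{name}/Tier2-{j + 1}", tier=2))
        root.children.append(tier1)
    return root


def count_by_tier(site: Site, counts: dict[int, int] | None = None) -> dict[int, int]:
    """Walk the tree and count how many sites sit at each tier."""
    if counts is None:
        counts = {}
    counts[site.tier] = counts.get(site.tier, 0) + 1
    for child in site.children:
        count_by_tier(child, counts)
    return counts


grid = build_grid()
counts = count_by_tier(grid)
for tier, n in sorted(counts.items()):
    print(f"Tier {tier}: {n} site(s)")
```

With the numbers in the text, this arrangement implies 25 Tier 2 locations in total beneath the five Tier 1 centers.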

The grid computing infrastructure is made up of several key components. The "fabric" consists of the hardware elements - computing centers, disk storage, tape storage, and networking. The "applications" are the software programs that users would employ, for example, to analyze data. Applications take the raw data from ATLAS and reconstruct it into meaningful information that scientists can interpret. Another type of software, called "middleware," links the fabric elements together so that they form a unified system - the grid. The development of the middleware is a joint effort between physicists and computer scientists.

Outside of high-energy physics, grid computing is used on smaller scales to manage data within other scientific areas such as astronomy, biology, and geology. But the LHC grid is the largest of its kind.

Funding for middleware development is provided by the National Science Foundation's (NSF) Information Technology Research program and by the U.S. Department of Energy (DOE). DOE also funds the Tier 1 center activities, while the Tier 2 centers are funded by the NSF.

2008-856 | INT/EXT | Newsroom