Computation and Data-Driven Discovery (C3D) Projects

Supporting Heterogeneous Task Placement for High-performance Scientific Workflows at Scale

One C3D goal is to improve the efficiency of executing scientific workflows at scale in the coming “exascale era.” Because scientific workflows are generally executed as sets of concurrent tasks, one important research area is dynamic task placement at runtime for high-performance computing (HPC) and the evaluation of its performance at scale in HPC environments (e.g., prevailing heterogeneous architectures). The focus is on intelligent online decision-making for task placement and execution that improves performance metrics such as scalability, the overhead introduced by enabling tools (RADICAL Cybertools, i.e., RCT), and resource utilization in production runs on leadership-class computing facilities (e.g., the Summit supercomputer at Oak Ridge National Laboratory).

This research strengthens RCT’s ability to support distributed computing efficiently on various supercomputing platforms, taking into account the different types of heterogeneity found in domain-specific scientific workflows. Because heterogeneous computing is the norm in HPC with big data and diverse workloads, intelligent heterogeneous task management will help HPC scientific computation reach the forthcoming exascale.

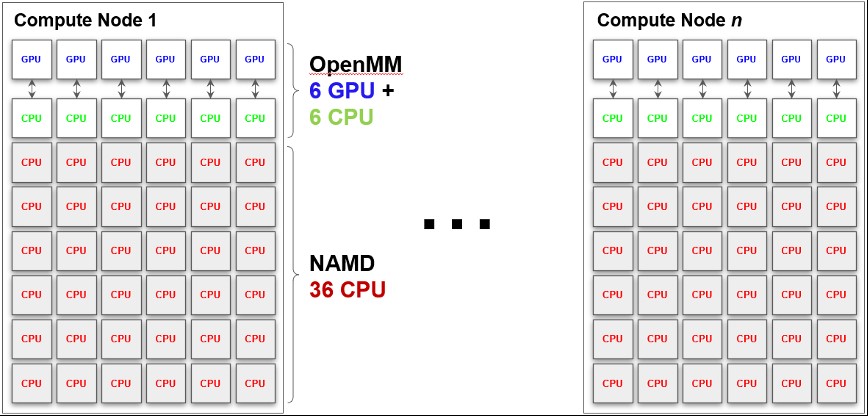

Figure 1. Task placement upon space heterogeneity: The ESMACS workflow consists of OpenMM tasks that run on GPUs. The TIES workflow uses NAMD tasks that run on CPUs. A hybrid workflow combines ESMACS and TIES, concurrently using the CPUs and GPUs of the same Summit node. This increases node utilization significantly.

Scientific workflows commonly comprise heterogeneous workloads that must run on heterogeneous components of HPC facilities, such as GPUs and CPUs. Executing heterogeneous workloads efficiently is critical for optimal performance and resource utilization. We have implemented such a capability in RCT: task placement upon space heterogeneity of computing resources. Using two representative COVID-19 drug workflows, ESMACS and TIES, as an example, we describe how heterogeneous task placement is achieved on nodes with space heterogeneity.

Based on the task launch method, the pilot job manager of RCT, RADICAL-Pilot (RP), places tasks on specific compute nodes, CPU cores, and GPUs. This placement allows tasks to be scheduled efficiently on heterogeneous resources. When scheduling tasks that require different numbers of cores and/or GPUs, RP keeps track of the available slots on each compute node of its pilot. Depending on availability, RP schedules CPU tasks (e.g., Message Passing Interface [MPI] tasks) within and across compute nodes and reserves a CPU core and a GPU for each GPU task. These RP capabilities are used to execute the ESMACS and TIES workflows concurrently on GPUs and CPUs, respectively, reducing time to execution (TTX) and improving resource utilization at scale. ESMACS and TIES are molecular dynamics (MD)-based protocols for computing binding free energies, and both involve multiple stages of equilibration and MD simulations of protein-ligand complexes. Specifically, ESMACS uses the OpenMM MD engine on GPUs, while TIES uses the NAMD code on CPUs. Leveraging RP's task placement capabilities upon space heterogeneity of computing resources, we merge these two workflows into an integrated hybrid workflow with heterogeneous tasks that use CPUs and GPUs concurrently.
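The slot bookkeeping described above can be illustrated with a minimal sketch. This is a toy first-fit model, not RP's actual scheduler or API: the names `Node`, `Task`, and `place` are hypothetical, and the sketch only places a task within a single node, whereas RP can also spread MPI tasks across nodes.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """Free slots on one compute node (hypothetical model, not an RP class)."""
    name: str
    free_cores: int
    free_gpus: int


@dataclass(frozen=True)
class Task:
    """Resource request of one task: CPU cores and, optionally, GPUs."""
    name: str
    cores: int
    gpus: int = 0


def place(task, nodes):
    """First-fit placement: claim slots on the first node that can hold the task.

    Returns the node name, or None if no node currently has enough free slots.
    """
    for node in nodes:
        if node.free_cores >= task.cores and node.free_gpus >= task.gpus:
            node.free_cores -= task.cores
            node.free_gpus -= task.gpus
            return node.name
    return None


# One node with 42 cores and 6 GPUs, the per-node figures quoted for Summit.
node = Node("node0", free_cores=42, free_gpus=6)

# Six GPU tasks (one core and one GPU each) plus one 36-core CPU task.
tasks = [Task(f"openmm.{i}", cores=1, gpus=1) for i in range(6)]
tasks.append(Task("namd.0", cores=36))

placements = {t.name: place(t, [node]) for t in tasks}
```

In this toy model, the six GPU tasks and the single CPU task together consume every core and GPU of the node, so any further request is refused until slots are released.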

Using compute nodes on Summit as an illustration, Figure 1 details how the heterogeneous tasks in the integrated hybrid workflow are placed in practice. Schematically, OpenMM simulations are tasks placed on GPUs, while NAMD simulations are multi-core MPI tasks on CPUs. Given that one compute node on Summit has six GPUs and 42 CPU cores, we can run six OpenMM tasks in parallel, each of which needs one GPU and one CPU core. For optimal resource utilization, we assign the remaining 36 CPU cores on the node to one NAMD task with 36 MPI ranks. NAMD tasks run on CPUs concurrently with the OpenMM tasks running on GPUs, which provides heterogeneous parallelism. To achieve optimal processor utilization, CPU and GPU computations must overlap as much as possible. We maximize this overlap by executing CPU and GPU tasks concurrently at runtime and by dynamically analyzing task execution times, which lets us set an appropriate ratio between concurrent CPU and GPU tasks, given that the TTX of CPU and GPU tasks differs because of their different computational complexity.
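The per-node arithmetic and the idea of balancing CPU and GPU work can be sketched as follows. This is an illustrative calculation under stated assumptions, not the analysis used in RCT: the function names are hypothetical, task runtimes are assumed to be known averages, and the example runtimes of 30 and 60 minutes are made-up values rather than measurements.

```python
CORES_PER_NODE = 42  # CPU cores per Summit node, as quoted in the text
GPUS_PER_NODE = 6    # GPUs per Summit node, as quoted in the text


def node_layout(cores_per_gpu_task=1):
    """Return (concurrent GPU tasks, cores left over for CPU tasks) on one node."""
    gpu_tasks = GPUS_PER_NODE
    cores_left = CORES_PER_NODE - gpu_tasks * cores_per_gpu_task
    return gpu_tasks, cores_left


def balanced_ratio(t_gpu, t_cpu, gpu_slots, cpu_slots):
    """GPU tasks to submit per CPU task so both resource types stay busy equally long.

    GPU side is busy for n_gpu * t_gpu / gpu_slots and CPU side for
    n_cpu * t_cpu / cpu_slots; setting them equal gives n_gpu / n_cpu.
    """
    return (gpu_slots * t_cpu) / (cpu_slots * t_gpu)


gpu_tasks, cores_left = node_layout()

# Hypothetical mean runtimes in minutes: a GPU task takes 30, a CPU task takes 60.
ratio = balanced_ratio(t_gpu=30.0, t_cpu=60.0, gpu_slots=gpu_tasks, cpu_slots=1)
```

With these assumed runtimes, six GPU slots and one 36-core CPU slot per node, about twelve GPU tasks would be needed per CPU task for the two sides to finish together. In practice the runtimes would come from measurements taken during execution.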