Computation and Data-Driven Discovery (C3D)

Data-Intensive Research Programs

Mission Statement

CSI’s Computation and Data-Driven Discovery (C3D) Division addresses the challenges and requirements of science and engineering applications that demand large-scale, innovative computing solutions.

About C3D

C3D is CSI’s gateway for expertise in high-performance workflows, distributed computing technologies, and FAIR (Findable, Accessible, Interoperable, and Reusable) data. C3D integrates high-performance computing, machine learning, and streaming analytics and translates them into reproducible science and scientific discovery. The division conducts research, develops solutions, and provides expertise in workflows, scalable software, and analytics for science and engineering applications that demand large-scale, innovative solutions. C3D specializes in distributed computing research, software systems that support streaming and real-time analytics, and reproducibility. The team’s work includes advanced research into extreme-scale and extensible workflow systems, transparent and reproducible artificial intelligence systems for science, and real-time data analytics with a focus on explainability. Contact any C3D member for more information and collaboration opportunities.


Group Projects

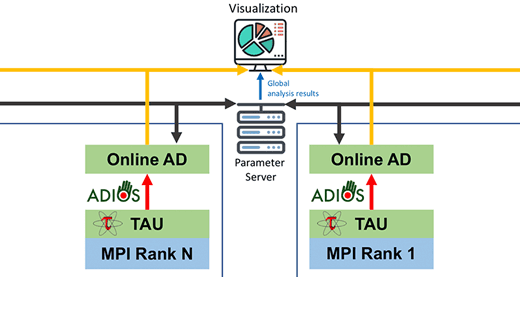

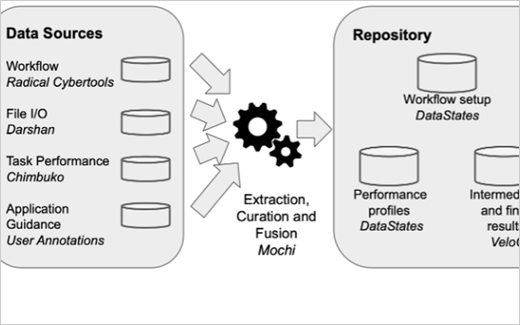

CSI’s role in this project is the development of Chimbuko, the first online performance analysis tool for exascale applications and workflows. The Chimbuko framework captures, analyzes, and visualizes performance metrics for complex scientific workflows and relates these metrics to the context of their execution (provenance) on extreme-scale machines.

ExaWorks brings together a multi-lab team within the DOE HPC software ecosystem to promote the integration of existing workflow middleware tools and to foster innovation across their combined boundaries. The ExaWorks team at Brookhaven Lab contributes to the Portable Submission Interface for Jobs (PSI/J) and the ExaWorks Software Development Kit (SDK).

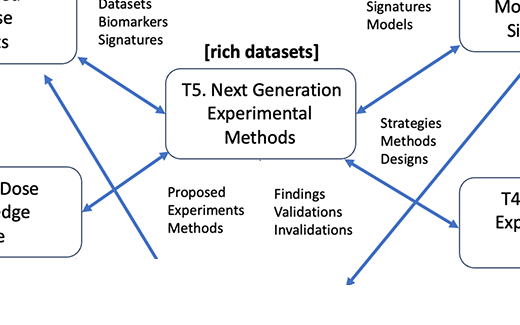

This project builds on advances in artificial intelligence, high-throughput experimental technologies, and multiscale modeling and simulation to advance scientific understanding of the molecular and cellular processes involved in low-dose radiation exposure and cancer risk, accelerate discovery, and connect insights across scales.

Understanding the impact of low doses of radiation on biological systems presents an intricate challenge. There are promising signs that this problem is amenable to investigation via artificial intelligence and machine learning (AI/ML). RadBio’s primary aim is to assess the potential of AI/ML in advancing our understanding of low-dose radiation biology.

This project is an important first step in making high-performance computing and AI-enabled workflow applications easily reproducible. Reproducibility, a core tenet of scientific discovery, will support the widespread use of AI applications and workflows at scale in the broader science community and build trust in their results.

The Center for Sustaining Workflows and Application Services (SWAS) brings together academia, national laboratories, and industry to create a sustainable software ecosystem supporting the myriad software and services used in workflows, as well as the workflow orchestration software itself.