Machine Learning Group

Real-time Information Distillation on Novel AI Hardware (NPP/CDS)

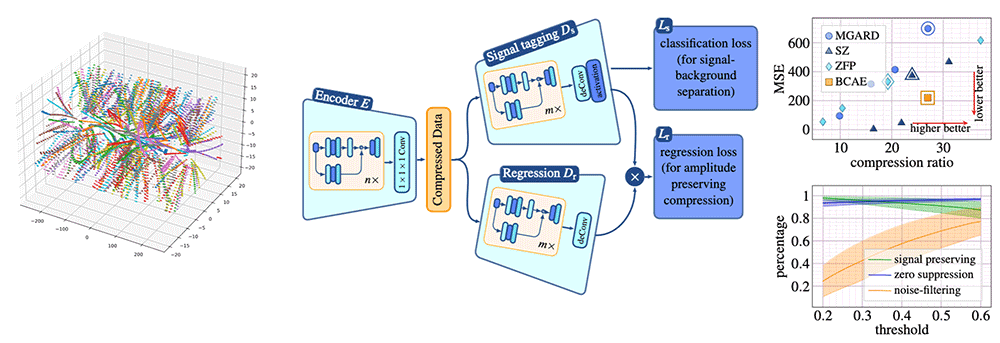

Modern large-scale nuclear physics (NP) experiments at high-energy particle colliders use streaming-readout electronics to acquire data at O(10) Tbps bandwidth. Prominent examples include the sPHENIX experiment at the Relativistic Heavy Ion Collider (RHIC) and the experiments of the Electron-Ion Collider (EIC) hosted at Brookhaven National Laboratory. One of the main challenges for these streaming-readout systems is reducing the incoming data stream in real time by enough that the reduced data fit on persistent storage for later processing and analysis. A typical aim is to reduce the data rate by a factor of O(100), from O(10) Tbps down to O(100) Gbps. Traditionally, such reduction is achieved by triggering: selecting specific events and recording only a small subset of collisions of interest. Although triggering is applicable to high-energy collider experiments, it is insufficient for the next-generation NP experiments that study diverse collision topologies.
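The reduction target above can be checked with a few lines of arithmetic. The sketch below takes 10 Tbps and 100 Gbps as representative values for the O(10) Tbps and O(100) Gbps figures and, as a simplifying assumption not stated in the text, supposes continuous running for a full day. The function names are illustrative only.

```python
# Back-of-the-envelope check of the data-reduction target.
# Assumptions: 10 Tbps and 100 Gbps stand in for the order-of-magnitude
# figures in the text; 24 hours of continuous running is hypothetical.

TBPS = 10**12  # bits per second
GBPS = 10**9   # bits per second
SECONDS_PER_DAY = 86_400


def reduction_factor(input_bps, output_bps):
    """Ratio of the incoming data rate to the rate written to storage."""
    return input_bps / output_bps


def petabytes_per_day(rate_bps):
    """Data volume in petabytes (10**15 bytes) for one day at rate_bps."""
    return rate_bps * SECONDS_PER_DAY / 8 / 10**15


raw_rate = 10 * TBPS
stored_rate = 100 * GBPS

print(reduction_factor(raw_rate, stored_rate))  # 100.0
print(petabytes_per_day(raw_rate))              # about 108 PB per day
print(petabytes_per_day(stored_rate))           # about 1.08 PB per day
```

Under these assumptions, the unreduced stream would amount to roughly 108 PB per day, while the reduced stream is close to 1 PB per day.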

This challenge creates both an opportunity and a need for an artificial intelligence (AI)-directed information distillation algorithm that performs computational real-time data reduction, including reliable noise filtering, feature extraction, lossy compression, and more. Compared to established data reduction systems that use online reconstruction, an AI-directed algorithm has the potential for higher throughput at lower cost, faster development cycles via machine learning, minimal variation in processing latency, and optimization supported by hardware innovators. Despite the distinct signal and background features of different detectors and experiments, the various input data streams can be formulated as sparse-encoded three-dimensional (3D) tensors of time frames from the streaming data acquisition (DAQ). Such sparse data are a challenge for traditional lossy compression algorithms. An AI algorithm can adapt to the data and achieve a better compression ratio and a lower error rate. It can also run on GPUs and other AI inference hardware to achieve much higher throughput.
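To make the "sparse-encoded 3D tensor" formulation concrete, the following minimal sketch stores a mostly empty 3D time frame as a list of coordinates and values. It illustrates only the data format, using simple lossless zero suppression; it is not the AI compression algorithm described above. The frame shape, the 10% occupancy, and the 10-bit value range are hypothetical choices made for illustration.

```python
import random


def make_toy_frame(shape, occupancy, seed=0):
    """Build a toy 3D frame (nested lists) that is mostly zeros.

    shape, occupancy, and the 10-bit value range are illustrative
    assumptions, not parameters of any real detector.
    """
    rng = random.Random(seed)
    d0, d1, d2 = shape
    return [
        [
            [rng.randint(1, 1023) if rng.random() < occupancy else 0
             for _ in range(d2)]
            for _ in range(d1)
        ]
        for _ in range(d0)
    ]


def sparse_encode(frame, threshold=0):
    """Keep only voxels above threshold as (coordinates, values)."""
    coords, values = [], []
    for i, plane in enumerate(frame):
        for j, row in enumerate(plane):
            for k, v in enumerate(row):
                if v > threshold:
                    coords.append((i, j, k))
                    values.append(v)
    return coords, values


def sparse_decode(coords, values, shape):
    """Rebuild the dense frame from its sparse encoding."""
    d0, d1, d2 = shape
    frame = [[[0] * d2 for _ in range(d1)] for _ in range(d0)]
    for (i, j, k), v in zip(coords, values):
        frame[i][j][k] = v
    return frame


shape = (4, 8, 16)
frame = make_toy_frame(shape, occupancy=0.1)
coords, values = sparse_encode(frame)

n_voxels = shape[0] * shape[1] * shape[2]
print("occupied fraction:", len(values) / n_voxels)
print("round trip exact:", sparse_decode(coords, values, shape) == frame)
```

With a threshold of zero the round trip is exact. Raising the threshold would discard small values as a crude form of noise filtering, which makes the encoding lossy.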

Publication

Y. Huang, Y. Ren, S. Yoo and J. Huang, “Efficient Data Compression for 3D Sparse TPC via Bicephalous Convolutional Autoencoder,” 2021 20th IEEE International Conference on Machine Learning and Applications (ICMLA), Pasadena, CA, USA, 2021, pp. 1094-1099, doi: 10.1109/ICMLA52953.2021.00179.


Yihui (Ray) Ren

Research Staff 5, Computational & AI Codesign Group Lead

(631) 344-4638, yren@bnl.gov


Yeonju Go

(631) 344-4516, ygo@bnl.gov
