New Calculation Refines Comparison of Matter with Antimatter

Theorists publish improved prediction for the tiny difference in kaon decays observed by experiments

September 17, 2020

A new calculation performed using the world's fastest supercomputers allows scientists to more accurately predict the likelihood of two kaon decay pathways, and compare those predictions with experimental measurements. The comparison tests for tiny differences between matter and antimatter that could, with even more computing power and other refinements, point to physics phenomena not explained by the Standard Model.

UPTON, NY - An international collaboration of theoretical physicists, including scientists from the U.S. Department of Energy’s (DOE) Brookhaven National Laboratory (BNL) and the RIKEN-BNL Research Center (RBRC), has published a new calculation relevant to the search for an explanation of the predominance of matter over antimatter in our universe. The collaboration, known as RBC-UKQCD, also includes scientists from CERN (the European particle physics laboratory), Columbia University, the University of Connecticut, the University of Edinburgh, the Massachusetts Institute of Technology, the University of Regensburg, and the University of Southampton. They describe their result in a paper that will be published in the journal Physical Review D and that has been highlighted as an “editor’s suggestion.”

Scientists first observed a slight difference in the behavior of matter and antimatter, known as a violation of “CP symmetry,” while studying the decays of subatomic particles called kaons in a Nobel Prize-winning experiment at Brookhaven Lab in 1963. While the Standard Model of particle physics was pieced together soon after that, understanding whether the observed CP violation in kaon decays agreed with the Standard Model has proved elusive due to the complexity of the required calculations.

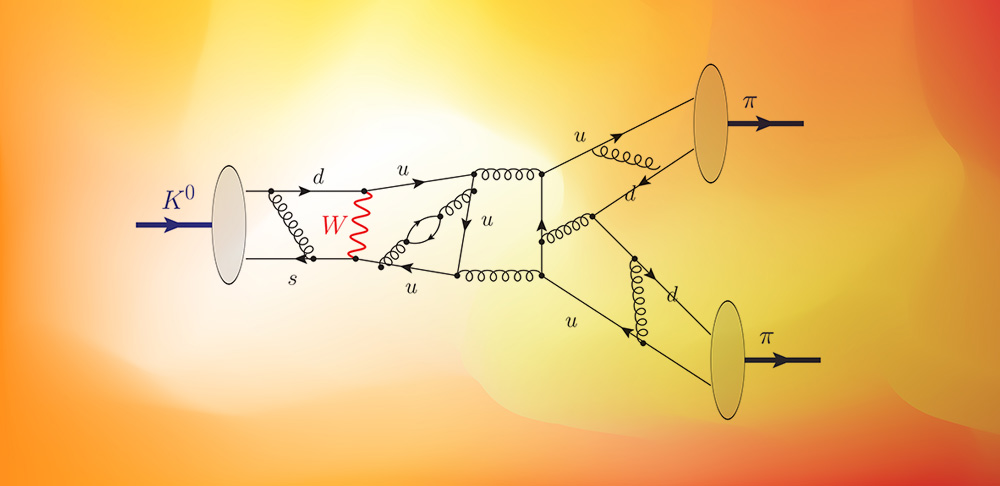

The new calculation gives a more accurate prediction for the likelihood with which kaons decay into a pair of electrically charged pions vs. a pair of neutral pions. Understanding these decays and comparing the prediction with more recent state-of-the-art experimental measurements made at CERN and DOE’s Fermi National Accelerator Laboratory gives scientists a way to test for tiny differences between matter and antimatter, and search for effects that cannot be explained by the Standard Model.

The new calculation represents a significant improvement over the group’s previous result, published in Physical Review Letters in 2015. Based on the Standard Model, it gives a range of values for what is called “direct CP symmetry violation” in kaon decays that is consistent with the experimentally measured results. That means the observed CP violation is now, to the best of our knowledge, explained by the Standard Model. The uncertainty in the prediction still needs to be reduced, however, because a more precise prediction could yet reveal sources of matter/antimatter asymmetry lying beyond the current theory’s description of our world.
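For readers who want to see how "direct CP symmetry violation" relates to the two decay pathways, the standard textbook parameterization can be sketched as follows. The symbols used here (the amplitude ratios and the parameters for indirect and direct CP violation) are conventional notation and do not appear in this article; the relations are leading-order approximations, not the collaboration's own formulas.

```latex
% Amplitude ratios for long-lived (K_L) and short-lived (K_S) kaons
% decaying to charged and neutral pion pairs (conventional notation):
\eta_{+-} = \frac{A(K_L \to \pi^+\pi^-)}{A(K_S \to \pi^+\pi^-)} \approx \varepsilon + \varepsilon',
\qquad
\eta_{00} = \frac{A(K_L \to \pi^0\pi^0)}{A(K_S \to \pi^0\pi^0)} \approx \varepsilon - 2\varepsilon'

% To first order in the small ratio \varepsilon'/\varepsilon, comparing the
% two pathways isolates the direct CP-violation parameter:
\left| \frac{\eta_{00}}{\eta_{+-}} \right|^2 \approx 1 - 6\,\mathrm{Re}\!\left(\frac{\varepsilon'}{\varepsilon}\right)
```

In this notation, a measurable difference between the charged-pion and neutral-pion ratios signals direct CP violation, which is why the relative likelihood of the two pathways is the quantity to predict.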

“An even more accurate theoretical calculation of the Standard Model may yet lie outside of the experimentally measured range. It is therefore of great importance that we continue our progress, and refine our calculations, so that we can provide an even stronger test of our fundamental understanding,” said Brookhaven Lab theorist Amarjit Soni.

Matter/antimatter imbalance

“The need for a difference between matter and antimatter is built into the modern theory of the cosmos,” said Norman Christ of Columbia University. “Our current understanding is that the present universe was created with nearly equal amounts of matter and antimatter. Except for the tiny effects being studied here, matter and antimatter should be identical in every way, beyond conventional choices such as assigning negative charge to one particle and positive charge to its anti-particle. Some difference in how these two types of particles operate must have tipped the balance to favor matter over antimatter,” he said.

“Any differences in matter and antimatter that have been observed to date are far too weak to explain the predominance of matter found in our current universe,” he continued. “Finding a significant discrepancy between an experimental observation and predictions based on the Standard Model would potentially point the way to new mechanisms of particle interactions that lie beyond our current understanding—and which we hope to find to help to explain this imbalance.”

Modeling quark interactions

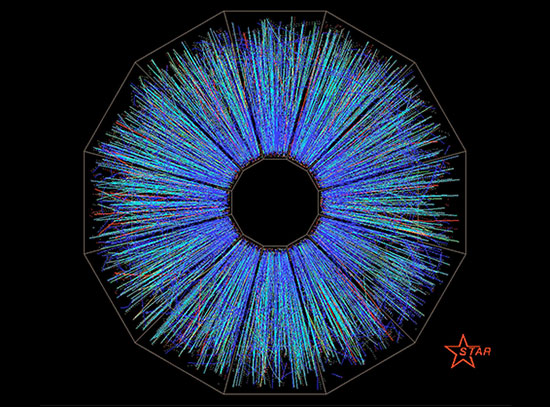

All of the experiments that show a difference between matter and antimatter involve particles made of quarks, the subatomic building blocks that bind through the strong force to form protons, neutrons, and atomic nuclei—and also less-familiar particles like kaons and pions.

“Each kaon and pion is made of a quark and an antiquark, surrounded by a cloud of virtual quark-antiquark pairs, and bound together by force carriers called gluons,” explained Christopher Kelly, of Brookhaven National Laboratory.

The Standard Model-based calculations of how these particles behave must therefore include all the possible interactions of the quarks and gluons, as described by the modern theory of strong interactions, known as quantum chromodynamics (QCD).

In addition, these bound particles move at close to the speed of light. That means the calculations must also include the principles of relativity and quantum theory, which govern such near-light-speed particle interactions.

“Because of the huge number of variables involved, these are some of the most complicated calculations in all of physics,” noted Tianle Wang, of Columbia University.

Computational challenge

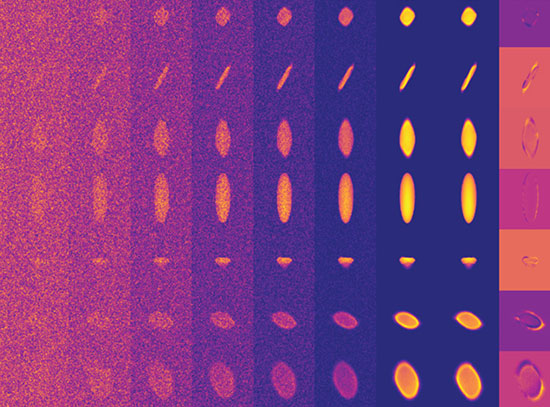

To conquer the challenge, the theorists used a computing approach called lattice QCD, which “places” the particles on a four-dimensional space-time lattice (three spatial dimensions plus time). This box-like lattice allows them to map out all the possible quantum paths for the initial kaon to decay to the final two pions. The result becomes more accurate as the number of lattice points increases. Wang noted that the “Feynman integral” for the calculation reported here involved integrating 67 million variables!
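The idea of evaluating a quantum "sum over paths" on a lattice can be sketched with a far simpler system than QCD. The toy below is not the collaboration's code or method: it assumes a single particle in a harmonic potential on a one-dimensional time lattice and uses the Metropolis algorithm to sample paths. All names and parameter values (`estimate_x_squared`, `n_sites`, the lattice spacing `a`) are hypothetical choices made for illustration. Here the integral runs over 32 variables, one per lattice site, rather than 67 million.

```python
import math
import random


def local_action(x, i, a, m=1.0, omega=1.0):
    """Terms of the Euclidean lattice action that involve site i (periodic lattice)."""
    n = len(x)
    left, right = x[(i - 1) % n], x[(i + 1) % n]
    kinetic = m * ((right - x[i]) ** 2 + (x[i] - left) ** 2) / (2.0 * a)
    potential = 0.5 * a * m * omega ** 2 * x[i] ** 2
    return kinetic + potential


def metropolis_sweep(x, a, step, rng):
    """Propose one random change per lattice site and accept or reject it."""
    for i in range(len(x)):
        old_value = x[i]
        old_action = local_action(x, i, a)
        x[i] = old_value + rng.uniform(-step, step)
        delta = local_action(x, i, a) - old_action
        # Accept with probability min(1, exp(-delta)); otherwise restore the old value.
        if delta > 0 and rng.random() >= math.exp(-delta):
            x[i] = old_value


def estimate_x_squared(n_sites=32, a=0.5, n_thermal=500, n_measure=3000,
                       step=1.0, seed=1):
    """Estimate the average of x^2 by sampling paths on the lattice."""
    rng = random.Random(seed)
    x = [0.0] * n_sites              # one integration variable per lattice site
    for _ in range(n_thermal):       # let the path settle before measuring
        metropolis_sweep(x, a, step, rng)
    total = 0.0
    for _ in range(n_measure):       # each sweep yields one sampled "snapshot"
        metropolis_sweep(x, a, step, rng)
        total += sum(v * v for v in x) / n_sites
    return total / n_measure


if __name__ == "__main__":
    print("estimated <x^2>:", estimate_x_squared())
```

In the continuum limit this toy model's exact answer is 0.5 (in units where the mass, frequency, and Planck's constant are all 1), so the estimate should land near that value, with a small offset from the finite lattice spacing and from statistical noise. Shrinking `a` while adding sites reduces the spacing error, which mirrors the statement that the result becomes more accurate as the number of lattice points increases.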

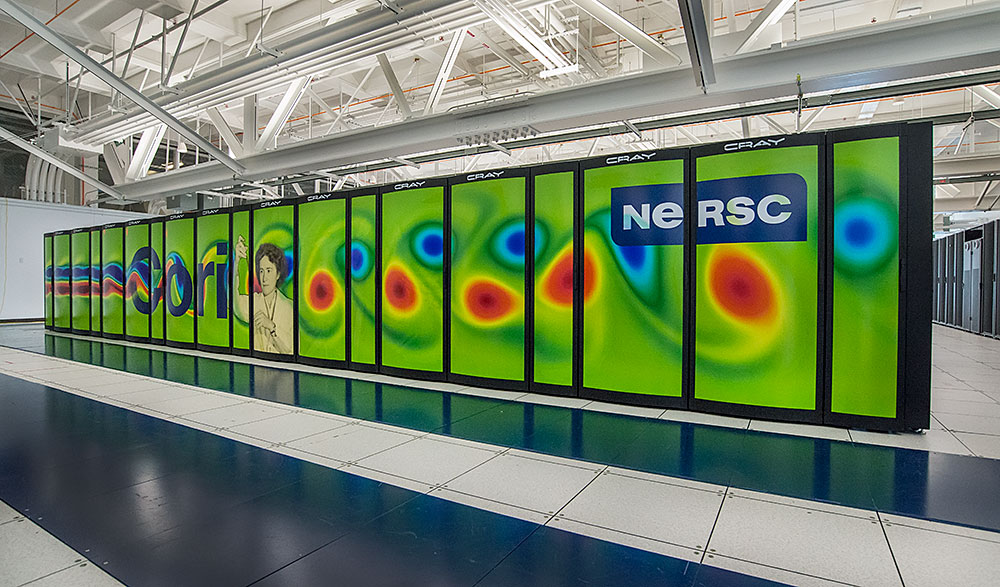

The Cori supercomputer at the National Energy Research Scientific Computing Center (NERSC), a DOE Office of Science user facility at DOE's Lawrence Berkeley National Laboratory. Credit: NERSC, Lawrence Berkeley National Laboratory

These complex calculations were performed on cutting-edge supercomputers. The first part of the work, generating samples or snapshots of the most likely quark and gluon fields, was performed on supercomputers located in the US, Japan, and the UK. The second and most complex step of extracting the actual kaon decay amplitudes was performed at the National Energy Research Scientific Computing Center (NERSC), a DOE Office of Science user facility at DOE’s Lawrence Berkeley National Laboratory.

But using the fastest computers is not enough. Even on these machines, the calculations are only possible with highly optimized computer codes, developed for the calculation by the authors.

“The precision of our results cannot be increased significantly by simply performing more calculations,” Kelly said. “Instead, in order to tighten our test of the Standard Model we must now overcome a number of more fundamental theoretical challenges. Our collaboration has already made significant strides in resolving these issues and coupled with improvements in computational techniques and the power of near-future DOE supercomputers, we expect to achieve much improved results within the next three to five years.”

The authors of this paper are, in alphabetical order: Ryan Abbott (Columbia), Thomas Blum (UConn), Peter Boyle (BNL & U of Edinburgh), Mattia Bruno (CERN), Norman Christ (Columbia), Daniel Hoying (UConn), Chulwoo Jung (BNL), Christopher Kelly (BNL), Christoph Lehner (BNL & U of Regensburg), Robert Mawhinney (Columbia), David Murphy (MIT), Christopher Sachrajda (U of Southampton), Amarjit Soni (BNL), Masaaki Tomii (UConn), and Tianle Wang (Columbia).

The majority of the measurements and analysis for this work were performed using the Cori supercomputer at NERSC, with additional contributions from the Hokusai machine at the Advanced Center for Computing and Communication at Japan’s RIKEN Laboratory and the IBM BlueGene/Q (BG/Q) installation at Brookhaven Lab (supported by the RIKEN BNL Research Center and Brookhaven Lab’s prime operating contract from DOE’s Office of Science). Additional supercomputing resources used to develop the lattice configurations included: the BG/Q installation at Brookhaven Lab, the Mira supercomputer at the Argonne Leadership Computing Facility (ALCF) at Argonne National Laboratory, Japan’s KEKSC 1540 computer, the UK Science and Technology Facilities Council DiRAC machine at the University of Edinburgh, and the National Center for Supercomputing Applications Blue Waters machine at the University of Illinois (funded by the U.S. National Science Foundation). NERSC and ALCF are DOE Office of Science user facilities. Individual researchers received support from various grants issued by the DOE Office of Science and other sources in the U.S. and abroad.

Brookhaven National Laboratory is supported by the U.S. Department of Energy’s Office of Science. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit https://www.energy.gov/science/
