Plotting the Future for Computing in High-Energy and Nuclear Physics

Hundreds of physicists and computation experts convene to discuss how to expand the limits of data collection and analysis

June 1, 2012

Special thanks to the members of the local organizing committee who made CHEP 2012 possible: Maureen Anderson, Mariette Faulkner, John DeStefano, Ognian Novakov, Ofer Rind (co-chair), and Tony Wong (co-chair), all from Brookhaven Lab; Kyle Cranmer and his graduate students at NYU; and Connie Potter of CERN. Thanks also to Krista Reimer of BlueNest Events, the operations staffs at NYU's Kimmel Center and Skirball Center, and Tallen Technologies for videotaping and poster board display services.

More than 500 physicists and computational scientists from around the globe, including many working at the world’s largest and most complex particle accelerators, met in New York City May 21-25 to discuss the development of the computational tools essential to the future of high-energy and nuclear physics. The 19th International Conference on Computing in High Energy and Nuclear Physics (CHEP) was hosted by the U.S. Department of Energy’s (DOE) Brookhaven National Laboratory and New York University — leaders in expanding the frontiers of data-intensive scientific research and computational analysis.

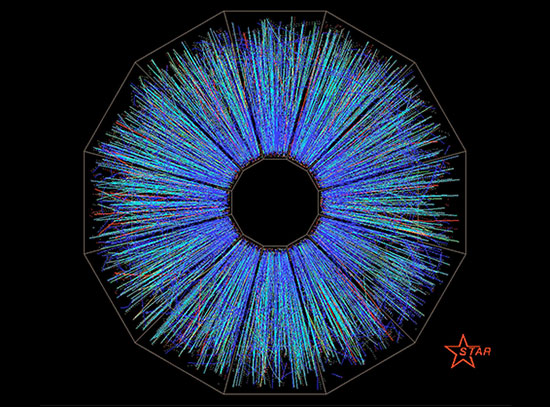

The conference was organized by scientists from Brookhaven Lab’s RHIC and ATLAS Computing Facility (RACF). The RACF provides computing services for Brookhaven’s Relativistic Heavy Ion Collider (RHIC), for the U.S.-based collaborators in the ATLAS experiment at Europe’s Large Hadron Collider (LHC), and for the collaborators in the Large Synoptic Survey Telescope (LSST) project. RHIC and the LHC are particle accelerators that recreate conditions of the early universe in billions of subatomic particle collisions to explore the fundamental forces and properties of matter. A central theme of the meeting was how to keep up, efficiently and cost-effectively, with the ever-increasing data processing and analysis needs of such complex experiments.

“This year’s conference, focusing on reviewing experiences gained with current computing technologies and, based on the latter, working on evolutionary or even revolutionary steps to take computing in High Energy and Nuclear Physics to the next level, offers us the opportunity to explore exciting new terrain,” said RACF director Michael Ernst in his opening remarks. “Let me invite you to approach this meeting with Columbus’ ‘spirit of discovery’ in mind, taking advantage of the many ways in which you too might explore the unfamiliar – and discover a great deal in the process.”

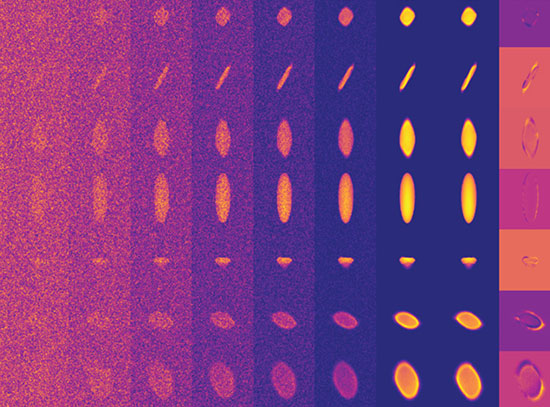

Through an interactive program of presentations, workshops, and poster sessions, participants were encouraged to share experiences with data processing at every stage. These range from how the computers closest to complex experiments such as those at RHIC and the LHC “know” which of the billions of events are important to record and analyze, to the final analyses of petabytes of data using computing resources distributed worldwide. Participants also explored ways to collaborate or adapt one another’s approaches to push beyond current limits while keeping costs down.

The view from DOE

“We live in interesting times, and they are getting more interesting,” said Glen Crawford, director of the Research and Technology Division of the Office of High Energy Physics (HEP) within the DOE Office of Science, referring both to the plethora of exciting physics findings emerging from all three frontiers of high-energy physics (energy, intensity, and cosmic) and the need to adapt the DOE strategic plan to respond to recent budgetary developments. He highlighted the essential role that DOE-supported computing resources play in advancing discoveries across frontiers, including how computational simulations are both guiding and validating experimental approaches, with benefits that extend well beyond the physics community to fields such as medicine, aerospace, and global communications.

In writing the next chapter of discovery, he emphasized the need for more commonality and community planning to avoid “reinventing the wheel.” The physics community, he said, “needs to own the science case and sell the science case” for the experiments they would like to do next. That’s not to say scientists shouldn’t take risks. “Risk taking and results are not mutually exclusive,” he said. “Part of maintaining leadership is to push for developments. Push back on what’s possible today to make something new possible tomorrow.”

Meeting the computing challenges

As if answering that call, the speakers in the plenary and breakout sessions that followed presented the current status of their research, the computing challenges they face, and a range of strategies to address them.

Joe Incandela, professor of physics at the University of California, Santa Barbara, and spokesperson for the CMS collaboration at the Large Hadron Collider, gave highlights from the LHC, including the search for the Higgs particle and prospects for 2012. “Computing is the final step in a long journey to realizing the full physics potential of the LHC,” Incandela said.

Various speakers from the physics community and the computing industry presented approaches to maximize data-crunching capacity by bringing the latest hardware with many execution units, such as many-core processors and graphics processing units (GPUs), into the data analysis process. Among the most discussed topics were improvements to the experiments’ computing models that take advantage of the abundant network capacity now connecting sites around the world. Speakers also described new services, now becoming available, that are expected to transform distributed computing by offering easy, flexible deployment and transparent access to an integrated, powerful system able to meet the demands of data-intensive science across a broad range of research disciplines.

Networking for the future

At the Monday evening reception, Seth W. Pinsky, president of the New York City Economic Development Corporation (NYCEDC), enthusiastically welcomed the computing group to a city he hopes will play a major role in the technological advances of the 21st century. He described a range of initiatives aimed at expanding the city’s economy and positioning New York as an international center for innovation, including the recently announced plan to establish a graduate scientific research center on Roosevelt Island in partnership with Cornell University and Technion-IIT.

“Cities that are leaders in the innovation economy in the 21st century will be leaders in technology, have significant creative sectors, and will have a vibrant business community with a dynamic mix of world leading big companies and pioneering start-ups,” he said. Attracting renowned research institutions such as Brookhaven Lab and NYU to convene high-tech meetings such as CHEP in New York City, and establishing a graduate center as a hub of applied-sciences activity in the heart of the city, he said, position New York among the leaders in science-driven innovation and advancement.

As Michael Ernst concluded, “The meeting was a great opportunity for real-world networking among the people who have built a computing ecosystem that innovates via its diversity.” There are countless technological and operational examples of this innovation, he said: an idea that starts in a small university group or a fringe community spreads across the entire ecosystem through the relationships among its many partners and, over time, becomes the new default on the merits of the initial idea, its implementation and hardening, and ultimately its ease of use. “This system of evolutionary development is a solid foundation for future scientific discoveries at Brookhaven, the LHC, and beyond.”

2012-3098 | INT/EXT | Newsroom