Mega-Bucks from Russia Seed Development of "Big Data" Tools

Brookhaven physicist awarded Russian "mega-grant" to develop software and data management tools for science

February 20, 2014

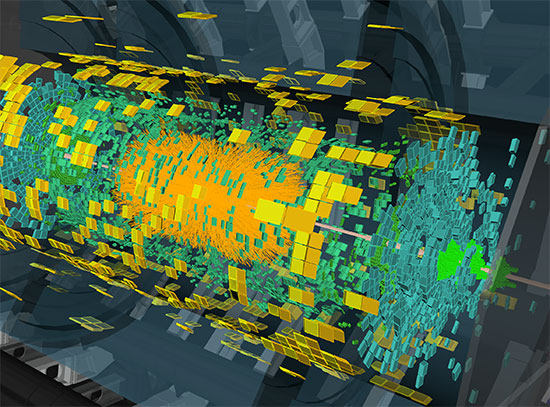

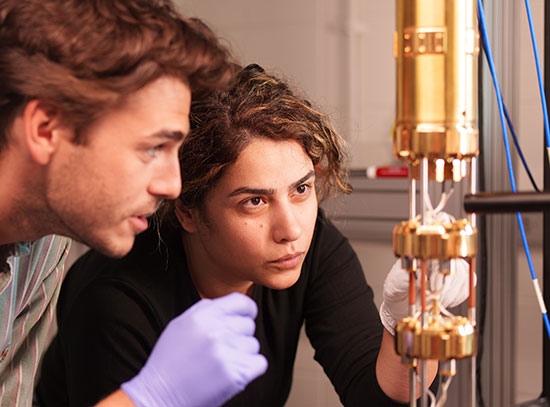

UPTON, NY—The Russian Ministry of Education and Science has awarded a $3.4 million "mega-grant" to Alexei Klimentov, Physics Applications Software Group Leader at the U.S. Department of Energy's Brookhaven National Laboratory, to develop new "big data" computing tools for the advancement of science. The project builds on the success of a workload and data management system built by Klimentov and collaborators to process huge volumes of data from the ATLAS experiment at Europe's Large Hadron Collider (LHC), where the famed Higgs boson—the source of mass for fundamental particles—was discovered. Brookhaven is the lead U.S. laboratory for the ATLAS experiment, and hosts the Tier 1 computing center for data processing, storage and archiving.

"The increasing capabilities to collect, process, analyze, and extract knowledge from large datasets are pushing the boundaries of many areas of modern science and technology," Klimentov said. "This grant recognizes how the computing tools we developed to explore the mysteries of fundamental particles like the Higgs boson can find widespread application in many other fields in and beyond physics. For example, research in nuclear physics, astrophysics, molecular biology, and sociology generates extremely large volumes of data that needs to be accessed by collaborators around the world. Sophisticated computing software can greatly enhance progress in these fields by managing the distribution and processing of such data."

The project will be carried out at Russia's National Research Center Kurchatov Institute (NRC-KI) in Moscow, the lead Russian organization involved in research at the LHC, in collaboration with scientists from ATLAS, other LHC experiments, and other data-intensive research projects in Europe and the U.S. It will make use of computational infrastructure provided by NRC-KI to develop, code, and implement software for a novel "big data" management system that has no current analog in science or industry.

Computing tools for science

Though nothing of this scope currently exists, the new tools will complement a system developed by Brookhaven physicist Torre Wenaus and University of Texas at Arlington physicist Kaushik De for processing ATLAS data. That system, called PanDA (for Production and Distributed Analysis), is used by thousands of physicists around the world in the LHC's ATLAS collaboration.

PanDA links the computing hardware associated with ATLAS—located at 130 computing centers around the world that manage more than 140 petabytes, or 140 million gigabytes, of data—allowing scientists to efficiently analyze the tens of millions of particle collisions taking place at the LHC each day the collider is running. "That data volume is comparable to Google's entire archive on the World Wide Web," Klimentov said.

Expanding access

In September 2012, the U.S. Department of Energy's Office of Science awarded $1.7 million to Klimentov (Brookhaven) and UT Arlington to develop a version of PanDA that expands access to scientists in fields beyond high-energy physics and the Worldwide LHC Computing Grid.

For the DOE-sponsored effort, Klimentov's team is working together with physicists from Argonne National Laboratory, UT Arlington, University of Tennessee at Knoxville, and the Oak Ridge Leadership Computing Facility (OLCF) at Oak Ridge National Laboratory. Part of the team has already set up and tailored PanDA software at the OLCF, pioneering a connection of OLCF supercomputers to ATLAS and the LHC Grid facilities.

"We are now exploring how PanDA might be used for managing computing jobs that run on OLCF's Titan supercomputer to make highly efficient use of Titan's enormous capacity. Using PanDA's ability to intelligently adapt jobs to the resources available could conceivably 'generate' 300 million hours of supercomputing time for PanDA users in 2014 and 2015," he said.

The main idea is to reuse, as much as possible, existing components of the PanDA system that are already deployed on the LHC Grid for analysis of physics data. "Clearly, the architecture of a specific computing platform will affect incorporation into the system, but it is beneficial to preserve most of the current system components and logic," Klimentov said. In one specific instance, PanDA was installed on Amazon's Elastic Compute Cloud, a web-based computing service, and is being used to give Large Synoptic Survey Telescope scientists access to computing resources at Brookhaven Lab and later across the country.

The new system being developed for LHC and nuclear physics experiments, called megaPanDA, will complement PanDA. While PanDA handles data processing, the new system will add support for large-scale data handling.

"The challenges posed by 'big data' are not limited by the size of the scientific data sets," Klimentov said. "Data storage and data management certainly pose serious technical and logistical problems. Arguably, data access poses an equal challenge. Requirements for rapid, near real-time data processing and rapid analysis cycles at globally distributed, heterogeneous data centers place a premium on the efficient use of available computational resources. Our new workload and data management system, megaPanDA, will efficiently handle both the distribution and processing of data to help address these challenges."

PanDA was created with funding from the Department of Energy's Office of Science and the National Science Foundation.

DOE's Office of Science is the single largest supporter of basic research in the physical sciences in the United States, and is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.
