Department of Energy Announces $21.4 Million for Quantum Information Science Research

Projects linked to both particle physics and fusion energy

October 1, 2019

The following news release was issued on Aug. 26, 2019, by the U.S. Department of Energy (DOE). It announces funding that DOE has awarded for research in quantum information science related to particle physics and fusion energy sciences. Scientists at DOE’s Brookhaven National Laboratory are principal investigators on two of the 21 funded particle physics projects.

WASHINGTON, D.C.—Today, the U.S. Department of Energy (DOE) announced $21.4 million in funding for research in Quantum Information Science (QIS) related to both particle physics and fusion energy sciences.

“QIS holds great promise for tackling challenging questions in a wide range of disciplines,” said Under Secretary for Science Paul Dabbar. “This research will open up important new avenues of investigation in areas like artificial intelligence while helping keep American science on the cutting edge of the growing field of QIS.”

Funding of $12 million will be provided for 21 projects of two to three years’ duration in particle physics. Efforts will range from the development of highly sensitive quantum sensors for the detection of rare particles, to the use of quantum computing to analyze particle physics data, to quantum simulation experiments connecting the cosmos to quantum systems.

Funding of $9.4 million will be provided for six projects of up to three years in duration in fusion energy sciences. Research will examine the application of quantum computing to fusion and plasma science, the use of plasma science techniques for quantum sensing, and the quantum behavior of matter under high-energy-density conditions, among other topics.

Fiscal Year 2019 funding for the two initiatives totals $18.4 million, with out-year funding for the three-year particle physics projects contingent on congressional appropriations.

Projects were selected by competitive peer review under two separate Funding Opportunity Announcements (and corresponding announcements for DOE laboratories) sponsored respectively by the Office of High Energy Physics and the Office of Fusion Energy Sciences within the Department’s Office of Science.

A list of particle physics projects can be found here and fusion energy sciences projects here, both under the heading “What’s New.”

Brookhaven Lab projects

Brookhaven Lab is leading two projects that were awarded funding:

Quantum Convolutional Neural Networks for High-Energy Physics Data Analysis

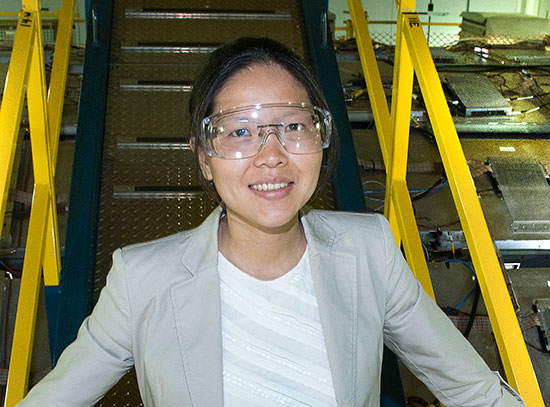

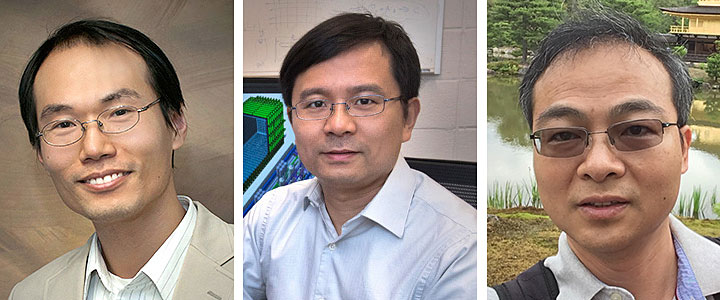

(From left to right) Brookhaven computational scientist Shinjae Yoo (principal investigator), Brookhaven physicist Chao Zhang, and Stony Brook University quantum information theorist Tzu-Chieh Wei are developing deep learning techniques to efficiently handle sparse data using quantum computer architectures. Data sparsity is common in high-energy physics experiments.

Over the past few decades, the scale of high-energy physics (HEP) experiments and the size of the data they produce have grown significantly. For example, in 2017, the data archive of the Large Hadron Collider (LHC) at CERN in Europe, the particle collider where the Higgs boson was discovered, surpassed 200 petabytes. For perspective, consider Netflix streaming: a 4K movie stream uses about seven gigabytes per hour, so 200 petabytes would be equivalent to more than 3,000 years of 4K streaming. Data generated by future detectors and experiments such as the High-Luminosity LHC, the Deep Underground Neutrino Experiment (DUNE), Belle II, and the Large Synoptic Survey Telescope (LSST) will move into the exabyte range (an exabyte is 1,000 times larger than a petabyte).
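
The streaming comparison in the previous paragraph is simple arithmetic. A minimal Python check, assuming decimal units (1 PB = 1,000,000 GB), a 365.25-day year, and the quoted figure of about 7 GB per hour, lands at a little over 3,200 years, in line with the approximate figure in the text.

```python
# Sanity check of the 4K-streaming comparison.
# Assumptions: decimal units (1 PB = 1,000,000 GB) and a 365.25-day year.
GB_PER_PB = 1_000_000
HOURS_PER_YEAR = 365.25 * 24


def streaming_years(archive_pb: float, gb_per_hour: float = 7.0) -> float:
    """Years of continuous streaming needed to consume an archive of the given size."""
    hours = archive_pb * GB_PER_PB / gb_per_hour
    return hours / HOURS_PER_YEAR


years = streaming_years(200)
print(f"200 PB is about {years:,.0f} years of 4K streaming")
```

Because the result scales linearly with archive size, an exabyte-scale archive (1,000 PB) would correspond to five times as many years.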

These large data volumes present significant computing challenges for simulating particle collisions, transforming raw data into physical quantities such as particle position, momentum, and energy (a process called event reconstruction), and performing data analysis. As detectors become more sensitive, simulation capabilities improve, and data volumes increase by orders of magnitude, the need for scalable data analytics solutions will only increase.

A viable solution could be QIS. Quantum computers and algorithms have the potential to solve certain problems exponentially faster than is possible classically. The Quantum Convolutional Neural Networks (CNNs) for HEP Data Analysis project will exploit this “quantum advantage” to develop machine learning techniques for handling data-intensive HEP applications. Neural networks are a class of machine learning algorithms loosely modelled on the architecture of neuron connections in the human brain; networks with many layers form the basis of deep learning. One type of neural network is the CNN, which is most commonly used for computer vision tasks such as facial recognition. CNNs are typically composed of three types of layers: convolution layers (convolution is a linear mathematical operation) that extract meaningful features from an image, pooling layers that reduce the number of parameters and computations, and fully connected layers that classify the extracted features into a label.
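
To make the three layer types concrete, here is a minimal classical CNN forward pass in Python with NumPy. It is an illustrative sketch only, not the project's quantum-accelerated algorithm: the 8×8 input, 3×3 kernel, 2×2 pooling window, two output classes, and random weights are all hypothetical choices.

```python
import numpy as np


def conv2d(image, kernel):
    """'Valid' 2-D convolution layer (implemented as cross-correlation, as most CNN libraries do)."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x):
    return np.maximum(x, 0.0)


def max_pool(x, size=2):
    """Pooling layer: keep the maximum of each non-overlapping size x size block."""
    h, w = x.shape[0] // size * size, x.shape[1] // size * size
    x = x[:h, :w]
    return x.reshape(h // size, size, w // size, size).max(axis=(1, 3))


def fully_connected(features, weights, bias):
    """Fully connected layer: map the flattened features to one score per label."""
    return features @ weights + bias


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


rng = np.random.default_rng(0)
image = rng.random((8, 8))                  # hypothetical 8x8 input image
kernel = rng.standard_normal((3, 3))        # hypothetical learned filter
fmap = relu(conv2d(image, kernel))          # convolution -> 6x6 feature map
pooled = max_pool(fmap)                     # pooling -> 3x3
flat = pooled.ravel()                       # 9 features
weights = rng.standard_normal((flat.size, 2))
bias = np.zeros(2)
probs = softmax(fully_connected(flat, weights, bias))  # probabilities for 2 labels
print(probs)
```

In a trained network the kernel and weights would be learned from labeled data; here they are random, so the output probabilities carry no meaning beyond showing the data flow through the layers.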

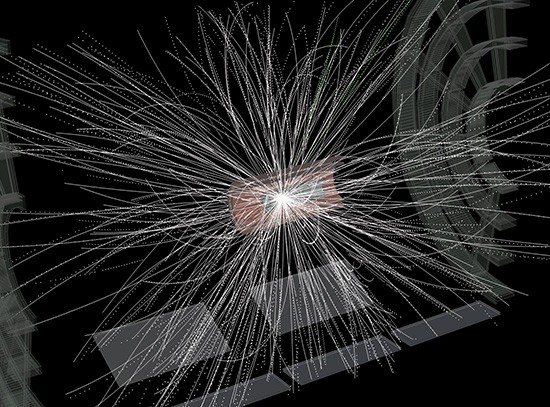

Neutrino interaction events are characterized by extremely sparse data, as can be seen in the above 3-D image reconstruction from 2-D measurements.

In this case, the scientists on the project will develop a quantum-accelerated CNN algorithm and quantum memory optimized to handle extremely sparse data. Data sparsity is common in HEP experiments, in which there is a low probability of producing exotic and interesting signals; thus, rare events must be extracted from a much larger amount of data. For example, even though the data from a single DUNE event could be on the order of gigabytes, the signals represent one percent or less of those data. The team will demonstrate the algorithm on DUNE data challenges, such as classifying images of neutrino interactions and fitting particle trajectories. Because the DUNE particle detectors are currently under construction and will not become operational until the mid-2020s, simulated data will be used initially.
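
One common classical way to exploit this kind of sparsity (an assumption here, not necessarily the approach the project will take) is to store only the active pixels in coordinate form and evaluate a convolution only at those sites, in the style of submanifold sparse convolution. The toy 100×100 "event" with a single 50-pixel diagonal track and the all-ones 3×3 kernel below are hypothetical, not DUNE data.

```python
# Toy illustration of sparse storage and sparse convolution.
# The image, track, and kernel are hypothetical, not DUNE data.


def to_coo(image, threshold=0.0):
    """Return (row, col, value) triples for pixels above threshold (coordinate format)."""
    return [
        (r, c, v)
        for r, row in enumerate(image)
        for c, v in enumerate(row)
        if v > threshold
    ]


def sparse_conv_active_sites(coo, kernel):
    """Apply an odd-sized square kernel only at active sites, reading only active neighbors.

    The output is defined solely at the input's active sites, so sparsity is preserved.
    """
    active = {(r, c): v for r, c, v in coo}
    k = len(kernel) // 2
    out = {}
    for (r, c) in active:
        acc = 0.0
        for dr in range(-k, k + 1):
            for dc in range(-k, k + 1):
                neighbor = active.get((r + dr, c + dc))
                if neighbor is not None:
                    acc += kernel[dr + k][dc + k] * neighbor
        out[(r, c)] = acc
    return out


size = 100
image = [[0.0] * size for _ in range(size)]
for i in range(50):  # a single straight "track" of 50 hit pixels
    image[i][i] = 1.0

coo = to_coo(image)
occupancy = len(coo) / (size * size)
kernel = [[1.0] * 3 for _ in range(3)]
features = sparse_conv_active_sites(coo, kernel)

print(f"active pixels: {len(coo)} ({occupancy:.1%} of the image)")
print(f"kernel evaluations: {len(features)} sparse vs {(size - 2) ** 2} dense")
```

With only half a percent of pixels active, the sparse version evaluates the kernel at 50 sites instead of roughly 10,000, which is the kind of saving that motivates sparsity-aware algorithms.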

“Customizing a CNN to work efficiently on sparse data with a quantum computer architecture will not only benefit DUNE but also other HEP experiments,” said principal investigator Shinjae Yoo, a computational scientist in the Computer Science and Mathematics Department of Brookhaven Lab’s Computational Science Initiative (CSI).

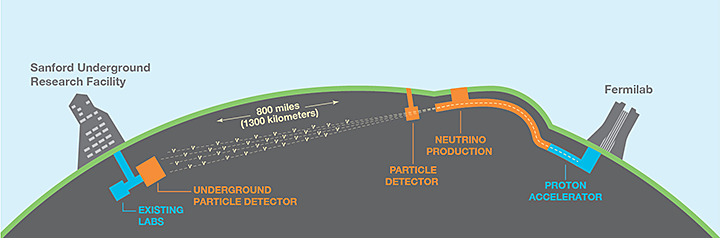

DUNE is an international experiment to transform our understanding of neutrinos, the most abundant matter particles in the universe. There will be two neutrino detectors: one to record particle interactions near the source of the beam at Fermilab in Illinois, and a second, larger detector installed more than a kilometer underground at the Sanford Underground Research Laboratory in South Dakota (1,300 kilometers downstream of the source). These detectors will enable scientists to research several fundamental questions about the nature of matter and evolution of the universe. Credit: dunescience.org.

The co-investigator is Brookhaven physicist Chao Zhang. Yoo and Zhang will collaborate with quantum information theorist Tzu-Chieh Wei, an associate professor at Stony Brook University’s C.N. Yang Institute for Theoretical Physics.

Quantum Astrometry

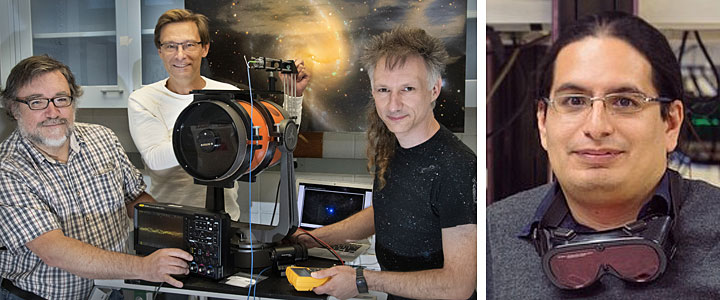

(Left photo, left to right) Brookhaven Lab physicists Paul Stankus, Andrei Nomerotski (principal investigator), Sven Herrmann, and (right photo) Eden Figueroa (a Stony Brook University joint appointee) are developing a new quantum technique that will enable more precise measurements for studies in astrophysics and cosmology. They will use a fiber-coupled telescope with adaptive optics (seen in left photo) for the proof-of-principle measurements.

The resolution of any optical telescope is fundamentally limited by the size of the aperture, or the opening through which particles of light (photons) are collected, even after the effects of atmospheric turbulence and other fluctuations have been corrected for. Optical interferometry—a technique in which light from multiple telescopes is combined to synthesize a large aperture between them—can improve resolution. Though interferometers can provide the clearest images of very small astronomical objects such as distant galaxies, stars, and planetary systems, the instruments’ intertelescope connections are necessarily complex. This complexity limits the maximum separation distance (“baseline”)—and hence the ultimate resolution.
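
For reference, the textbook diffraction estimates behind this argument are given below (standard approximations, not figures from the project). For a single circular aperture of diameter D observing at wavelength λ, the Rayleigh criterion gives the smallest resolvable angle; for an interferometer, the baseline B plays the role of the aperture:

```latex
\theta_{\text{telescope}} \approx 1.22\,\frac{\lambda}{D},
\qquad
\theta_{\text{interferometer}} \approx \frac{\lambda}{B}
```

Because a baseline can be made far larger than any single mirror, lengthening B is the most direct route to finer angular resolution, which is why limits on the baseline matter.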

An alternative approach to overcoming the aperture resolution limit is to quantum mechanically interfere star photons with distributed entangled photons at separated observing locations. This approach exploits the phenomenon of quantum entanglement, which occurs when two particles such as photons are “linked.” Though these pairs are not physically connected, measurements involving them remain correlated regardless of the distance between them.

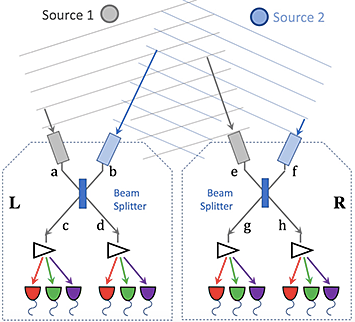

Schematic of two-photon interferometry. If the two photons are close enough together in time and frequency, the pattern of coincidences between measurements at detectors c and d in L and detectors g and h in R will be sensitive to the phase differences. The phase differences from each source can be related to their angular position in the sky.

The Quantum Astrometry project seeks to exploit this phenomenon to develop a new quantum technique for high-resolution astrometry—the science of measuring the positions, motions, and magnitudes of celestial objects—based on two-photon interferometry. In traditional optical interferometry, the optical path for the photons from the telescopes must be kept highly stable, so the baseline for today’s interferometers is about 100 meters. At this baseline, the resolution is sufficient to directly see exoplanets or track stars orbiting the supermassive black hole in the center of the Milky Way. One goal of quantum astrometry is to reduce the demands for intertelescope links, thereby enabling longer baselines and higher resolutions.
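
To put the 100-meter figure in perspective, a rough Python estimate using the standard interferometer resolution θ ≈ λ/B gives about a milliarcsecond at visible wavelengths. The 550-nanometer wavelength and the longer comparison baselines are illustrative assumptions.

```python
import math

MAS_PER_RADIAN = 180 / math.pi * 3600 * 1000  # milliarcseconds per radian


def resolution_mas(baseline_m: float, wavelength_m: float = 550e-9) -> float:
    """Approximate angular resolution (lambda / B) in milliarcseconds.

    The 550 nm default is an assumed visible wavelength, not a project parameter.
    """
    return wavelength_m / baseline_m * MAS_PER_RADIAN


for baseline in (100, 1_000, 10_000):
    print(f"B = {baseline:>6,} m -> ~{resolution_mas(baseline):.4f} mas")
```

Since resolution scales as 1/B, each tenfold increase in baseline buys a tenfold finer resolution, which is the motivation for relaxing the intertelescope link requirements.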

Pushing the resolution even further would allow more precise astrometric measurements for studies in astrophysics and cosmology. For example, black hole accretion discs (flat astronomical structures made up of rapidly rotating gas that slowly spirals inward) could be directly imaged to test theories of gravity. An orders-of-magnitude higher resolution would also enable scientists to refine measurements of the expansion rate of the universe, map gravitational microlensing events (temporary brightening of distant objects when light is bent by another object passing through our line of sight) to probe the nature of dark matter (a type of “invisible” matter thought to make up most of the universe’s mass), and measure the 3-D “peculiar” velocities of stars (their individual motion with respect to that of other stars) across the galaxy to determine the forces acting on all stars.

In classical interferometry, photons from an astronomical source strike two telescopes with some relative delay (phase difference), which can be determined from the interference pattern formed when the light from the two telescopes is combined. Using entangled photon pairs, sent simultaneously to both stations to interfere with the star photons, would allow arbitrarily long baselines and a much finer measurement of this relative phase difference, and hence more precise astrometry.
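
The link between phase difference and sky position can be sketched with the standard geometric-delay relation for two stations separated by a baseline B: light from a source at angle θ from the direction perpendicular to the baseline travels an extra path B sin θ to one station, giving a phase difference φ = 2πB sin θ / λ. This classical relation is not a model of the entangled two-photon scheme itself; it only shows why a longer baseline turns the same phase uncertainty into a finer angular uncertainty. The wavelength, source angle, baselines, and 0.1-radian phase uncertainty below are hypothetical.

```python
import math


def phase_difference(theta_rad, baseline_m, wavelength_m):
    """Unwrapped phase lag (radians) between two stations for a source at angle theta."""
    extra_path_m = baseline_m * math.sin(theta_rad)
    return 2 * math.pi * extra_path_m / wavelength_m


def angle_from_phase(phi_rad, baseline_m, wavelength_m):
    """Invert phase_difference; assumes phi is already unwrapped (no 2*pi ambiguity)."""
    extra_path_m = phi_rad * wavelength_m / (2 * math.pi)
    return math.asin(extra_path_m / baseline_m)


def angle_uncertainty(phase_sigma_rad, baseline_m, wavelength_m, theta_rad=0.0):
    """Propagate a phase error to an angle error via d(phi)/d(theta) = 2*pi*B*cos(theta)/lambda."""
    return phase_sigma_rad * wavelength_m / (2 * math.pi * baseline_m * math.cos(theta_rad))


wavelength = 550e-9  # assumed visible wavelength, meters
theta = 1e-6         # hypothetical source offset, radians
for baseline in (100.0, 10_000.0):
    phi = phase_difference(theta, baseline, wavelength)
    recovered = angle_from_phase(phi, baseline, wavelength)
    sigma = angle_uncertainty(0.1, baseline, wavelength)
    print(f"B = {baseline:>8,.0f} m: phase = {phi:.2f} rad, "
          f"recovered theta = {recovered:.3e} rad, angle error ~ {sigma:.2e} rad")
```

In practice only the phase modulo 2π is observed, so real instruments must resolve the fringe ambiguity; the sketch sidesteps this by working with unwrapped phases.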

“This is a very exploratory project where for the first time we will test ideas of two-photon optical interferometry using quantum entanglement for astronomical observations,” said principal investigator Andrei Nomerotski, a physicist in the Lab’s Cosmology and Astrophysics Group. “We will start with simple proof-of-principle experiments in the lab, and in two years, we hope to have a demonstrator with real sky observations.”

“It’s an example of how quantum techniques can open new ranges for scientific sensors and detectors,” added Paul Stankus, a physicist in Brookhaven’s Instrumentation Division who is working on QIS.

The other team members are Brookhaven physicist Sven Herrmann, a collaborator on several astrophysics projects, including LSST, and Brookhaven–Stony Brook University joint appointee Eden Figueroa, a leading figure in quantum communication technology.

Brookhaven National Laboratory is supported by the U.S. Department of Energy’s Office of Science. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.
