Developing Innovative AI Tools for Nanoscience with Shray Mathur

Interview with a CFN scientific associate

June 20, 2025

Shray Mathur (Kevin Coughlin/Brookhaven National Laboratory)

Shray Mathur is a scientific associate at the Center for Functional Nanomaterials (CFN), where he studies artificial intelligence (AI) and machine learning (ML) models that can be applied to nanoscience research. Among his projects is VISION, the first voice-controlled AI assistant for synchrotron X-ray nanomaterials experiments at Brookhaven’s National Synchrotron Light Source II (NSLS-II). Both CFN and NSLS-II are U.S. Department of Energy (DOE) user facilities located at DOE’s Brookhaven National Laboratory.

What is your background, and what led you to join CFN?

I received my undergraduate degree in computer science in India and then completed a master’s degree in the Electrical and Computer Engineering Department at the University of Texas at Austin. Working with Professor Joydeep Ghosh, I was part of a track focused on applied AI and ML. I used those skills to study how AI and ML can be applied to climate science research, developing interpretable generative models for precipitation forecasting.

I graduated from UT Austin in the summer of 2024 and joined Brookhaven right after, in July of 2024. My graduate work was in a different scientific domain than what I am doing now at CFN, but those skills have been easy to carry over.

What drew you to CFN and Brookhaven Lab?

I have always been interested in applied machine learning for science and in building real-world systems that can actually be deployed to researchers or labs. When I heard about the VISION project from CFN researcher Esther Tsai, one of the leaders of that work, I was very excited. I loved the idea of helping develop an AI-driven system that researchers would use at a scientific facility. That was a huge motivator for me to come to CFN, as was the chance to work on a bigger project like the scientific exocortex.

What is your role in the VISION project?

VISION, the first voice-controlled AI interface for X-ray scattering experiments, was my first project when I arrived at CFN. At that time, it was a work in progress: an early-stage prototype with limited capabilities that relied on older models and could recognize only a small set of text-based instructions. Since then, I’ve helped the VISION team substantially expand its functionality. It now supports audio input and incorporates state-of-the-art large language models (LLMs) to handle a much broader and more complex range of user inputs, making it far more powerful and versatile.

We have been improving it over the last several months, and it is now a functioning AI assistant that enables natural language interaction between researchers and complex scientific instrumentation. For now, we are focused on the instruments found at synchrotron beamlines: VISION has launched and is being tested at the NSLS-II Complex Materials Scattering (CMS) beamline.

As an AI assistant, VISION can handle a range of tasks. The idea is that a user simply tells VISION, in plain language, what they’d like to do at an instrument, and the AI companion, tailored to that instrument, takes on the task. For example, users can tell VISION to run an experiment, launch data analysis, take notes during an experiment, or engage other software protocols, all through a single user interface. The user gives a natural language instruction, and VISION determines which model to call in the background.

We are working to expand its utility at CMS and to make it available at other NSLS-II beamlines, such as Soft Matter Interfaces (SMI). VISION’s architecture is modular, so it is easy to extend or adapt to different beamlines and potentially even to other synchrotrons. We’re also working on making VISION more conversational and more reliable.

We’ve recently published some of this work in Machine Learning: Science and Technology.

What other developments in AI are you involved with at CFN?

We have been working on a nanoscience chatbot, which we’ve also incorporated into VISION. The chatbot allows users to search published research, ask questions about the beamline, and access relevant scientific information. We’re working to make it more conversational: not just a question-answering tool, but a brainstorming partner that understands past research, knows the beamline setup, and can engage in open-ended dialogue. While it’s grounded in scientific knowledge, it can also handle general queries, much like ChatGPT and other widely used chatbots.

Beyond knowledge discovery through chatbots, we’re exploring how LLMs can contribute to the upstream stages of scientific research, specifically hypothesis generation. As part of this effort, I’m evaluating the scientific creativity of LLMs. We’re investigating whether an LLM’s scientific creativity (its ability to “think” about novel ways to attack a scientific problem) scales with its general creativity. As models become bigger and more powerful, you’d expect their creativity, both general and scientific, to be correlated with their size. We’re testing that by presenting the models with a set of open-ended scientific questions from physics, chemistry, biology, nanoscience, and other domains and analyzing their ability to generate unique and meaningful responses.

Already, we’re learning that the scientific creativity of an LLM doesn’t necessarily scale with its size and power. When you narrow in on specific categories and look at individual questions, the most powerful model doesn’t always give the most creative responses. These insights are essential as we move toward building systems for autonomous scientific ideation: agents that can brainstorm new hypotheses, suggest experiments, and drive discovery. The project is ongoing, but the results so far are interesting.

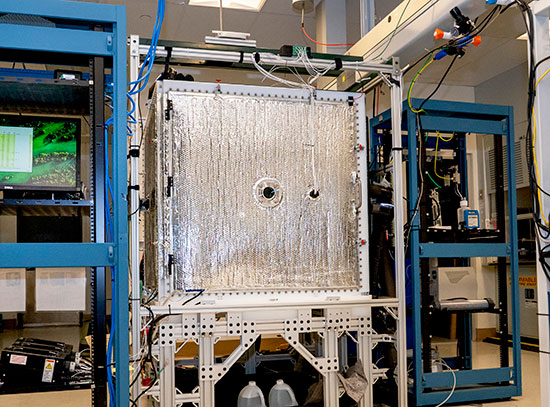

Finally, another project in progress is a digital twin for beamlines: a simulator that lets researchers virtually test VISION-generated code before running actual experiments. The simulator predicts outcomes from input code, which moves us beyond code-to-code comparisons to more meaningful evaluations at the level of beamline events. It also acts as a safeguard by flagging potential errors ahead of time, which is especially useful when samples are limited or beamline time is constrained.

Together, these components (autonomous experimentation via VISION, knowledge retrieval via the nanoscience chatbot, autonomous ideation via LLMs, and reliable evaluation via the digital twin) are part of a larger vision of a scientific exocortex. The novel concept is to develop a swarm of AI agents, each focused on a different aspect of the scientific process, that communicate and collaborate with one another to help scientists accelerate their research.

You’re also doing some additional work on chatbots. Tell us more about that.

I’m working on some general-purpose science chatbots, which I think could be useful to many researchers at CFN. Building even a simple chatbot becomes very tricky when it has to incorporate so many documents, in this case journal papers and other literature. And you want the answers to be both scientifically sound and technically detailed enough to be helpful.

What are some things you enjoy doing when you aren’t working?

My main hobby is singing and playing the guitar. Music is an important part of my life outside of work, although I have brought a little of it to CFN, too. I played at last summer’s annual CFN barbecue, which was a lot of fun.

Brookhaven National Laboratory is supported by the Office of Science of the U.S. Department of Energy. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit science.energy.gov.

Follow @BrookhavenLab on social media. Find us on Instagram, LinkedIn, X, and Facebook.
