Brookhaven Presents Big Data Pilot Projects at Supercomputing Conference

March 18, 2015

As the precision, energy, and output of scientific instruments such as particle colliders, light sources, and microscopes increase, so do the volume and complexity of the data they generate. Scientists confronting this high-tech version of “information overload” face choices about which data to capture, share, and analyze to extract the pieces essential to new discoveries. High-performance computing (HPC) architectures have played an essential role in sorting the wheat from the chaff, and new strategies are evolving to make optimal use of these resources and accelerate the pace of discovery in a world of ever-increasing data demands.

As part of a U.S. Department of Energy (DOE) effort to showcase new data-handling strategies, scientists from DOE’s Brookhaven National Laboratory demonstrated two pilot projects for modeling and processing large-volume data sets at the SC14 (Supercomputing 2014) conference held in New Orleans last November. The first project is an effort to trickle small “grains” of data generated by the ATLAS experiment at the Large Hadron Collider (LHC) in Europe into small pockets of unused computing time sandwiched between big jobs on high-performance supercomputers; the second illustrates how advances in computing and applied mathematics can improve the predictive value of models used to design new materials.
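
Slotting small work units into idle time between large jobs resembles what batch schedulers call backfilling. The sketch below is a toy, single-node, first-fit version built on stated assumptions: big jobs are given as hypothetical `(start, end)` intervals, each grain has a known duration, and the helper names `find_gaps` and `backfill` are invented for illustration. It is not the mechanism ATLAS or any particular supercomputer actually uses.

```python
from typing import List, Tuple

Interval = Tuple[float, float]  # (start, end) on one node, arbitrary time units


def find_gaps(big_jobs: List[Interval], horizon: float) -> List[Interval]:
    """Return idle intervals in [0, horizon] not covered by any big job.

    Assumes (hypothetically) that every big job lies within [0, horizon].
    """
    gaps: List[Interval] = []
    cursor = 0.0
    for start, end in sorted(big_jobs):
        if start > cursor:
            gaps.append((cursor, start))
        cursor = max(cursor, end)  # overlapping big jobs are merged
    if cursor < horizon:
        gaps.append((cursor, horizon))
    return gaps


def backfill(gaps: List[Interval], grains: List[float]) -> List[Tuple[int, float, float]]:
    """First-fit: place each small grain into the first gap with enough room left.

    Returns (grain_index, start, end) for every grain placed; grains that fit
    nowhere are left out of the result.
    """
    free = [list(g) for g in gaps]  # each entry: [next_free_time, gap_end]
    placed = []
    for i, duration in enumerate(grains):
        for slot in free:
            if slot[1] - slot[0] >= duration:
                placed.append((i, slot[0], slot[0] + duration))
                slot[0] += duration  # shrink the remaining free time in this gap
                break
    return placed
```

For example, with big jobs occupying `(0, 4)` and `(5, 9)` on a 12-unit horizon, the gaps are `(4, 5)` and `(9, 12)`, and grains of length 0.5, 0.5, 2.0, and 1.5 place the first three while the last one fits nowhere; a fuller design would need to hold such grains for a later idle window.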

Nanostructure Complex Materials Modeling

Simon Billinge

Materials with chemical, optical, and electronic properties driven by structures measuring billionths of a meter could lead to improved energy technologies—from more efficient solar cells to longer-lasting energy-dense batteries. Scientific instruments such as those at Brookhaven Lab’s Center for Functional Nanomaterials (CFN) and the just-opened National Synchrotron Light Source II (NSLS-II), both DOE Office of Science User Facilities, offer new ways to study materials at this nanometer length scale—including as they operate in actual devices.

These experiments produce huge amounts of data, revealing important details about materials. But right now scientists don’t have the computational tools they need to use that data for rational materials design, a step that’s essential to accelerate the discovery of materials with the performance characteristics required for real-world, large-scale implementation. To achieve that goal, scientists need a new way to combine data from a range of experiments with theoretical descriptions of materials’ behavior into valid predictive models they can use to develop powerful new materials.

“There is a very good chance that expertise in high-performance computing and applied mathematical algorithms developed with the support of DOE’s Office of Advanced Scientific Computing Research (ASCR) can help us make progress on this frontier,” said Simon Billinge, a physicist at Brookhaven National Laboratory and Columbia University’s School of Engineering and Applied Science. At the SC14 conference, Billinge presented a potential solution that uses mathematical theory and computational tools to extract the information essential for strengthening models of material performance.

“A lot of the models we have for these materials are not robust,” he said. “Ideally, we’d like to be able to program in the properties we want—say, efficient solar energy conversion, superconductivity, massive electrical storage capacity—and have the model spit out the design for a new material that will have that property, but this is clearly impossible with unreliable models.”

Powerful experimental tools such as NSLS-II make more intricate experiments possible. Ironically, though, some of the new techniques make the discovery process more difficult.

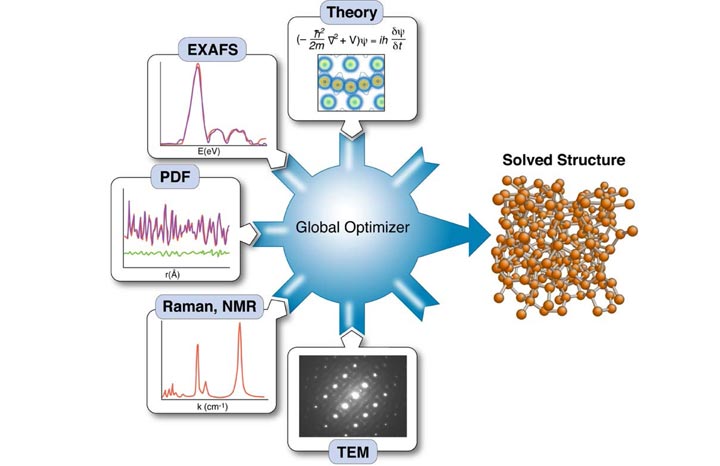

Scientists have demonstrated a way to increase the chances of solving material structures by feeding data from multiple experiments and theory into a "global optimizer" that uses mathematical algorithms to narrow the range of possible solutions based on its analysis of the complementary data sets.

Billinge explains: “Real materials and real applications depend on fine details of materials’ structure such as defects, surfaces, and morphology, so experiments that help reveal fine structural details are essential. But some of the most interesting materials are very complex, and then they are made into even more complex, multi-component devices. When we place these complex devices in an x-ray or neutron-scattering beamline, interactions of the beams with all the complex components produce overlapping results. You are looking for small signals from defects and surfaces hidden in a huge background of other information from the extra components, all of which degrades the useful information.”

At the same time, the models the scientists are building to understand these materials are growing more complex, so they need more information about those essential details to feed into the models, not less. Solving these problems reliably, given all the uncertainties, requires advanced mathematical approaches and high-performance computing, so Billinge and his collaborators are working with ASCR on a two-pronged approach to improve the process.

On the input side, they combine results from multiple experiments (x-ray scattering and neutron scattering) and also from theory. On the output side, the scientists try to reduce what’s called the dimensionality of the model. Billinge likened this to the compression that creates an mp3 music file by shedding nonessential information that most listeners wouldn’t notice is missing.
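
The mp3 analogy can be made concrete with transform coding: express a signal in a basis where most of its content sits in a few coefficients, keep those, and discard the rest. The sketch below uses an orthonormal discrete cosine transform (DCT-II) in plain Python; the function names are hypothetical, and it only illustrates the analogy, not the dimensionality-reduction method Billinge’s team applies to structural models.

```python
import math
from typing import List


def _scale(k: int, n: int) -> float:
    # Normalization that makes the DCT-II orthonormal.
    return math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)


def dct(x: List[float]) -> List[float]:
    """Orthonormal DCT-II of a real sequence."""
    n = len(x)
    return [_scale(k, n) * sum(x[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n))
            for k in range(n)]


def idct(c: List[float]) -> List[float]:
    """Inverse of the orthonormal DCT-II (an orthonormal DCT-III)."""
    n = len(c)
    return [sum(_scale(k, n) * c[k] * math.cos(math.pi * (i + 0.5) * k / n) for k in range(n))
            for i in range(n)]


def compress(x: List[float], keep: int) -> List[float]:
    """Keep only the `keep` largest-magnitude coefficients, zero the rest, reconstruct."""
    c = dct(x)
    ranked = sorted(range(len(c)), key=lambda k: abs(c[k]), reverse=True)
    kept = set(ranked[:keep])
    return idct([c[k] if k in kept else 0.0 for k in range(len(c))])
```

A signal made of a single cosine basis function is reconstructed essentially exactly from one kept coefficient; for rougher signals the reconstruction error grows as fewer coefficients are kept, which mirrors the “good enough” trade-off Billinge describes.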

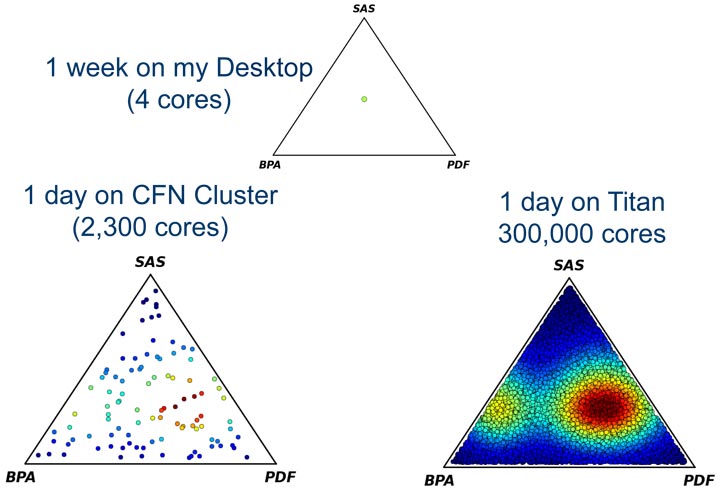

Finding possible solutions for a material's structure by global optimization of a model that incorporates data from multiple experimental techniques is computationally expensive and time-consuming, yet it must be done many times to check whether more than one solution is compatible with the data. Each dot in these triangle diagrams represents a different way of combining inputs from the three experiments, and the color indicates the number of structure solutions found for that combination (red marking combinations that yield the most nearly unique solution). Only by repeating the calculation for many dots can scientists zero in on the most likely common solution that accounts for all the data. It takes high-performance computers to handle the scale of computation needed to give clear results.
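
The procedure in the caption (run a global optimization for many weightings of the inputs and count how many distinct structure solutions each weighting admits) can be sketched on a toy problem. Everything here is an assumption made for illustration: a single hypothetical structural parameter `x`, three invented cost functions standing in for x-ray, neutron, and theory inputs (each of which alone is satisfied equally well by two different values of `x`), and a simple multistart pattern search used in place of whatever global optimizer the Brookhaven team actually runs.

```python
import random
from typing import Callable, List, Tuple

Weights = Tuple[float, float, float]


# Hypothetical costs for one structural parameter x; each alone has two perfect fits.
def cost_xray(x: float) -> float:
    return (x - 2.0) ** 2 * (x + 2.0) ** 2  # zero at x = 2 and x = -2


def cost_neutron(x: float) -> float:
    return (x - 2.0) ** 2 * (x + 1.0) ** 2  # zero at x = 2 and x = -1


def cost_theory(x: float) -> float:
    return (x - 2.0) ** 2 * x ** 2  # zero at x = 2 and x = 0


def local_search(f: Callable[[float], float], x: float,
                 step: float = 0.5, tol: float = 1e-6) -> Tuple[float, float]:
    """Gradient-free pattern search: move to a better neighbour, else halve the step."""
    fx = f(x)
    while step > tol:
        for cand in (x - step, x + step):
            fc = f(cand)
            if fc < fx:
                x, fx = cand, fc
                break
        else:  # neither neighbour improved
            step /= 2.0
    return x, fx


def count_solutions(w: Weights, n_starts: int = 50, seed: int = 0,
                    cost_tol: float = 1e-6, x_tol: float = 1e-3) -> int:
    """Multistart search on the weighted cost; count distinct minima tied for the lowest cost."""
    def combined(x: float) -> float:
        return w[0] * cost_xray(x) + w[1] * cost_neutron(x) + w[2] * cost_theory(x)

    rng = random.Random(seed)
    minima = [local_search(combined, rng.uniform(-3.0, 3.0)) for _ in range(n_starts)]
    best = min(fx for _, fx in minima)
    solutions: List[float] = []
    for x, fx in minima:
        if fx <= best + cost_tol and all(abs(x - s) > x_tol for s in solutions):
            solutions.append(x)
    return len(solutions)


def simplex_grid(n: int) -> List[Weights]:
    """Weight triples (i/n, j/n, k/n) with i + j + k = n: the dots of a ternary diagram."""
    return [(i / n, j / n, (n - i - j) / n)
            for i in range(n + 1) for j in range(n + 1 - i)]
```

In this toy, every dot that mixes at least two inputs yields a single solution at `x = 2`, while the three corners, each using one input alone, yield two. Real structure problems have many parameters, so each dot is far more expensive than here.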

“If we reduce the complexity to minimize the input needed to make the problem solvable, we can run it through powerful high-performance computers that use advanced mathematical methods derived from information theory, uncertainty quantification, and other data analytic techniques to sort through all the details,” he said. The mathematical algorithms can put back together the complementary information from the different experiments—sort of like the parable about the blind men exploring different parts of an elephant, but now sharing and combining their results—and use it to predict complex materials structure.
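
The blind-men-and-elephant point, that experiments which each constrain only part of a structure can jointly pin it down, shows up even in the smallest linear example. In the sketch below, a hypothetical illustration rather than the team’s method, a two-parameter model is probed by one measurement sensitive only to the sum of the parameters and another sensitive only to their difference. A weighted least-squares fit fails on either data set alone but succeeds on the combination. The row format, data, and function name are invented for illustration.

```python
from typing import List, Tuple

# A measurement row: (sensitivity to parameters p0 and p1, measured value, weight).
Row = Tuple[Tuple[float, float], float, float]


def fit_two_params(rows: List[Row]) -> Tuple[float, float]:
    """Weighted least squares for a 2-parameter linear model via the normal equations."""
    s00 = s01 = s11 = t0 = t1 = 0.0
    for (a0, a1), y, w in rows:
        # Accumulate the weighted normal-equation matrix A^T W A and vector A^T W y.
        s00 += w * a0 * a0
        s01 += w * a0 * a1
        s11 += w * a1 * a1
        t0 += w * a0 * y
        t1 += w * a1 * y
    det = s00 * s11 - s01 * s01
    if abs(det) < 1e-12:
        raise ValueError("these data do not determine both parameters")
    # Cramer's rule for the symmetric 2x2 system.
    return (s11 * t0 - s01 * t1) / det, (s00 * t1 - s01 * t0) / det


# Hypothetical true parameters (3, 1): one probe sees p0 + p1, the other p0 - p1.
xray_like: List[Row] = [((1.0, 1.0), 4.0, 1.0)]
neutron_like: List[Row] = [((1.0, -1.0), 2.0, 1.0)]
```

Calling `fit_two_params(xray_like)` raises, because a single probe leaves one combination of the parameters unconstrained, while `fit_two_params(xray_like + neutron_like)` returns `(3.0, 1.0)`.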

“Similar to an mp3 file, there is some missing information in these models. But with the right representation it can be good enough to have predictive value and allow us to design new materials,” Billinge said.

The materials science research program at Brookhaven Lab and operations of CFN and NSLS-II are funded by the DOE Office of Science (BES).

Brookhaven National Laboratory is supported by the Office of Science of the U.S. Department of Energy. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States, and is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.
