GPUs Power GE Code at OLCF Hackathons

Hackathons lead to speedups, increased efficiency for industry

September 19, 2019

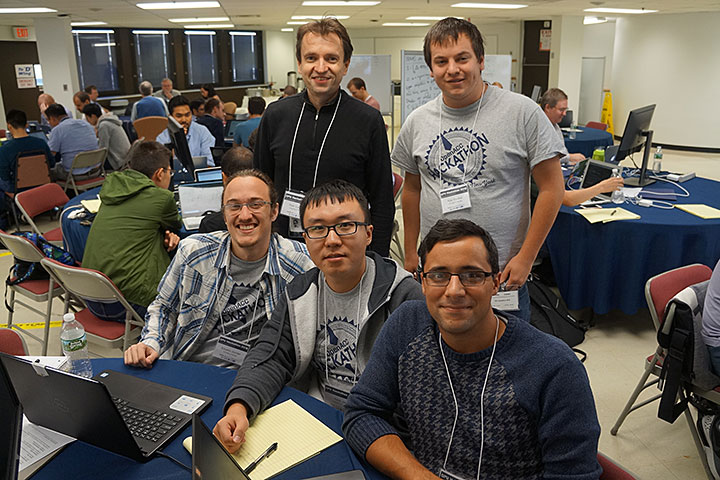

Pictured here are (back row) mentors Piotr Luszczek and Kyle Friedline with (front row) Eduardo Jourdan, Feilin Jia, and Carlos Velez at the Brookhaven National Laboratory Hackathon in September 2018. Image Credit: Brookhaven National Laboratory

This story was originally published on Sept. 12 by Oak Ridge Leadership Computing Facility (OLCF)—a U.S. Department of Energy Office of Science User Facility at Oak Ridge National Laboratory. It describes how research engineers at General Electric (GE) and their colleagues at the University of Kansas (KU) have been leveraging OLCF hackathons to optimize engine turbulence simulation codes to run on graphics processing units (GPUs). The GE and KU researchers attended their very first GPU hackathon at Brookhaven Lab in Sept. 2018. The next GPU hackathon—hosted by Brookhaven’s Computational Science Initiative and jointly organized with Oak Ridge and University of Delaware—will be held from Sept. 23–27, 2019.

The ability to simulate turbulent phenomena using high-performance computing (HPC) can provide industry with important insights for efficient engine design. Second only to the ability to perform these critical simulations is the speed at which they run. If a company can run a model more quickly, the number of possible design iterations increases, ultimately leading to a better end design.

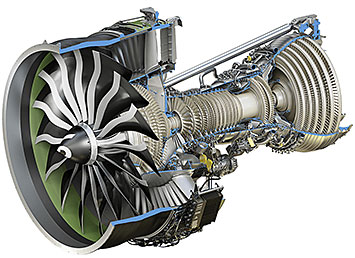

General Electric (GE) has used the leadership-scale HPC resources at the Oak Ridge Leadership Computing Facility (OLCF) since 2010 to determine how to make turbine engines for jets and power plants more energy efficient. A crystal ball of sorts, HPC can give companies such as GE a major competitive boost. The OLCF, a U.S. Department of Energy (DOE) Office of Science User Facility located at DOE's Oak Ridge National Laboratory, is home to the 200-petaflop IBM AC922 Summit, the world's most powerful and smartest supercomputer for open science.

In addition to using the leadership supercomputers at the OLCF, GE recently began leveraging OLCF hackathons, intense workshops in which domain scientists, software engineers, and compiler experts mentor attendees on how to adapt their codes to use GPUs and take full advantage of Summit's large-scale computing capabilities. Carlos Velez, lead research engineer in the Thermo-Sciences Organization at GE's Global Research Center, attended two OLCF hackathons over the past year with University of Kansas (KU) colleagues, ultimately realizing speedups of 50- to 300-fold on GE's GENESIS code and far greater scalability.

Putting the computation in CFD

To understand how an engine will behave, companies such as GE can use computational fluid dynamics (CFD) simulations to predict the behavior of turbulence (the unsteady flow of air or water) around the rows of blades in large gas turbines and jet engines. These kinds of simulations require a massive amount of compute time because they must resolve an extremely wide range of length and time scales.

Even with the competitive speeds and scalability of current commercial CFD software suites—which predominantly run on CPUs—many of GE’s ultimate simulation and design challenges are out of reach. An entire multipassage, multirow turbine with around 1,000 individual blades would require years of continued HPC simulations to complete a single design using a commercial solver.

The GENESIS code, however, can show researchers how turbulence flows down the multiple rows of blades in one of GE's engines or turbines. GENESIS is built on the hp-adaptive Multi-physics Simulation Code (hpMusic) from ZJ Wang, Spahr Professor of Aerospace Engineering at KU.

The CFD calculations involved in such simulations typically employ a type of algorithm called the finite volume method, in which the domain is divided into predetermined spaces called control volumes, and the average of each variable over a control volume is updated from its previous average and from the values in neighboring control volumes.
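To make the finite volume idea concrete, here is a minimal sketch for the simplest possible case, one-dimensional linear advection on a periodic grid with a first-order upwind flux. This is a hypothetical textbook illustration, not code from hpMusic or GENESIS; the function names and the choice of equation and flux are assumptions made for clarity. Each cell keeps a single average, and its update depends only on that previous average and on the fluxes through its two faces, which in turn depend on the neighboring cells.

```python
"""Hypothetical 1D finite volume sketch (not hpMusic/GENESIS code).

Solves linear advection u_t + a * u_x = 0 on a periodic grid.
Each cell stores one average value; its update uses the cell's
previous average and the fluxes through its left and right faces.
"""


def upwind_flux(left: float, right: float, a: float) -> float:
    """First-order upwind flux through a face for advection speed a."""
    return a * left if a >= 0 else a * right


def finite_volume_step(u: list[float], a: float, dx: float, dt: float) -> list[float]:
    """Advance the cell averages u by one explicit time step."""
    n = len(u)
    # faces[i] is the flux through the face between cell i and cell i+1
    # (the last face wraps around to cell 0 because the grid is periodic).
    faces = [upwind_flux(u[i], u[(i + 1) % n], a) for i in range(n)]
    # New average = old average minus the net flux leaving the cell.
    # faces[i - 1] is the cell's left face; for i == 0 it is faces[-1].
    return [u[i] - dt / dx * (faces[i] - faces[i - 1]) for i in range(n)]


if __name__ == "__main__":
    cells = [0.0, 0.0, 1.0, 0.0, 0.0]
    print(finite_volume_step(cells, a=1.0, dx=1.0, dt=0.5))
```

With the CFL number a·dt/dx = 0.5, the single nonzero average spreads into its downstream neighbor while the total over all cells is conserved, which is the defining property of a finite volume update. An explicit scheme like this stays stable only while the CFL number is at most 1.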

But hpMusic/GENESIS operates a little differently. The code uses the flux reconstruction method, which combines the advantages of the finite volume and finite element methods. In a finite element method, the variables are computed at a set of solution points within a predetermined space called an element. Finite element codes are computationally intensive, and most of their operations require only local information, making them well suited to GPUs. If a simulation were a weather event, the finite volume method would be a lone storm chaser, whereas the finite element method would have 100 broadcasters in different locations reporting on the storm.
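The "local information" point can be illustrated with the element-interior step common to high-order element methods: derivatives at an element's solution points are computed from that element's own values alone, using a small differentiation matrix of Lagrange polynomials. The sketch below is a hypothetical illustration, not hpMusic code. It assumes solution points given on a reference interval [-1, 1] and omits the interface correction that flux reconstruction applies using neighboring elements. Because each element's loop touches no other element, a GPU implementation could, in principle, assign elements or solution points to separate threads.

```python
"""Hypothetical element-local derivative sketch (not hpMusic code).

Assumes solution points on the reference interval [-1, 1]. Only the
element-interior step is shown; the flux reconstruction correction at
element interfaces, which needs neighbor data, is omitted.
"""


def lagrange_derivative_matrix(x: list[float]) -> list[list[float]]:
    """D[i][j] = derivative of the j-th Lagrange basis polynomial at x[i]."""
    n = len(x)
    # Barycentric weights w_j = 1 / prod_{k != j} (x_j - x_k).
    w = []
    for j in range(n):
        prod = 1.0
        for k in range(n):
            if k != j:
                prod *= x[j] - x[k]
        w.append(1.0 / prod)
    D = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                D[i][j] = (w[j] / w[i]) / (x[i] - x[j])
        # Rows sum to zero because the derivative of a constant is zero.
        D[i][i] = -sum(D[i][j] for j in range(n) if j != i)
    return D


def element_derivatives(elements: list[list[float]], D: list[list[float]], h: float) -> list[list[float]]:
    """du/dx at each solution point, computed independently per element.

    h is the physical element width; mapping [-1, 1] onto it scales d/dx by 2/h.
    """
    scale = 2.0 / h
    out = []
    for ue in elements:  # each element uses only its own values
        n = len(ue)
        out.append([scale * sum(D[i][j] * ue[j] for j in range(n)) for i in range(n)])
    return out
```

For three solution points at -1, 0, and 1, the resulting quadratic basis differentiates u = x² exactly on an element of width 2, giving 2x at each point.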

The hpMusic/GENESIS code, a powerhouse finite element CFD code, seemed tailor-made for GPUs. But first the researchers had to enable it to run on GPUs at all.

“We didn’t have the experience running on GPUs, and even if we did, GE’s internal GPU resource is not like the OLCF’s Summit,” Velez said. “That’s one of the reasons we were motivated to try to gain access to some of the national laboratory supercomputers and to attend the OLCF hackathons.”

The GE team—in a strategic partnership with KU—decided to take the nonproprietary hpMusic code to an OLCF hackathon with the goal of merging a GPU-accelerated version of it into GE’s internal GENESIS code.

Speed and scale on Summit

The first OLCF hackathon that GE and KU researchers attended was at Brookhaven National Laboratory last fall. Because hpMusic ran solely on CPUs, the team’s goal was to get some part of it—specifically, a computationally intensive portion—to run on GPUs.

The scientists were successful, gaining a hundredfold speedup on a small piece of hpMusic using the CUDA programming model with the help of their hackathon mentors—Piotr Luszczek, research director at the University of Tennessee–Knoxville, and Kyle Friedline, research assistant at the University of Delaware—and NVIDIA representative Maximilian Katz. Over the next year at KU, Wang led a team of students who successfully ported the rest of hpMusic to GPUs.

“Whatever we achieve in hpMusic will be available very soon for GENESIS because of the ongoing partnership,” Wang said. But GE isn’t the only beneficiary. The hpMusic code could be licensed by other corporations and provide speedups to codes beyond GENESIS, such as those used in the aerospace industry.

GE was eager to port the rest of the GENESIS code to GPUs and scale it to run on many more nodes. Through the OLCF’s industry partnership program ACCEL, or Accelerating Competitiveness through Computational Excellence, the GE team applied for and was awarded a Director’s Discretionary allocation of time on Summit. The team has since completed numerous scaling studies.

“Using the GPUs on Summit, we experienced speedups in our scaling studies ranging from 50- to 300-fold,” Velez said. “That’s a month-long simulation done in just over 2 hours. That, for us, is a game changer.”
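As a quick check of the arithmetic in the quote, the sketch below converts a baseline run time and a speedup factor into the accelerated wall-clock time. It assumes a "month-long" run means 30 days of continuous computing; the function name is hypothetical. Under that assumption, the 300-fold end of the reported range gives about 2.4 hours, consistent with "just over 2 hours," while the 50-fold end gives about 14.4 hours.

```python
def accelerated_hours(baseline_days: float, speedup: float) -> float:
    """Wall-clock hours after acceleration, assuming continuous 24-hour days."""
    return baseline_days * 24.0 / speedup


if __name__ == "__main__":
    month_days = 30.0  # assumption: a "month-long" run is 30 days
    for factor in (50.0, 300.0):
        print(f"{factor:.0f}-fold: {accelerated_hours(month_days, factor):.1f} hours")
```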

Velez and Wang said the resulting speedups will allow them to crank out more simulations in the same amount of time or to perform larger, higher-resolution simulations to capture the small scales needed to correctly predict turbulence.

GE's GENESIS solver (right) preserves many more wake details of interest in the flow field compared with a commercial solver (left). Image Credit: University of Kansas

“We’re used to simulations that are fast but very inaccurate,” Velez said. “Now we can get better predictive accuracy and higher resolution, which are needed for the kind of physics we’re trying to resolve. And we do it within a run time that fits very nicely within our design cycle.”

The GE researchers were eager to attend another hackathon to continue gaining experience for further code optimization.

“People were asking me, ‘Hey, when’s the second one? When are you going to the second one?’ It was a pull. There was no push needed,” Velez said.

So in June, the GE/KU team attended a second OLCF hackathon at MIT to further optimize hpMusic. To improve the code’s scaling efficiency, the team simultaneously increased its number of jobs and the number of GPUs running those jobs.

New avenues with GPUs

For Velez and Wang, the benefits of the hackathons have been multifaceted.

“The most difficult part has been learning the concepts behind GPU programming,” Velez said. “But once we absorbed the major learnings from these hackathons, we spread these concepts throughout hpMusic and subsequently GENESIS.”

It took time, but now GE can perform much more detailed high-fidelity simulations, giving the company a competitive advantage in the turbine market.

“The speedup obtained accelerating GENESIS by GPUs will change our intractable design challenges from far-distant goals to near-term simulation capabilities,” Velez said. “The hackathons have catapulted our ability to use GPUs, and that is helping us design more efficient turbines and jet engines, which has a direct impact on our competitiveness.”

The OLCF hackathons have also demonstrated the benefits of investing in GPU computing.

Dave Kepczynski, chief information officer for GE Research, said, “This hackathon provided us with invaluable performance data, demonstrating a 50- to 300-fold speedup and underscoring the importance of investing in GPUs and GPU-accelerated codes as we finalize the design for our next generation of internal HPC systems.”

Eric Tucker, senior director of Digital Technologies at GE Research, stated, "If done well, GPU-accelerated software can lead directly to higher-fidelity simulations and the ability to either increase the number of design alternatives or reduce the overall time associated with new product development."

The team plans to attend at least one hackathon each year to leverage the mentors’ experience and expertise to help optimize the codes further.

“At the hackathons, we are learning from seasoned computer scientists who have been with GPUs from the beginning,” Velez said. “Their expertise is part of what makes these events truly invaluable.”

Contributors to this project include Friedline, Luszczek, Velez, Wang, Feilin Jia, Eduardo Jourdan, and Dheeraj Kapilavai.

UT-Battelle LLC manages Oak Ridge National Laboratory for DOE’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.

Brookhaven National Laboratory is supported by the Office of Science of the U.S. Department of Energy. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States, and is working to address some of the most pressing challenges of our time. For more information, please visit https://energy.gov/science.
