Brookhaven Lab Hosts "Brookathon," a Five-Day GPU Hackathon

Teams of researchers, students, and software developers accelerate their scientific applications with graphics processing units (GPUs) for high-performance computing

July 5, 2017

From June 5 through 9, Brookhaven Lab's Computational Science Initiative hosted "Brookathon"—a hackathon (a combination of the words "hack" and "marathon" that describes the nonstop and exploratory nature of the programming event) focused on graphics processing units (GPUs). By offloading computationally intensive portions of an application code from the main central processing unit (CPU), GPUs allow applications to run much faster—an important capability for analyzing large data sets and running large numerical simulations. The 10 teams that attended Brookathon brought their own science codes to accelerate and were each mentored by two GPU experts.

On June 5, coding “sprinters”—teams of computational, theoretical, and domain scientists; software developers; and graduate and postdoctoral students—took their marks at the U.S. Department of Energy’s (DOE) Brookhaven National Laboratory, beginning the first of five days of nonstop programming from early morning until night. During this coding marathon, or “hackathon,” they learned how to program their scientific applications on devices for accelerated computing called graphics processing units (GPUs). Guiding them toward the finish line were GPU programming experts from national labs, universities, and technology companies who donated their time to serve as mentors. The goal by the end of the week was for the teams new to GPU programming to leave with their applications running on GPUs—or at least with the knowledge of how to do so—and for the teams who had come with their applications already accelerated on GPUs to leave with an optimized version.

The era of GPU-accelerated computing

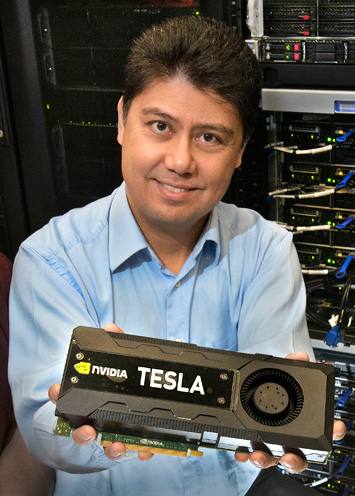

Nicholas D'Imperio, chair of Brookhaven Lab's Computational Science Laboratory, holds a graphics processing unit (GPU) made by NVIDIA.

While GPUs can offer very high memory bandwidth (the rate at which a processor can store data in and read data from memory) and arithmetic performance for a wide range of applications, they are currently difficult to program. One challenge is that developers cannot simply take existing code that runs on a CPU and have it automatically run on a GPU; they need to rewrite or adapt portions of the code. Another challenge is getting data onto the GPUs efficiently in the first place, as data transfer between the CPU and GPU can be quite slow. Though parallel programming standards such as OpenACC and GPU advances such as hardware and software for managing data transfer make these processes easier, GPU-accelerated computing is still a relatively new concept.
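To make the two challenges above concrete, here is a minimal sketch in C of what adapting a loop with OpenACC directives can look like. It is an illustration only, not code from any Brookathon team; the function names `saxpy` and `saxpy_repeated` are hypothetical. The first function marks a loop for offloading and states which arrays must be copied to and from the GPU. The second wraps repeated work in a data region so the arrays are transferred once rather than on every pass.

```c
#include <stddef.h>

/* Hypothetical example: y = a*x + y.
   With an OpenACC-capable compiler, the directive requests that the loop
   run on the accelerator: x is copied in, y is copied in and back out.
   Compilers without OpenACC support ignore the pragma and run on the CPU. */
void saxpy(int n, float a, const float *x, float *y)
{
    #pragma acc parallel loop copyin(x[0:n]) copy(y[0:n])
    for (int i = 0; i < n; ++i) {
        y[i] = a * x[i] + y[i];
    }
}

/* Hypothetical example: repeat the same update `steps` times.
   The enclosing data region moves x and y to the device once, so the
   inner loops reuse the device copies instead of transferring each time. */
void saxpy_repeated(int n, float a, const float *x, float *y, int steps)
{
    #pragma acc data copyin(x[0:n]) copy(y[0:n])
    {
        for (int s = 0; s < steps; ++s) {
            #pragma acc parallel loop present(x[0:n], y[0:n])
            for (int i = 0; i < n; ++i) {
                y[i] = a * x[i] + y[i];
            }
        }
    }
}
```

Because the directives are pragmas, the same source still builds and gives the same results with an ordinary C compiler. Whether offloading actually pays off depends on the problem size and on how much data has to move between CPU and GPU.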

A hackathon with a history

Here’s where “Brookathon,” hosted by Brookhaven Lab’s Computational Science Initiative (CSI) and jointly organized with DOE’s Oak Ridge National Laboratory, Stony Brook University, and the University of Delaware, came in.

“The architecture of GPUs, which were originally designed to display graphics in video games, is quite different from that of CPUs,” said CSI computational scientist Meifeng Lin, who coordinated Brookathon with the help of an organizing committee and was a member of one of the teams participating in the event. “People are not used to programming GPUs as much as CPUs. The goal of hackathons like Brookathon is to lessen the learning curve, enabling the use of GPUs on next-generation high-performance-computing (HPC) systems for scientific applications.”

Brookathon is the latest in a series of GPU hackathons that began in 2014 at the Oak Ridge Leadership Computing Facility (OLCF), a DOE Office of Science User Facility that is home to the nation’s most powerful science supercomputer, Titan, and other hybrid CPU-GPU systems. So far, OLCF’s Fernanda Foertter, an HPC user support specialist and programmer, has helped organize and host 10 hackathons across the United States and abroad, including Brookathon and one at the Jülich Supercomputing Centre in Germany earlier this year.

Members of the organizing committee explain the motivation behind Brookathon and the other hackathons in the series, and participants and mentors discuss their experiences.

“Hackathons are intense team-based training events,” said Foertter. “The hope is that the teams go home and continue to work on their codes.”

The idea to host a hackathon at Brookhaven started in May 2016, when Lin and Brookhaven colleagues attended their first GPU hackathon, hosted at the University of Delaware. There, they worked on a code for lattice quantum chromodynamics (QCD) simulations, which help physicists understand the interactions between particles called quarks and gluons. But in using the OpenACC programming standard, they realized it did not sufficiently support the C++ programming language in which their code library was written. Around this time, Brookhaven became a member of OpenACC so that CSI scientists could help shape the standard to include the features needed to support their codes on GPUs. Through the University of Delaware hackathon and weekly calls with OpenACC members, Lin came into contact with Foertter and Sunita Chandrasekaran, an assistant professor of computer science at the University of Delaware who organized that hackathon, both of whom were on board with bringing a hackathon to Brookhaven.

“Brookhaven had just gotten a computing cluster with GPUs, so the timing was great,” said Lin. “In CSI’s Computational Science Laboratory, where I work, we get a lot of requests from scientists around Brookhaven to get their codes to run on GPUs. Hackathons provide the intense hands-on mentoring that helps to make this happen.”

Teams from near and far

A total of 22 applications were submitted for a spot at Brookathon, half of which came from Brookhaven Lab or nearby Stony Brook University teams. According to Lin, Brookathon received the highest number of applications of any of the hackathons to date. Ultimately, a review committee of OpenACC members accepted applications from 10 teams, each of which brought a different application to accelerate on GPUs:

- Team AstroGPU from Stony Brook University: codes for simulating astrophysical fluid flows

- Team Grid Makers from Brookhaven, Fermilab, Boston University, and the University of Utah (Lin’s team): a multigrid solver for linear equations and a general data-parallel library (called Grid), both related to application development for lattice QCD under DOE’s Exascale Computing Project

- Team HackDpotato from Stony Brook University: a genetic algorithm for protein simulation

- Team Lightning Speed OCT (for optical coherence tomography) from Lehigh University: a program for real-time image processing and three-dimensional image display of biological tissues

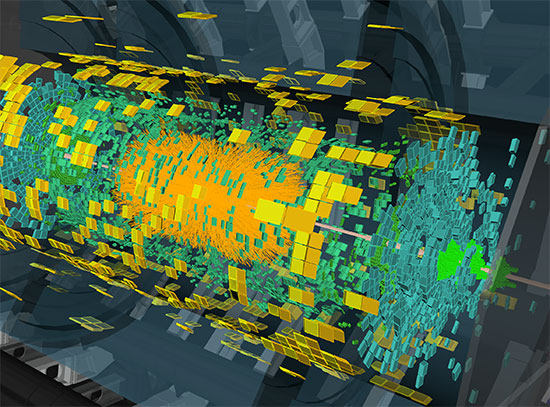

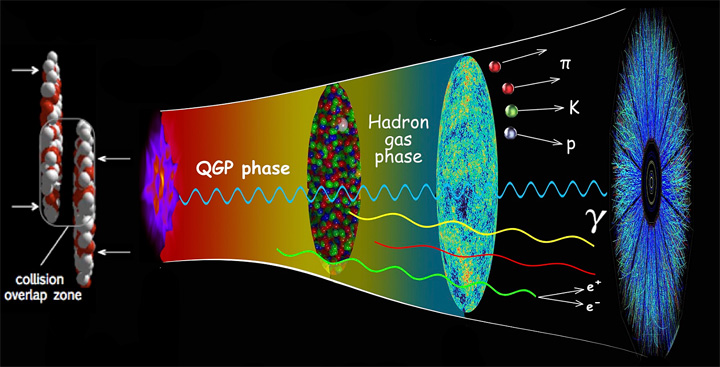

- Team MUSIC (for MUScl for Ion Collision) from Brookhaven and Stony Brook University: a code for simulating the evolution of the quark-gluon plasma produced at Brookhaven’s Relativistic Heavy Ion Collider (RHIC)—a DOE Office of Science User Facility

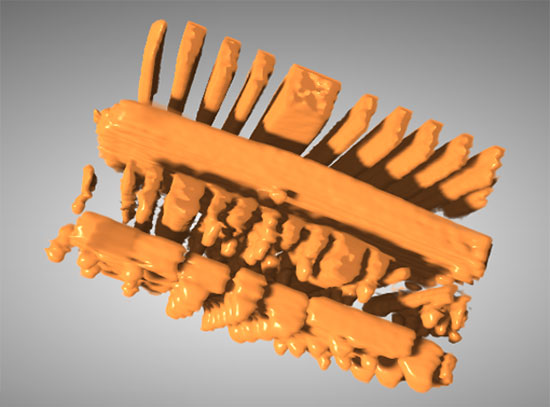

The MUSIC code package helps scientists simulate what happens when two nuclei collide at nearly the speed of light, as illustrated in the above figure. Immediately following the collision, the system is heated to an extremely high temperature, resulting in the creation of a state of matter that behaves as an almost-perfect liquid: the quark-gluon plasma (QGP) that was thought to have filled our universe microseconds after the Big Bang. As the system expands and cools, the nuclear matter evolves from the QGP phase to the hadron (a kind of composite particle composed of quarks and gluons) gas phase and eventually freezes out into particles that fly to detectors, where various properties of the particles are measured. MUSIC's numerical simulations allow scientists to "rewind" these measurements to the early stage of the collisions and study the QGP's transport properties (e.g., viscosity, heat conductivity). Programming MUSIC on GPUs will enable more quantitative comparisons with experimental data from Brookhaven's Relativistic Heavy Ion Collider.

- Team NEK/CEED from DOE’s Argonne National Laboratory, the University of Minnesota, and the University of Illinois Urbana-Champaign: fluid dynamics and electromagnetic codes (Nek5000 and NekCEM, respectively) for modeling small modular reactors (SMR) and graphene-based surface materials—related to two DOE Exascale Computing Projects, Center for Efficient Exascale Discretizations (CEED) and ExaSMR

- Team Stars from the STAR from Brookhaven, Central China Normal University, and Shanghai Institute of Applied Physics: an online cluster-finding algorithm for the energy-deposition clusters measured at Brookhaven’s Solenoidal Tracker at RHIC (STAR) detector, which searches for signatures of the quark-gluon plasma

- Team The Fastest Trigger of the East from the UK’s Rutherford Appleton Laboratory, Lancaster University, and Queen Mary University of London: software that reads out data in real time from 40,000 photosensors that collect light generated by neutrino particles, discards the useless majority of the data, and sends the useful bits to be written to disk for future analysis; the software will be used in a particle physics experiment in Japan (Hyper-Kamiokande)

- Team UD-AccSequencer from the University of Delaware: a code for an existing next-generation-sequencing tool for aligning thousands of DNA sequences (BarraCUDA)

- Team Uduh from the University of Delaware and the University of Houston: a code for molecular dynamics simulations, which scientists use to study the interactions between molecules

Each of the teams participating in the hackathon sat at a large round table to facilitate collaboration.

“The domain scientists—not necessarily computer science programmers—who come together for five days to migrate their scientific codes to GPUs are very excited to be here,” said Chandrasekaran. “From running into compiler and runtime errors during programming and reaching out to compiler developers for help to participating in daily scrum sessions to provide progress updates, the teams really have a hands-on experience in which they can accomplish a lot in a short amount of time.”

An intense week of mentoring

Each team had at least three members and worked on porting their applications to GPUs for the first time or optimizing applications already running on GPUs. As is the case in all of the hackathons, participants did not need to have prior GPU programming experience to attend the event. Two mentors were assigned to each team in the weeks preceding the hackathon to help the participants prepare. In addition to Brookhaven, mentors represented Cornell University; DOE’s Los Alamos, Sandia, and Oak Ridge national laboratories; Mentor Graphics Corporation; NVIDIA Corporation (also the top sponsor of the event); the Swiss National Supercomputing Centre; the University of Delaware; the University of Illinois; and the University of Tennessee, Knoxville.

The Brookathon mentors came from as close as Brookhaven Lab and as far away as the Swiss National Supercomputing Centre. Fernanda Foertter (standing, leftmost) from Oak Ridge National Laboratory has helped organize and host Brookathon and nine other hackathons since 2014. Brookhaven computational scientist Meifeng Lin (sitting, frontmost) coordinated Brookathon with the help of Foertter and the rest of the organizing committee, which consisted of Sunita Chandrasekaran (University of Delaware), Barbara Chapman (Brookhaven Lab/Stony Brook University), and Tony Curtis (Stony Brook University).

“You meet GPU experts at conferences but here you sit with them for a whole week as they share their expertise in a hands-on setting,” said Lin. “Because GPU computing is still fairly new to Brookhaven, we did not have a lot of local experts that could serve as mentors. We were fortunate to have Fernanda and Sunita help recruit such a great group of mentors.”

Many of the mentors who volunteered for Brookathon have developed GPU-capable compilers (computer programs that transform source code written in one programming language into instructions that computer processors can understand) and have helped define programming standards for HPC.

Yet they too can appreciate the difficulty in programming scientific applications on GPUs, as mentor Kyle Friedline, a research assistant in Chandrasekaran’s Computational Research and Programming Lab at the University of Delaware, noted: “My team’s code is really tough because of its large size and complex data structures that result in memory allocation problems.”

While most of the teams had prior experience in GPU programming, a few had to start with the basics. Especially for those novice teams, mentorship was key.

“All of our group members were new to GPU programming,” said MUSIC team member Chun Shen, a research associate in Brookhaven’s Nuclear Theory Group. “Our code was originally written in the C++ programming language with a rather complex class structure. We found that it was very hard to port the complex data structures to GPU with OpenACC, and the compiler did not provide us with useful error messages. Only with the support of our direct mentors and through fruitful discussions with other teams’ mentors were we able to simplify our code structure and successfully port our code to GPU within such a short amount of time.”

At the end of each day, team representatives gave presentations to the entire group so that anyone could chime in to offer advice, as many teams shared common challenges. On the last day, the teams gave final presentations describing their accomplishments over the week, lessons learned along the way, and plans going forward.

Continuing the GPU hackathon tradition

“The teams worked really hard with their mentors and accomplished a lot in five days,” said Lin. “By the end of the week, all 10 teams had their codes running on GPUs and eight of them achieved code speedups, as much as 150-fold, over the original codes. Even the mentors felt that they learned something, and some already expressed interest in serving again at future hackathons.”

To accommodate teams that were not admitted to Brookathon, Stony Brook University’s Institute for Advanced Computational Science, in partnership with NVIDIA, hosted a three-day mini GPU hackathon from June 26 through 28. Three more GPU hackathons in the regular series are already scheduled for 2017: at the National Aeronautics and Space Administration in August, the Swiss National Supercomputing Centre in September, and OLCF in October. In the meantime, the teams will continue porting their applications to GPUs.

Going forward, CSI plans to continue offering similar hands-on workshops as part of its initiative to tackle big data challenges.

“Brookhaven Lab faces tremendous challenges in processing and interpreting the increasing volumes of experimental, observational, and computational data that scientists are generating—this year, we expect to analyze more than 500 petabytes of scientific results,” said CSI Director Kerstin Kleese van Dam. “It is paramount to make optimal use of available novel architectures such as GPUs to meet these challenges. To help us in this endeavor, we are actively engaged in building communities of practice through events such as this hackathon and our annual New York Scientific Data Summit, which in 2017 will feature for the first time a session on performance for big data. We expect to host more events of this type in the future.”

Brookhaven National Laboratory is supported by the Office of Science of the U.S. Department of Energy. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States, and is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.

2017-12273 | INT/EXT | Newsroom