Successful Deployment of Equipment to New Scientific Data & Computing Center

New location, new infrastructure, and new design bring new challenges and opportunities

April 11, 2022

Paul Metz (Technology Engineer), Enrique Garcia (Technology Customer Support Analyst), Imran Latif (Manager, Infrastructure & Ops), and John McCarthy (Technology Architect) stand in the Main Data Hall of the new Scientific Data and Computing Center.

Packing up and moving is an arduous task. Now imagine factoring in large pieces of costly, sensitive equipment, unpredictable weather events, and a global pandemic! These were just a few of the challenges facing the Scientific Data and Computing Center (SDCC) at the U.S. Department of Energy’s (DOE) Brookhaven National Laboratory as a team transported computing and networking hardware from its longstanding base in Building 515 to an upgraded facility housed in Building 725. While relocating this mission-critical equipment, the team also had to deploy new hardware at the same time, including five new racks and an advanced cooling system.

Such an effort was well worth it to turn the former National Synchrotron Light Source building into a state-of-the-art data storage and computing facility. The additional space and adaptable infrastructure in the new facility will be crucial to providing the data solutions of tomorrow, while seamlessly supporting the ongoing science of today.

The SDCC handles vast amounts of data with minimal downtime for several major research projects within and outside of Brookhaven Lab. “Those experiments can’t tolerate significant downtime in access to our computing resources,” explained Imran Latif, SDCC’s manager of infrastructure and operations. “To get this move done, we needed the cooperation of several teams and their unique areas of expertise.”

More science, more data

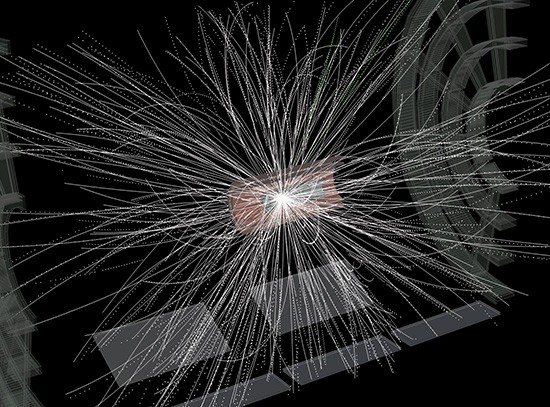

The original SDCC, formerly known as the RHIC and ATLAS Computing Facility, has grown and adapted to meet the Laboratory’s needs over the course of several decades. Its computers have played a crucial role in storing, distributing, and analyzing data for experiments at the Relativistic Heavy Ion Collider (RHIC)—a DOE Office of Science user facility for nuclear physics research at Brookhaven—as well as the ATLAS experiment at the Large Hadron Collider (LHC) at CERN in Switzerland and the Belle II experiment at Japan’s SuperKEKB particle accelerator. As research expands and accelerates at these facilities, so too does the volume of data. As of 2021, SDCC had stored ~215 petabytes (PB) of data from these experiments. If you were to translate that amount of data into full, high-definition video, it would take more than 700 years to watch through all of it continuously!
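
For readers who want to check the video comparison, here is a rough back-of-the-envelope sketch. It is not the calculation the SDCC used. It assumes decimal petabytes (10^15 bytes) and a 365.25-day year, and the function names are made up for illustration. It works out the average video bitrate at which ~215 PB would last exactly 700 years of continuous playback. Any stream with a lower bitrate would take even longer to watch.

```python
# Back-of-the-envelope check of the "700 years of video" comparison.
# The 215 PB and 700-year figures come from the article; everything else
# (decimal petabytes, year length, function names) is an assumption.

PETABYTE = 10**15                       # bytes, assuming decimal (SI) units
SECONDS_PER_YEAR = 365.25 * 24 * 3600   # average year, including leap days


def implied_bitrate_mbps(total_bytes, years):
    """Average bitrate (Mbit/s) at which total_bytes fills `years` of playback."""
    seconds = years * SECONDS_PER_YEAR
    return total_bytes * 8 / seconds / 1e6


def playback_years(total_bytes, bitrate_mbps):
    """Years of continuous playback that total_bytes holds at a given bitrate."""
    seconds = total_bytes * 8 / (bitrate_mbps * 1e6)
    return seconds / SECONDS_PER_YEAR


stored = 215 * PETABYTE
break_even = implied_bitrate_mbps(stored, 700)
print(f"Bitrate at which 215 PB lasts 700 years: {break_even:.1f} Mbit/s")

# A hypothetical 10 Mbit/s stream, chosen only as an example value.
print(f"Playback time at 10 Mbit/s: {playback_years(stored, 10):.0f} years")
```

Under these assumptions the break-even bitrate comes out a little under 80 Mbit/s, so "more than 700 years" holds for any video stream below that rate.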

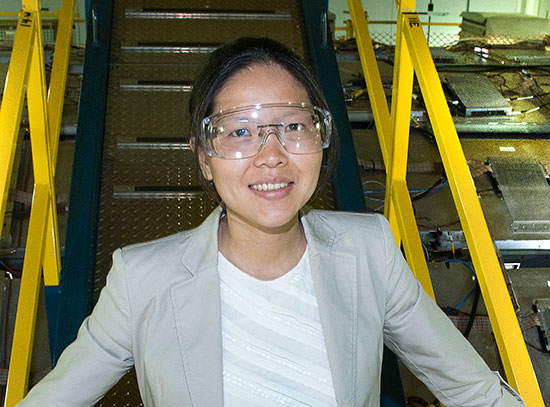

Imran Latif displays the rear door heat exchanger in a rack located within the Main Data Hall of Building 725.

At SDCC, all of the hardware components used to store and process data are stacked in specialized cabinet "racks" laid out in aisles, much like the shelves in a supermarket. Large, powerful pieces of computing equipment emit a lot of heat. You’ve probably felt the heat generated from a small laptop computer, and a typical PC is capable of generating at least twice that amount. So, you can imagine how hot an entire data center could get. The arrangement of server racks in rows helps maximize the airflow between them, but building these aisles out requires ample space. This has been a significant problem in Building 515, where the limited size, non-uniform layout, and aging infrastructure have presented major challenges to scaling up.

The Main Data Hall that is being assembled in Building 725, where the equipment that made up the old synchrotron used to reside, has plenty of room to adapt and grow. Its modular layout also features a new power distribution system that can feed various racks of equipment with differing power demands. This allows equipment to be moved and reorganized almost anywhere within the data hall, if needed, to accommodate future projects.

As the SDCC grows, an important goal is to implement measures that will reduce the economic and ecological impacts of day-to-day operations. One way of achieving this is through more efficient cooling measures.

The original SDCC relied solely on a cooling system that circulates chilled air throughout an entire room via venting in the floor. The new facility, in contrast, uses a state-of-the-art system based on rear door heat exchangers (RDHx), which is a more modern and efficient option for cooling at this scale. RDHx systems are fixed directly to the back of each computing server rack, using chilled water to dissipate heat efficiently near the source. This system of direct heat control allows for servers to be clustered closer together, maximizing the use of the space.
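
To give a feel for why chilled water works well so close to the heat source, the sketch below applies the standard heat-balance relation (heat removed = mass flow × specific heat × temperature rise). The rack heat load and water temperature rise are hypothetical values chosen for illustration. They are not specifications of the SDCC's RDHx units, and the function name is likewise made up.

```python
# Rough estimate of the chilled-water flow needed to carry away a rack's heat.
# Uses Q = m_dot * c_p * delta_T. The 30 kW load and 10 K temperature rise
# are hypothetical example values, not figures from the SDCC.

WATER_SPECIFIC_HEAT = 4186.0  # J/(kg*K), approximate value for liquid water


def water_flow_kg_per_s(heat_load_watts, delta_t_kelvin):
    """Mass flow of water (kg/s) that absorbs heat_load_watts while warming by delta_t_kelvin."""
    if delta_t_kelvin <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_load_watts / (WATER_SPECIFIC_HEAT * delta_t_kelvin)


flow = water_flow_kg_per_s(30_000, 10)  # hypothetical 30 kW rack, 10 K rise
print(f"Required flow: {flow:.2f} kg/s (about {flow * 60:.0f} liters per minute)")
```

Water absorbs far more heat per unit volume than air, so a modest flow through the door can handle what would otherwise take a very large volume of chilled air.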

A team effort

The process of receiving and installing sophisticated equipment, as well as building new infrastructure to house that equipment, relied on the cooperation and expertise of both internal laboratory staff and external vendors. Tight timelines needed to stretch in order to factor in safety measures, including keeping staff numbers low during the pandemic. The uncertainty of travel also complicated the process for vendors overseas, including UK vendor USystems, which provided the SDCC’s new cooling system.

The project hit three months of delays, but the group was determined to stay on track with each experiment’s schedule. ATLAS was a particular priority: it was a race to be ready for Run 3 of the LHC as its start date was being finalized.

Mother Nature intervened as well.

“We were starting this all in the beginning of some intense weather last spring,” Latif said. He recalled how one particular part of the project—moving and installing several existing racks alongside five new ones scheduled for delivery—had to be done in the middle of a storm within a tight, 72-hour window. The weather event could have blocked out an entire day if they hadn’t gotten ahead of it.

“We all sat down together, the whole group, and we took a consensus on whether we could do this or not,” Latif recalled. “We took a calculated risk, while coordinating with our vendor from the UK.” With research, careful planning, and a little luck, “we were able to get everything inside the building before the worst of the storm hit.”

Setting up the new infrastructure and settling the hardware into its new home also required ample support from Brookhaven’s Facilities and Operations (F&O) team. Riggers, carpenters, and plumbers played an integral role in ensuring that the new space was ready in a timely manner.

“Without their hard work, this wouldn’t be possible; it was a team effort,” Latif said.

The path forward

Though the majority of the SDCC will now be situated in Building 725, the legacy data center will still have a purpose. As the migration winds down in the fall of 2023, components of the Building 515 facility will find new life as a hub for secondary data storage. The scale and functionality will remain limited for the foreseeable future, with only the legacy tape libraries and their associated servers remaining there.

Meanwhile, the relocated SDCC has an exciting year ahead. The physicists involved in the ATLAS experiment will resume collecting data for the LHC’s third run, taking advantage of the upgraded data center’s resources. There will also be plenty of data to analyze from nuclear physics experiments at RHIC, including the resumption of data collection from STAR and the upcoming sPHENIX detector. There are even plans to migrate data from experiments being run on the beamlines at the National Synchrotron Light Source II (NSLS-II), another DOE Office of Science user facility. And, eventually, SDCC will take on data from the Electron-Ion Collider (EIC).

Latif distilled the goal of the new SDCC facility into three succinct principles: “adaptability, scalability, and efficiency.” Designing with the future in mind opens up an exciting array of possibilities.

Upgrades to SDCC were supported by the DOE Office of Science. Operations at NSLS-II and RHIC are also supported by the Office of Science.

Brookhaven National Laboratory is supported by the Office of Science of the U.S. Department of Energy. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit science.energy.gov.

Follow @BrookhavenLab on Twitter or find us on Facebook.
