Brookhaven Lab Advances its Computational Science and Data Analysis Capabilities

Using leading-edge computer systems and participating in computing standardization groups, Brookhaven will enhance its ability to support data-driven scientific discoveries

November 18, 2016

Members of the commissioning team—(from left to right) Imran Latif, David Free, Mark Lukasczyk, Shigeki Misawa, Tejas Rao, Frank Burstein, and Costin Caramarcu—in front of the newly installed institutional computing cluster at Brookhaven Lab's Scientific Data and Computing Center.

History of scientific computing at Brookhaven

Brookhaven Lab has a long-standing history of providing computing resources for large-scale scientific programs. For more than a decade, scientists have been using data analytics capabilities to interpret results from the STAR and PHENIX experiments at the Relativistic Heavy Ion Collider (RHIC), a DOE Office of Science User Facility at Brookhaven, and the ATLAS experiment at the Large Hadron Collider (LHC) in Europe. Each second, millions of particle collisions occur at RHIC and billions at the LHC; together, these experiments have produced hundreds of petabytes of data about the collision events and the emergent particles. (One petabyte is equivalent to approximately 13 years of HDTV video, or nearly 60,000 movies.) More than 50,000 computing cores, 250 computer racks, and 85,000 magnetic storage tapes store, process, and distribute these data, which help scientists understand the basic forces that shaped the early universe. Brookhaven’s tape archive for storing data files is the largest in the United States and the fourth largest worldwide. As the U.S. ATLAS Tier 1 computing center, the largest ATLAS Tier 1 center worldwide, Brookhaven provides about 25 percent of the total computing and storage capacity for the LHC’s ATLAS experiment, receiving and delivering approximately 200 terabytes of data (picture 62 million photos) to more than 100 data centers around the world each day.
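The size comparisons in this paragraph can be sanity-checked with simple arithmetic. This sketch assumes decimal units (1 PB = 10^15 bytes, 1 TB = 10^12 bytes), which the article does not specify, and back-computes the HDTV bit rate, movie size, and photo size that the comparisons imply.

```python
# Back-of-the-envelope check of the data-size comparisons in the text.
# Assumption: decimal (SI) units, i.e. 1 PB = 10**15 bytes and 1 TB = 10**12 bytes.
PETABYTE = 10**15
TERABYTE = 10**12
SECONDS_PER_YEAR = 365.25 * 24 * 3600

def implied_bitrate_mbps(total_bytes: float, years: float) -> float:
    """Average bit rate (megabits per second) needed to fill total_bytes in the given time."""
    return total_bytes * 8 / (years * SECONDS_PER_YEAR) / 1e6

def bytes_per_item(total_bytes: float, items: float) -> float:
    """Average size of one item when total_bytes is split evenly across items."""
    return total_bytes / items

hdtv_mbps = implied_bitrate_mbps(PETABYTE, 13)         # 1 PB ~ 13 years of HDTV
movie_gb = bytes_per_item(PETABYTE, 60_000) / 1e9      # 1 PB ~ 60,000 movies
photo_mb = bytes_per_item(200 * TERABYTE, 62e6) / 1e6  # 200 TB ~ 62 million photos

print(f"HDTV bit rate: {hdtv_mbps:.1f} Mbit/s")
print(f"Movie size:    {movie_gb:.1f} GB")
print(f"Photo size:    {photo_mb:.1f} MB")
```

Under these assumptions the figures work out to roughly 19.5 Mbit/s (close to the roughly 19.4 Mbit/s of U.S. over-the-air ATSC HDTV broadcasts), about 16.7 GB per movie, and about 3.2 MB per photo, so the comparisons are mutually consistent.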

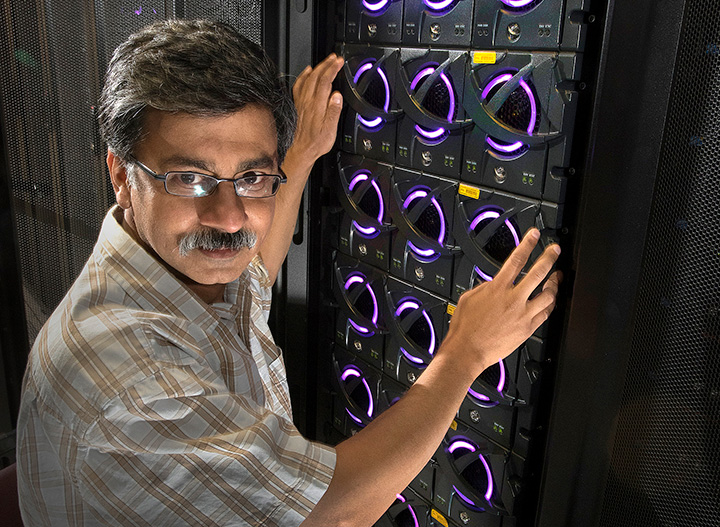

Physicist Srinivasan Rajagopalan with hardware located at Brookhaven Lab that is used to support the ATLAS particle physics experiment at the Large Hadron Collider of CERN, the European Organization for Nuclear Research.

Brookhaven Lab has a more recent history of operating high-performance computing clusters specifically designed for applications involving heavy numerical calculations. In particular, Brookhaven has been home to some of the world’s most powerful supercomputers, including three generations of New York State–funded supercomputers from the IBM Blue Gene series. One generation, New York Blue/L, debuted at number five on the June 2007 TOP500 list of the world’s fastest computers. With these high-performance computers, scientists have made calculations critical to research in biology, medicine, materials science, nanoscience, and climate science.

New York Blue/L is a massively parallel supercomputer that Brookhaven Lab acquired in 2007. At the time, it was the fifth most powerful supercomputer in the world. It was decommissioned in 2014.

Advanced tools for solving large, complex scientific problems

Brookhaven is now revitalizing its capabilities for computational science and data analysis so that scientists can more effectively and efficiently solve scientific problems.

“All of the experiments going on at Brookhaven’s facilities have undergone a technological revolution to some extent,” explained Kerstin Kleese van Dam, director of Brookhaven’s Computational Science Initiative (CSI). This is especially true of the state-of-the-art National Synchrotron Light Source II (NSLS-II) and the Center for Functional Nanomaterials (CFN), both DOE Office of Science User Facilities at Brookhaven, each of which continues to attract more users and experiments.

“The scientists are detecting more things at faster rates and in greater detail. As a result, we have data rates that are so big that no human could possibly make sense of all the generated data—unless they had something like 500 years to do so!” Kleese van Dam continued.

In addition to analyzing data from experimental user facilities such as NSLS-II, CFN, RHIC, and ATLAS, scientists run numerical models and computer simulations of the behavior of complex systems. For example, they use models based on quantum chromodynamics theory to predict how elementary particles called quarks and gluons interact. They can then compare these predictions with the interactions observed at RHIC, where quarks and gluons are set free when ions are smashed together at nearly the speed of light. Other models support materials science, where researchers study materials with unique properties, such as strong electron interactions that give rise to superconductivity, magnetic ordering, and other phenomena. Still others support chemistry, where researchers calculate the structures and properties of catalysts and other molecules involved in chemical processes.

To support these computationally intensive tasks, Brookhaven recently installed at its Scientific Data and Computing Center a new institutional computing system from Hewlett Packard Enterprise (HPE). This institutional cluster—a set of computers that work together as a single integrated computing resource—initially consists of more than 100 compute nodes with processing, storage, and networking capabilities.

Each node includes both central processing units (CPUs), the general-purpose processors commonly referred to as the “brains” of the computer, and graphics processing units (GPUs), processors optimized to perform many specialized calculations in parallel.

The nodes have error-correcting code (ECC) memory, a type of memory that detects and corrects data corruption caused, for example, by voltage fluctuations on the computer’s motherboard. This error-correcting capability is critical to ensuring the reliability of Brookhaven’s scientific data. The data themselves are spread across multiple hard disks so that they can be read and written more efficiently and securely, and file-system software organizes them into named units called “files” so they can be easily found, much as paper documents are sorted into labeled folders.

Communication between nodes is enabled by a network that can send and receive data at 100 gigabits per second, a rate fast enough to copy a Blu-ray disc in mere seconds, with less than a microsecond of delay (latency) between transfers.
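Error-correcting code memory works by storing a few extra check bits alongside the data. Server ECC memory typically uses a wider single-error-correcting, double-error-detecting (SECDED) code over 64-bit words, and the article does not say which code these nodes use. The classic Hamming(7,4) code below is a minimal, illustrative stand-in for that principle: three parity bits let the decoder locate and flip back any single corrupted bit in a 7-bit word.

```python
# Minimal Hamming(7,4) single-error-correcting code.
# Illustrative only: real ECC memory modules use wider SECDED codes over 64-bit words.
from typing import List

def encode(data: List[int]) -> List[int]:
    """Encode 4 data bits into a 7-bit codeword (positions 1..7, parity at 1, 2, 4)."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]

def decode(code: List[int]) -> List[int]:
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(code)
    # Each syndrome bit re-checks one parity group; together they spell out the
    # 1-based position of the flipped bit, or 0 if no error is detected.
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    error_pos = s1 + 2 * s2 + 4 * s4
    if error_pos:
        c[error_pos - 1] ^= 1  # flip the corrupted bit back
    return [c[2], c[4], c[5], c[6]]

# Usage: corrupt one bit of a stored word and recover the original data.
word = encode([1, 0, 1, 1])
word[5] ^= 1  # simulate a single bit flip, e.g. from electrical noise
assert decode(word) == [1, 0, 1, 1]
```

Correcting two or more simultaneous flips is beyond this code; that is why production memory adds an extra parity bit to at least detect double errors.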

This time-lapse video shows the installation of the new institutional computing cluster.

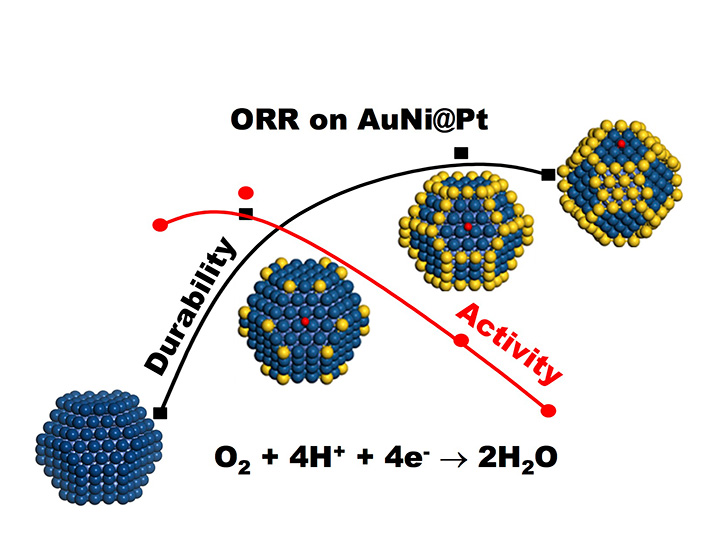

“As the complexity of a material increases, more computer resources are required to efficiently perform quantum mechanical calculations that help us understand the material’s properties. For example, we may want to sort through all the different ways that lithium ions can enter a battery electrode, determining the capacity of the resulting battery and the voltage it can support,” explained Mark Hybertsen, leader of the CFN’s Theory and Computation Group. “With the computing capacity of the new cluster, we’ll be able to conduct in-depth research using complicated models of structures involving more than 100 atoms to understand how catalyzed reactions and battery electrodes work.”
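One reason the computing cost grows so quickly is combinatorial. As a simplified, hypothetical illustration that ignores crystal symmetry (which in practice merges many equivalent arrangements), the number of distinct ways to place k lithium ions on n available sites in an electrode model is the binomial coefficient C(n, k), which Python’s `math.comb` computes directly.

```python
# Count distinct ways to place identical lithium ions on candidate sites.
# Simplification: every site is treated as distinct and symmetry-equivalent
# arrangements are NOT merged, so these counts are upper bounds.
from math import comb

def num_configurations(n_sites: int, n_ions: int) -> int:
    """Number of ways to choose which n_ions of the n_sites are occupied."""
    return comb(n_sites, n_ions)

# Hypothetical site counts, each filled halfway with lithium ions.
for n_sites in (8, 16, 32, 64):
    print(n_sites, num_configurations(n_sites, n_sites // 2))
```

Going from 32 to 64 sites raises the count from about 6 × 10^8 to about 1.8 × 10^18 arrangements, which is why exhaustive searches quickly call for a large cluster, or for smarter sampling strategies.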

This figure shows computer-assisted catalyst design for the oxygen reduction reaction (ORR), whose slow rate is one of the key challenges to advancing the application of fuel cells in clean transportation. Theoretical calculations based on nanoparticle models help identify ways not only to speed up this reaction on conventional platinum (Pt) catalysts and enhance their durability, but also to lower the cost of fuel cell production by alloying (combining) Pt catalysts with the less expensive elements nickel (Ni) and gold (Au).

“With these additional nodes, we’ll be able to serve a wider user community. Users don’t get just a share of the system; they get to use the whole system at a given time so that they can address very large scientific problems,” Kleese van Dam said.

Data-driven scientific discovery

Brookhaven is also building a novel computer architecture test bed for the data analytics community. Using this test bed, CSI scientists will explore different hardware and software, determining which are most important to enabling data-driven scientific discovery.

Scientists are initially exploring a newly installed Koi Computers system that comprises more than 100 Intel parallel processors for high-performance computing. This system makes use of solid-state drives, storage devices that function like a flash drive or memory stick but reside inside the computer. Traditional hard drives read and write information by using a motor to spin magnetic platters while a read/write head moves over them; solid-state drives have no moving parts and instead store data directly on electronic memory chips. As a result, solid-state drives access data faster and consume far less power, which could enable Brookhaven scientists to run computations much more efficiently and cost-effectively.

“We are enthusiastic to operate these ultimate hardware technologies at the SDCC [Scientific Data and Computing Center] for the benefit of Brookhaven research programs,” said SDCC Director Eric Lançon.

Next, the team plans to explore architectures and accelerators that could be of particular value to data-intensive applications.

For data-intensive applications, such as analyzing experimental results using machine learning, the system’s memory input/output (I/O) rate and compute power are important. “A lot of systems have very slow I/O rates, so it’s very time consuming to get the data, only a small portion of which can be worked on at a time,” Kleese van Dam explained. “Right now, scientists collect data during their experiment and take the data home on a hard drive to analyze. In the future, we’d like to provide near-real-time data analysis, which would enable the scientists to optimize their experiments as they are doing them.”
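Near-real-time analysis generally means processing data in pieces as it arrives rather than after the experiment ends. The sketch below is a hypothetical illustration, not a description of Brookhaven’s software: it uses Welford’s online algorithm to maintain a running mean and variance of incoming readings, so only one chunk of data needs to be in memory at a time.

```python
# Streaming (online) statistics: update mean and variance chunk by chunk,
# so the full dataset never has to sit in memory at once.
from dataclasses import dataclass
from typing import Iterable

@dataclass
class RunningStats:
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # sum of squared deviations from the current mean

    def update(self, chunk: Iterable[float]) -> None:
        """Fold a chunk of new readings into the running statistics (Welford's algorithm)."""
        for x in chunk:
            self.count += 1
            delta = x - self.mean
            self.mean += delta / self.count
            self.m2 += delta * (x - self.mean)

    @property
    def variance(self) -> float:
        """Sample variance of all readings seen so far (0.0 if fewer than two)."""
        return self.m2 / (self.count - 1) if self.count > 1 else 0.0

# Hypothetical stream: readings arrive in small chunks during an experiment.
stats = RunningStats()
for chunk in ([1.0, 2.0], [3.0, 4.0], [5.0]):
    stats.update(chunk)
    print(f"after {stats.count} readings: mean={stats.mean:.2f}, var={stats.variance:.2f}")
```

Because the summary is updated after every chunk, an experimenter could watch it evolve during a run and adjust settings, instead of waiting to analyze everything afterward.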

Participation in computing standards groups

Along with updating Brookhaven’s high-performance computing infrastructure, CSI scientists are becoming more involved in standardization groups for leading parallel programming models.

“By participating in these groups, we’re making sure the high-performance computing standards are highly supportive of our scientists’ requirements for their experiments,” said Kleese van Dam.

Currently, CSI scientists are in the process of applying to join the OpenMP (short for Open Multi-Processing) Architecture Review Board. This nonprofit technology consortium manages the OpenMP application programming interface (API) specification for parallel programming on shared-memory systems, in which multiple threads of a program, running on different processor cores, communicate and share data through a common memory.
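OpenMP itself is used from C, C++, and Fortran, typically through directives such as `#pragma omp parallel for reduction(+:sum)`. To keep all examples in one language, the sketch below is only a Python analog of that shared-memory pattern: several threads read the same list in a common address space, each sums its own block of indices, and the partial results are combined at the end. Python threads share memory just as OpenMP threads do, but because of CPython’s global interpreter lock this code illustrates the programming model rather than delivering a real speedup.

```python
# Python analog of an OpenMP-style shared-memory reduction (illustrative only).
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence

def parallel_sum(values: Sequence[float], n_threads: int = 4) -> float:
    """Sum values by splitting the index range across threads that share memory."""
    n = len(values)
    partial: List[float] = [0.0] * n_threads  # shared array; each thread writes only its own slot

    def worker(tid: int) -> None:
        # Static schedule: thread tid handles one contiguous block of indices.
        start = tid * n // n_threads
        stop = (tid + 1) * n // n_threads
        total = 0.0
        for i in range(start, stop):
            total += values[i]  # read directly from the shared input; no copies or messages
        partial[tid] = total

    with ThreadPoolExecutor(max_workers=n_threads) as pool:
        list(pool.map(worker, range(n_threads)))  # wait for every thread (and surface errors)

    return sum(partial)  # combine step, like the end of an OpenMP reduction

print(parallel_sum([float(i) for i in range(1, 101)]))  # prints 5050.0
```

Giving each thread its own slot in `partial` avoids two threads updating the same variable at once, which is the same race that OpenMP’s `reduction` clause prevents.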

In June 2016, Brookhaven became a member of the OpenACC (short for Open Accelerators) consortium. As part of this community of more than 20 research institutions, supercomputing centers, and technology developers, Brookhaven will help determine the future direction of the OpenACC programming standard for parallel computing.

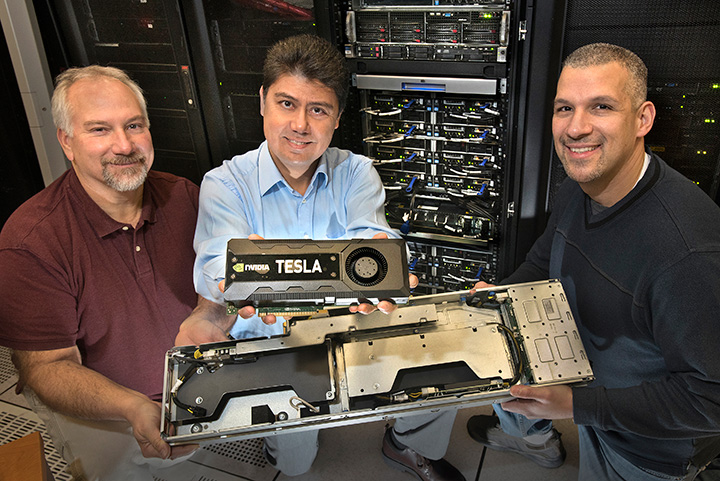

(From left to right) Robert Riccobono, Nicholas D'Imperio, and Rafael Perez with an NVIDIA Tesla graphics processing unit (GPU) and a Hewlett Packard compute node, where the GPU resides.

“From imaging the complex structures of biological proteins at NSLS-II, to capturing the real-time operation of batteries at CFN, to recreating exotic states of matter at RHIC, scientists are rapidly producing very large and varied datasets,” said Kleese van Dam. “The scientists need sufficient computational resources for interpreting these data and extracting key information to make scientific discoveries that could lead to the next pharmaceutical drug, longer-lasting battery, or discovery in physics.”

As an OpenACC member, Brookhaven Lab will help incorporate features of the latest C++ programming language standard into OpenACC. This effort will directly support the new institutional cluster that Brookhaven purchased from HPE.

“When programming advanced computer systems such as the institutional cluster, scientific software developers face several challenges, one of which is transferring data resident in main system memory to local memory resident on an accelerator such as a GPU,” said computer scientist Nicholas D'Imperio, chair of Brookhaven's Computational Science Laboratory for advanced algorithm development and optimization. “By contributing to the capabilities of OpenACC, we hope to reduce the complexity inherent in such challenges and enable programming at a higher level of abstraction.”
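The data-transfer challenge described here can be made concrete with a simple cost model. In OpenACC, a structured data region such as `#pragma acc data copy(a[0:n])` keeps an array resident in GPU memory across several compute kernels, instead of copying it in and out around each one. The Python sketch below compares the two strategies; the array size, kernel count, and link bandwidth are hypothetical placeholders, not measurements of Brookhaven’s cluster.

```python
# Toy model of host<->GPU transfer cost, with and without a persistent data region.
# All numbers below are hypothetical placeholders chosen for illustration.

def transfer_seconds(n_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Time to move n_bytes across the host-device link at a given bandwidth."""
    return n_bytes / bandwidth_bytes_per_s

def total_transfer_time(n_bytes: float, n_kernels: int,
                        bandwidth_bytes_per_s: float, data_region: bool) -> float:
    """Total copy time for n_kernels that each operate on the same array.

    Without a data region, the array is copied to the GPU before and back after
    every kernel (2 transfers per kernel). With a data region, it is copied in
    once at the start and out once at the end (2 transfers in total).
    """
    transfers = 2 if data_region else 2 * n_kernels
    return transfers * transfer_seconds(n_bytes, bandwidth_bytes_per_s)

ARRAY_BYTES = 8e9      # hypothetical 8 GB array
LINK_BANDWIDTH = 16e9  # hypothetical 16 GB/s host-device link
KERNELS = 10           # hypothetical number of kernels touching the array

per_kernel = total_transfer_time(ARRAY_BYTES, KERNELS, LINK_BANDWIDTH, data_region=False)
resident = total_transfer_time(ARRAY_BYTES, KERNELS, LINK_BANDWIDTH, data_region=True)
print(f"copy around every kernel: {per_kernel:.1f} s")  # 20 transfers x 0.5 s
print(f"one data region:          {resident:.1f} s")    # 2 transfers x 0.5 s
```

Under these assumptions, keeping the data resident cuts transfer time by a factor equal to the number of kernels. Directive-based models such as OpenACC aim to let programmers express that choice with a single annotation rather than hand-written memory management.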

A centralized Computational Science Initiative

These standards development efforts and technology upgrades come at a time when CSI is bringing all of its computer science and applied mathematics research under one roof. In October 2016, CSI staff moved into a building that will accommodate a rapidly growing team and will include collaborative spaces where Brookhaven Lab scientists and facility users can work with CSI experts. The building comprises approximately 60,000 square feet of open space that Brookhaven Lab, with the support of DOE, will develop into a new data center to house its growing data, computing, and networking infrastructure.

“We look forward to the data-driven scientific discoveries that will come from these collaborations and the use of the new computing technology,” said Kleese van Dam.

Brookhaven National Laboratory is supported by the Office of Science of the U.S. Department of Energy. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States, and is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.

2016-6470 | INT/EXT | Newsroom