VISION: Voice-Controlled AI for Next-Gen Scientific Experimentation

October 21, 2025

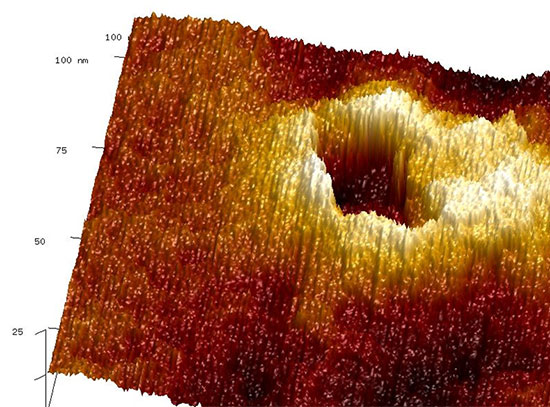

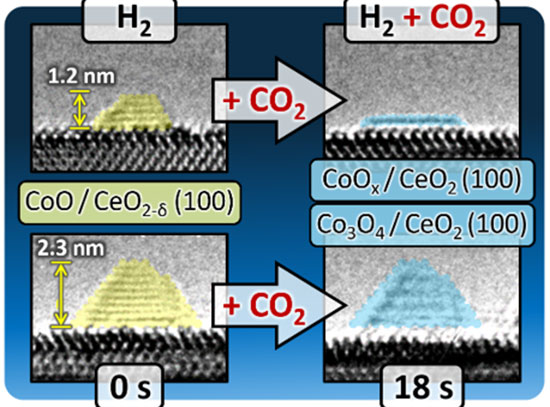

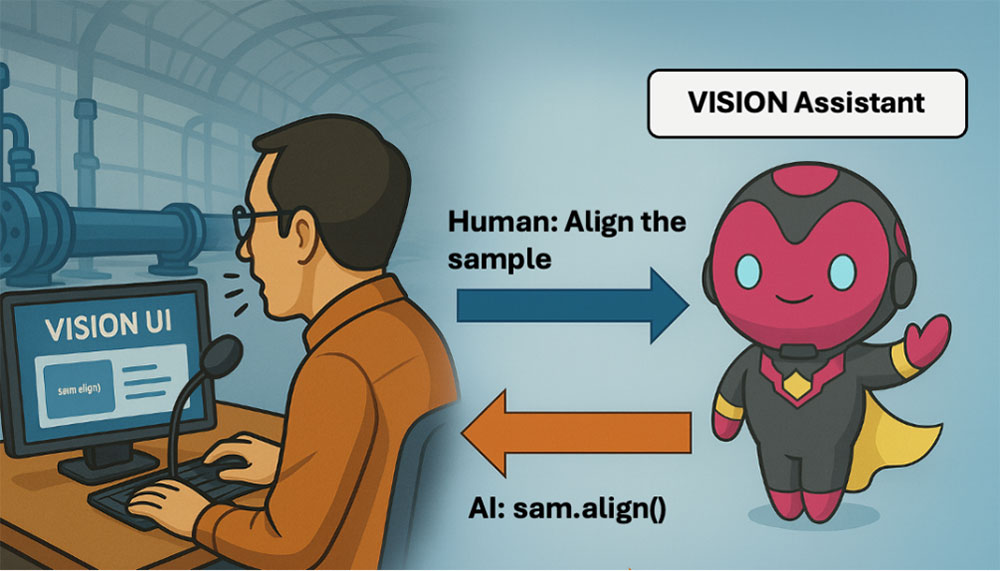

VISION is an AI assistant that facilitates natural language communication between humans and scientific instrumentation.

Scientific Achievement

VISION, a modular AI assistant, enabled the first voice-controlled beamline experiment, supporting natural-language instrument operation and real-time analysis.

Significance and Impact

This advance showcases how AI can seamlessly extend human interaction with complex scientific instruments, laying foundational infrastructure for AI-augmented scientific discovery.

Research Details

This work introduces VISION, a modular assistant based on large language models and designed to enable natural, human-like interaction with complex scientific instruments, specifically synchrotron beamlines. Rather than relying on scripts or manual control, researchers can speak or type in natural language to control beamline hardware, collect data, and run analysis. This marks the first voice-controlled experiment at an x-ray scattering facility. VISION adopts a modular architecture in which specialized AI components manage distinct tasks. This design enables integrated experiment control through a single interface, with computation handled on separate hardware for efficient deployment. VISION translates voice or text queries into instrument code with inference times of a few seconds, which is much faster than manual coding and makes it an effective learning and drafting tool. VISION is presented as part of a broader initiative toward a “scientific exocortex”, an ecosystem of interconnected AI agents designed to accelerate scientific discovery and enhance human cognition.

- Tailored a voice transcription model to handle domain-specific jargon.

- Evaluated multiple AI models for instrument operation.

- Built a graphical user interface for natural language interactions between users and AI.

- VISION is a stepping stone toward the ambitious idea of a "Science Exocortex": a team of AI assistants that work together to expand the scope of research that scientists can tackle.
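
The modular design described above, in which specialized components handle distinct tasks behind a single interface, can be pictured with a minimal Python sketch. This is an illustration only and not the VISION implementation: every name here (`classify`, `draft_operation`, `draft_analysis`, `reply`, `handle`) is hypothetical, and simple keyword matching stands in for the language models that a real system would use.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Result:
    task: str    # which component handled the request
    output: str  # text that component produced


def classify(query: str) -> str:
    """Toy stand-in for a classifier component (keyword matching, not an LLM)."""
    q = query.lower()
    if any(word in q for word in ("measure", "scan", "move", "expose")):
        return "operate"
    if any(word in q for word in ("analyze", "plot", "fit")):
        return "analyze"
    return "chat"


def draft_operation(query: str) -> str:
    # Hypothetical: a real component would draft instrument code with a model.
    return f"# drafted instrument command for: {query}"


def draft_analysis(query: str) -> str:
    # Hypothetical: a real component would draft an analysis call with a model.
    return f"# drafted analysis step for: {query}"


def reply(query: str) -> str:
    # Fallback component for requests that are neither operation nor analysis.
    return f"(general reply to: {query})"


# Each task maps to one specialized component; new components can be added
# here without changing the single entry point below.
COMPONENTS: Dict[str, Callable[[str], str]] = {
    "operate": draft_operation,
    "analyze": draft_analysis,
    "chat": reply,
}


def handle(query: str) -> Result:
    """Single interface: classify the request, then dispatch to one component."""
    task = classify(query)
    return Result(task=task, output=COMPONENTS[task](query))


if __name__ == "__main__":
    for text in ("Measure the sample for 5 seconds", "Plot the last image", "Hello"):
        result = handle(text)
        print(result.task, "|", result.output)
```

In this sketch a spoken request would first pass through a separate transcription step (not shown) and then enter `handle` as plain text, so voice and typed input share the same path. Keeping each component behind a common function signature is one way to let individual models be swapped or evaluated independently.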

Publication Reference

Mathur, Shray, Noah van der Vleuten, Kevin G. Yager, and Esther HR Tsai. "VISION: a modular AI assistant for natural human-instrument interaction at scientific user facilities." Machine Learning: Science and Technology 6, no. 2 (2025): 025051.

DOI: https://doi.org/10.1088/2632-2153/add9e4

Press Release: https://www.bnl.gov/newsroom/news.php?a=122449

Acknowledgment of Support

The work was supported by the DOE Early Career Research Program. This research also used beamline 11BM (CMS) of the National Synchrotron Light Source II and utilized the x-ray scattering partner user program at the Center for Functional Nanomaterials (CFN), both of which are U.S. Department of Energy (DOE) Office of Science User Facilities operated for the DOE Office of Science by Brookhaven National Laboratory under Contract No. DE-SC0012704. We thank beamline scientist Dr. Ruipeng Li for consulting on the project and for his support at the beamline, and Dr. Lee Richter for providing the polymer sample for the beamtime.
